20th Century Studios

Before the recent arrival of AI chatbots, AI music, and AI art generators, the rise of artificial intelligence represented a far-off, futuristic scenario in the public consciousness. For a long time, AI's ascendence was thought of as some doomsday scenario in which the real-world equivalent of Skynet would become self-aware and send killer robots out to either enslave or wipe out the entire human race. It was the stuff of films and fantasy, even while we all sort of had an inkling that at some point in the distant future, it could well become a reality.

Recent years have taught us that while we were all worrying in a vague sort of way about a "Terminator"-like scenario, the immediate threat of AI had nothing to do with self-aware killer robots. We now live in an age where we are surrounded by so-called narrow AI. This refers to a version of the technology built for a specific purpose such as generating text or playing chess, and is at the core of all the large language models that have been causing such alarm across industries of late. The issue of robots writing screenplays was a major sticking point of the WGA strikes of 2023, while actors similarly went on strike partly due to the threat of studios using AI-generated versions of their likenesses in future projects. Elsewhere within the film industry, the threat of some god-awful movie written by AI looms large, as creators including Joe Russo, Zack Snyder, and Jason Blum stand ready to welcome such developments with open arms.

The point is that all of this tumult is not the result of some super-intelligent AI system becoming self-aware, but the result of comparatively simple narrow AI systems, which are slowly revealing themselves to be a much more concerning contemporary threat to artistic integrity and authentic creativity. What's worse is that so many prominent figures within the film industry seem ready to embrace the coming narrow AI takeover, which in the face of recent artificial intelligence-wrought blunders seems at best misguided. In just the past year, we've seen a backlash to AI-generated backgrounds in "True Detective: Night Country" and a significant controversy build around the use of AI art in the otherwise decent horror mockumentary "Late Night with the Devil."

For those keeping up with what will surely become an increasingly pressing issue over the coming years, there is another less conspicuous but just as concerning controversy brewing elsewhere in the industry: AI remasters of existing movies.

AI arrives at a tumultuous time for the film industry

Warner Bros.

The past couple of years have been particularly chaotic and turbulent for the film industry. As the effect of so-called superhero fatigue sends Hollywood scrambling for new IP to regurgitate and repackage, streaming services have seemingly kicked their output of middling "content" into high gear, further upsetting the old order. On the positive side, exciting new filmmaking trends have emerged to balance out the onslaught of nostalgia bait. Films such as "Skinamarink" and "I Saw the TV Glow" heralded the arrival of an entirely new style, one informed by internet aesthetics and adolescent years spent immersed in the online age. Meanwhile, films like "Spider-Man: Across the Spider-Verse" showed how the fascinating concept of the multiverse — thus far given short shrift by superhero blockbusters eager to shoehorn tortured cameos into their narratives — could produce truly boundary-pushing movies, with its refulgent concatenation of art styles speaking to our current moment where we stand on the event horizon of all cultural history.

Amid all this, AI has started to rear its head as a burgeoning force within the film industry. Aside from the threat of AI-generated scripts and studios scanning actors' likenesses for future artificial performances, those studios have also quietly sunk vast amounts of time and money into AI development, with Disney forming an entire business unit to coordinate the future use of AI and augmented reality (via Reuters). Meanwhile, Lionsgate has partnered with artificial intelligence firm Runway to train a new AI model on the studio's films in order to develop future projects (via The Hollywood Reporter), which followed Warner Bros. signing a deal for a system that would use AI to help the studio make decisions about which projects should be greenlit (via THR).

In the face of all this, AI remasters of existing movies may seem like a small issue. But for dedicated film fans, this rising trend of sending movies through AI remastering tools as an alternative to carrying out full-on restorations is just as controversial as any other of these seemingly larger developments. You've likely seen some of the monstrosities wrought by these learning algorithms, which have a penchant for turning background actors into hideous eldritch beasts from the beyond. But there's a lot more to this whole thing than unintentionally mangled visages, as evidenced by the fact that the director who warned us all about AI has come to embrace the technology.

James Cameron's AI restoration controversy

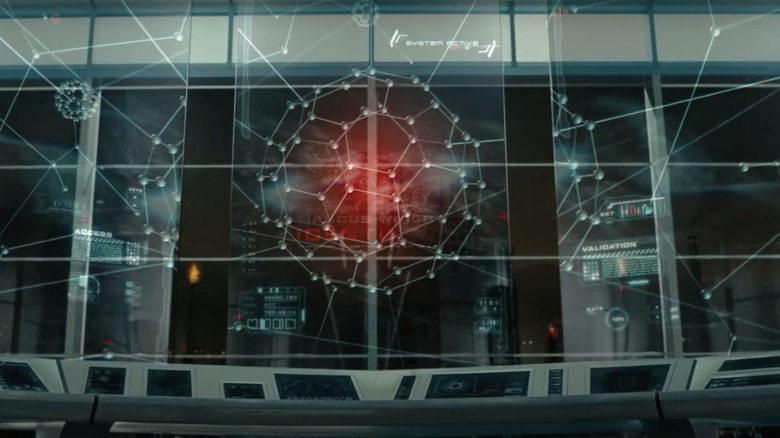

20th Century Studios

In 2024, three James Cameron classics came to 4K. "The Abyss," "True Lies," and "Aliens" were all given Ultra HD Blu-ray releases that included newly updated prints of the films, which were supposed to be the most crystal clear versions ever released. But when the new releases arrived, they proved immediately controversial. Why? Because Cameron and his Lightstorm Entertainment group had used AI to "restore" the film prints, recruiting Peter Jackson's New Zealand-based Park Road Post Production to help clean up the films with their machine-learning software, the same technology used on Jackson's shapeless Disney+ documentary "The Beatles: Get Back" and his vivid World War I documentary "They Shall Not Grow Old."

Unfortunately, the use of this cutting-edge technology yielded versions of the films that some fans found uncanny for their distinct visual distortions. Journalist Chris Person told the New York Times, "It just looks weird, in ways that I have difficulty describing. It's plasticine, smooth, embossed at the edges. Skin texture doesn't look correct. It all looks a little unreal." It wasn't just the odd detractor that spoke out against these machine-generated restorations either. Reddit is full of complaints, while YouTuber Nerrel produced an entire video on how AI had "ruined" Cameron's films, gaining more than a million views and producing a comment section flush with users in violent agreement with the video's sentiments. One user in particular summed up the point being made, writing, "The studio had the option to obtain REAL detail by simply re-scanning the original film rather than asking an AI to hallucinate detail for them."

The emphatic nature of these complaints along with their sheer volume should tell you that this is about more than films where actors look a bit off and the film grain has been reduced slightly too much. So, how bad are these AI restorations really? And as this technology becomes more and more prevalent, how will you be able to tell if one of your favorite movies has been fed through the AI machine?

The telltale demonic faces of AI restorations

CBS

To be clear, the issue with AI restoration is not simply that skin textures look a little waxy or that lines have been over-sharpened. These machine learning algorithms are also adding objects and artifacts into movies that previously weren't there, leading to multiple visual quirks that are at once amusing and depressing. Particularly in the case of "True Lies" and "Aliens," the technology seems to struggle to render the faces of actors in the backgrounds of shots. As pointed out by Nerrel in his video, Jenette Goldstein's Private Jenette Vasquez can be seen in the background of one "Aliens" scene with a constantly morphing face, as the AI clearly tries to interpret the data from the print it was fed and struggles to land on how the pixels should actually be arranged. It makes for a cursed little pocket of ungodly pixel scrambling as Private Vasquez's face morphs between various demonic arrangements à la the demons of "Devil's Advocate." This kind of thing is prevalent throughout the "True Lies" Ultra HD update, too, and is one of the biggest telltale signs that a film has been handed over to artificial intelligence for its remaster.

But it's not just beloved Cameron classics that have been affected. When "I Love Lucy" was given a Blu-ray re-release in November 2024, it came complete with an array of infernal hell beasts populating its backgrounds, with one Twitter/X user pointing out some of the more egregious examples:

AI upscaling has turned "I Love Lucy" into some kind of horror. https://t.co/WriL3nqwXg pic.twitter.com/wD4VDypN8s

— 🄼🄴🄴🄷🄰🅆🄻 ⭕ (@meehawl) November 30, 2024

To be clear, we're not talking about directors going back and adding VFX after the fact, like when George Lucas added CGI to the original "Star Wars" trilogy in the '90s and for its 2011 Blu-ray re-release. We're talking about a machine making decisions about what should and shouldn't appear on-screen, and even fabricating entire elements that weren't there to begin with.

In the case of "Aliens" or "True Lies," this is surely just one undesirable result of applying a technology to 35mm that was developed to update extremely degraded footage. 35mm already has no problem preserving fine details and producing a stunning image by itself, and has a digital resolution of around 5.6K or 5,600 × 3,620 pixels, which is more than enough to create a beautiful image without the need for AI "enhancements."

How to tell if AI has been unleashed on your favorite movie

20th Century Studios

Back in 2014, "Buffy the Vampire Slayer" received an HD remaster that saw the beloved series transformed into what many fans considered to be an over-sharpened yet also somehow overly smoothed mess. Video essays have been made on the "tragedy" of the "Buffy" HD remaster, which is populated by actors who look like they've had one of those Instagram skin smoothing filters applied, and fans have been filling forums with their distaste for Fox's remasters ever since. Lamentably, for all its supposed power, AI has seemingly done little to help reduce these kinds of issues, as James Cameron's 4K remasters showcase much of the uncanny horror of the "Buffy" remasters.

Even for actors who haven't seemingly been possessed by Beelzebub himself, there is an uncanny look to their appearance: a combination of over-sharpened lines and over-smoothed skin akin to, though not quite as pronounced as, the "Buffy" remasters. This over-sharpening also causes another major issue. The halo effect imbued by the overly defined edges of actors and objects makes certain scenes look like they were shot against a green screen, a particular shame for James Cameron movies, which are known for their practical effects and stunt work. In the case of "True Lies," for instance, actors and stunt performers risked their lives when a stunt nearly ended in disaster, only to have their work transformed into something that looks like it was shot on one of Marvel's sprawling blue screen hellscapes.

Other telltale signs of an AI overhaul are when certain details, especially hair, remain sharp even while characters are moving and obscured by motion blur. Similarly, details in the background are often caught by the AI's sharpening function, popping out of otherwise blurry footage and drawing attention away from the main action. These might seem like small errors, and many of them sort of are. But all of these missteps combine to create that same feeling from the updated "Buffy" episodes, i.e. that something is just off. What's more, we're talking about UltraHD remasters here — a format that is predicated on providing the best possible image quality. In that sense, nitpickery feels like it comes with the territory.

What do we want from a remaster?

TriStar Pictures

The real question underlying all this is: What is the point of a remaster? Is it to update the look of a movie shot on 35mm to more closely resemble digital film? Is it to make things as crystal clear as possible? I would argue that these goals actually subvert some of the most important aspects of filmmaking.

Take the practice of removing film grain, for example. Typically this is done by a process known as digital noise reduction, or DNR. This tech long predates AI but looks to have been a major part of the software used in the James Cameron 4K releases. For whatever reason, studios and directors decided at some point that film grain was undesirable and have spent years trying to remove it from their back catalogues via re-releases and remasters. One of the worst offenders was another Cameron classic, "Terminator 2: Judgment Day," which arrived in 4K UHD form in 2017 having been DNR'd to hell, producing similarly waxy-looking characters to those in the most recent Cameron remasters. It followed a Blu-ray release of the movie some years earlier that also went heavy on the DNR, much to fans' dismay.

While the intent is seemingly to produce as clear an image as possible, this isn't necessarily all that desirable an outcome. Anyone who grew up in the pre-digital, pre-streaming, pre-social media age will surely recall a time when movies themselves possessed a certain magic that has been lost in the era of 4K digital releases and non-stop "content" creation. In a time before movies arrived accompanied by massive social media marketing campaigns, trailers that revealed all the details, and articles detailing every development in the lead up to a movie's release, films used to feel like some magical transmission from Hollywood. There was a mystery and an allure to them, and though it may sound trivial, film grain actually served to enhance this effect, giving films a faint sense that the footage was being broadcast from behind some otherworldly veil. It was part of the visual style, and removing it seems to represent a desire to remove some crucial part of the viewing experience. Is that what we want from remasters? Better yet, is that what we want to teach AI about how films should look?

AI and the alignment problem when it comes to our media

20th Century Studios

As machines inevitably take over more jobs previously carried out by humans, it would seem prudent to ask what kind of objectives and goals we want to imbue this technology with. In the context of AI at large, this is known as the alignment problem, which refers to the idea that we need to ensure artificial intelligence systems are aligned with human values and goals as they become more powerful and prevalent. In the context of these AI remasters, then, there is another alignment problem in microcosm. What do we want from these remasters, and how do we make sure the AI algorithms powering the software are aligned with those desires? What's more, who gets to decide what those desires are? James Cameron? Judging by his track record so far, that doesn't seem like the best idea.

You don't have to be a film purist to argue against these AI restorations. Very few believe that a film negative should be preserved with every scratch and speck of dust intact. But it seems like a fairly reasonable middle ground to assert that a real restoration would be something akin to what the original "Alien" got in 2019, or what "2001: A Space Odyssey" received when Christopher Nolan oversaw its return to theaters in 2018. The director led a process that involved scanning the original camera negative and emphasizing a complete lack of what he called "digital tricks, remastered effects, or revisionist edits." A similar process that involves beginning with the original negative, cleaning up the more obvious imperfections and scratches, scanning the cleaned film, and then bringing in the director to preserve their vision for color grading surely seems like the ideal scenario for a real restoration — something which could have been applied to "True Lies," with The Digital Bits claiming to have confirmed via Cameron's own Lightstorm Entertainment that Park Road was working from recent 4K scans of the original camera negative.

Does AI have a place in film restoration? Surely the answer is yes. In the case of "Aliens," which was shot on a high-speed film stock that yielded arguably too much film grain in the final image, bringing out finer details that were actively degraded by the grain seems like something worth doing, and if AI can get the job done, then why not use it? The issue seems to be that while all this talk of AI "ruining" films is somewhat rhetorical, we are at a point where we have to decide how much worse this stuff can get. In much the same way as the WGA and SAG stood firm to ensure AI use was somewhat regulated moving forward, anyone who really cares about film will surely want to ensure future iterations of their favorites don't come with hellish goblins populating the backgrounds and wax figures in place of movie stars.
