Efficient connectivity is a key enabler for hyperscale and exascale clusters with tens or even hundreds of thousands of nodes — and traditional Ethernet is anything but.

That's not because of its peak throughput per se, but because of its architecture and the very way it transfers data. To resolve that bottleneck, a group of companies led by Meta, Microsoft, and Oracle began developing a next-generation data-center connectivity standard to deliver low-latency, high-bandwidth scale-out networking over standard Ethernet and IP. In mid-2025, the Ultra Ethernet Consortium (UEC), which now includes over 100 companies, published the Ultra Ethernet 1.0.1 specification.

Need for nodes … and connectivity

Ethernet has long been the backbone of enterprise networking and the connectivity technology of choice for cloud data centers, and now for AI data centers. However, traditional Ethernet protocols were never designed for scale-out environments with up to a million nodes. But Ultra Ethernet? Yeah, it can handle that.

The core design of traditional Ethernet networking, specifically TCP's ordered delivery and reactive congestion control, struggles when hundreds of thousands of connections are active at once (a common situation for AI and HPC workloads). As node counts grow, packets increasingly compete for the same links and buffers and get delayed, and switches must keep track of too many flows, causing false congestion and unstable latency. Ethernet also relies on software-heavy reliability and best-effort delivery, which is good enough for enterprise networks but adds latency and overhead in tightly synchronized AI and HPC clusters.
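A toy model shows why strict ordering hurts when even one packet is late (head-of-line blocking). The Python sketch below is a simplification, not a model of real TCP: it assumes one packet arrives per time step, and the function names and arrival schedule are hypothetical. It counts how many packets the application can consume at each step under in-order versus unordered delivery.

```python
from typing import Dict, List, Set


def ordered_delivery(arrivals: Dict[int, int], horizon: int) -> List[int]:
    """Count packets handed to the application at each time step under strict ordering.

    `arrivals` maps a packet's sequence number to the step at which it reaches
    the receiver. A packet is released only after every lower-numbered packet
    has been released, so one late packet stalls everything behind it.
    """
    released: List[int] = []
    buffered: Set[int] = set()
    next_seq = 0
    for t in range(horizon):
        buffered |= {seq for seq, at in arrivals.items() if at == t}
        count = 0
        while next_seq in buffered:  # drain the contiguous run starting at next_seq
            buffered.remove(next_seq)
            next_seq += 1
            count += 1
        released.append(count)
    return released


def unordered_delivery(arrivals: Dict[int, int], horizon: int) -> List[int]:
    """Count packets handed to the application per step when each is usable on arrival."""
    return [sum(1 for at in arrivals.values() if at == t) for t in range(horizon)]


# Eight packets arrive one per step, except packet 1, which is delayed until step 7.
arrivals = {seq: seq for seq in range(8)}
arrivals[1] = 7

print("ordered:  ", ordered_delivery(arrivals, 8))
print("unordered:", unordered_delivery(arrivals, 8))
```

In this run the ordered receiver releases packet 0, then nothing for six steps, and finally seven packets at once when packet 1 shows up; the unordered receiver passes each packet up as soon as it lands. Both deliver all eight packets, but the ordered one does so in a single late burst.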

Supercomputers generally rely on specialized interconnects such as InfiniBand, which feature hardware-level flow control, deterministic routing, and hardware-managed reliability. With these interconnects, the NIC itself takes care of packet acknowledgments, retransmissions, and error recovery, opening the door to clusters with more nodes. But they're proprietary and expensive, and therefore were barely considered for next-generation AI and HPC data centers when Meta, Microsoft, and Oracle began their work in 2022.

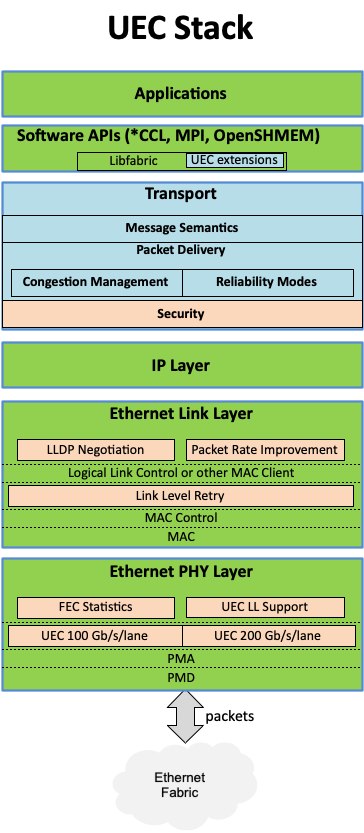

Rather than adopting an existing solution, the UEC created an entirely new networking standard that reshapes data transport by removing the traditional requirement for packets to arrive in order. The new standard introduces an all-new architecture, from the software stack down to the physical layer, designed for unordered, connectionless communication over existing Ethernet and IP infrastructure. The result is an efficient and highly scalable transport stack that outperforms legacy RDMA implementations while remaining compatible with today's data-center hardware and software ecosystems (e.g., the IEEE 802.1 and 802.3 standards and IETF RFCs).

Ultra Ethernet: Design goals

The consortium's main goal was to solve long-standing issues inherited from RDMA technologies such as RoCE v2, whose transport design traces back to InfiniBand in the early 2000s. Those protocols were optimized for ordered, low-latency networks, but they lacked multipathing, effective congestion control, and built-in security.

RDMA's rigid ordering mechanisms force all packets of a flow along a single route, making networks prone to false congestion and poor load balancing, a weakness that is particularly costly for the bursty workloads seen in today's AI and HPC clusters. As a consequence, a modern AI cluster of tens of thousands of GPUs can lose roughly 30% of its performance to contemporary Ethernet's shortcomings.
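The difference between pinning each flow to one path and spraying individual packets across all paths can be sketched in a few lines of Python. This is a simplified model under stated assumptions: four equal-cost paths, CRC32 standing in for a switch's ECMP hash (real switches hash header fields such as the 5-tuple), and hypothetical flow names.

```python
import zlib
from typing import List, Tuple

NUM_PATHS = 4  # assumed number of equal-cost paths between two switches


def ecmp_per_flow(flows: List[Tuple[str, int]]) -> List[int]:
    """Per-flow ECMP: every packet of a flow follows the path its flow ID hashes to."""
    load = [0] * NUM_PATHS
    for flow_id, packets in flows:
        path = zlib.crc32(flow_id.encode()) % NUM_PATHS  # CRC32 stands in for a switch hash
        load[path] += packets
    return load


def packet_spray(flows: List[Tuple[str, int]]) -> List[int]:
    """Packet spraying: packets go round-robin over all paths, ignoring flow boundaries."""
    load = [0] * NUM_PATHS
    next_path = 0
    for _flow_id, packets in flows:
        for _ in range(packets):
            load[next_path] += 1
            next_path = (next_path + 1) % NUM_PATHS
    return load


# Four large, synchronized flows (hypothetical names), as in a collective operation.
flows = [(f"gpu{i}->gpu{i + 4}", 1000) for i in range(4)]

print("per-flow ECMP:", ecmp_per_flow(flows))
print("packet spray: ", packet_spray(flows))
```

Spraying gives each path exactly 1,000 packets. With per-flow hashing, each flow lands wholesale on one path, and a uniformly random hash would put at least two of the four flows on the same path about 91% of the time (1 - 4!/4^4), leaving other paths idle. Spraying only pays off, however, if the receiver can cope with the reordering that multiple paths introduce.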

Ultra Ethernet is designed to split network responsibilities into clearer parts, separating reliability from semantics. As the protocol's architects put it, connections should not be heavyweight, permanent, or software-visible. Instead of having one system handle everything (message integrity, flow control, and connection state), these functions are now divided: a reliability layer takes care of packet delivery, acknowledgments, and retransmissions, while a semantic layer handles higher-level details like messages, addressing, and tags.

This design lets packets arrive out of order without confusing the software above it. Furthermore, because the change happens below the application level, existing apps, middleware, and Ethernet/IP networks can keep working as they are, which greatly simplifies the deployment of Ultra Ethernet in existing data centers.
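The reliability/semantics split can be illustrated with a toy receiver in Python. Each packet is assumed to carry its message ID and byte offset, so the semantic side can write every payload straight into its final position, and a message is complete once all of its bytes are present, whatever the arrival order. The `Packet` fields and class names are hypothetical and do not reflect the actual UET header format.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class Packet:
    msg_id: int    # message this fragment belongs to (hypothetical header field)
    offset: int    # byte offset of the payload within that message
    payload: bytes


class PlacementReceiver:
    """Toy semantic layer that writes each payload at its offset on arrival.

    Assumes a separate reliability layer has already discarded duplicates,
    so counting received bytes is enough to detect completion.
    """

    def __init__(self) -> None:
        self.buffers: Dict[int, bytearray] = {}
        self.remaining: Dict[int, int] = {}

    def post_receive(self, msg_id: int, length: int) -> None:
        # The application pre-posts a buffer of known size for the message.
        self.buffers[msg_id] = bytearray(length)
        self.remaining[msg_id] = length

    def on_packet(self, pkt: Packet) -> bool:
        """Place the payload directly; return True once the whole message is present."""
        buf = self.buffers[pkt.msg_id]
        buf[pkt.offset:pkt.offset + len(pkt.payload)] = pkt.payload
        self.remaining[pkt.msg_id] -= len(pkt.payload)
        return self.remaining[pkt.msg_id] == 0


# Three fragments of a 12-byte message arrive in scrambled order.
rx = PlacementReceiver()
rx.post_receive(msg_id=7, length=12)
fragments = [Packet(7, 8, b"rld!"), Packet(7, 0, b"hell"), Packet(7, 4, b"o wo")]
completions = [rx.on_packet(p) for p in fragments]
print(completions, bytes(rx.buffers[7]))
```

Because the offset travels with each packet, nothing has to wait for a missing predecessor: the buffer simply fills in, and the application sees one completed message.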

Tailored evolution turns into a revolution

Like a napoleon pastry, Ultra Ethernet technology has many layers that combine to form something greater than the sum of their parts. Their tailored evolutionary steps have created a major leap forward for networking technology aimed at hyperscale AI and HPC deployments.

The Physical Layer of Ultra Ethernet remains based on standard IEEE 802.3 Ethernet signaling and optics, meaning it uses the same cables, transceivers, and switch ports already deployed in today's data centers. But UE's physical layer introduces enhanced forward error correction (FEC), lower-latency link training, more precise timing and jitter specifications, better telemetry (to give operators more visibility into signal integrity and link health) and improved synchronization for large-scale AI and HPC deployments. Arguably the key improvements of Ultra Ethernet are in the link and transport layers.

The Link Layer manages direct connections between switches and network adapters and ensures signal integrity, low latency, and reliable performance across a data center. Ultra Ethernet's link layer introduces three optional upgrades: Credit-Based Flow Control (CBFC) keeps data flowing steadily between devices by ensuring a sender never transmits more than the receiver has buffer space for; Link Layer Retry (LLR) quickly recovers from transmission errors by retransmitting corrupted frames across a single link rather than end to end; and Packet Trimming (PT) lets a congested switch forward a truncated packet (essentially its header) instead of silently dropping it, so the loss is detected and repaired quickly. Together, they help prevent congestion, reduce data loss, and keep network performance reliable and predictable.
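Credit-based flow control can be sketched as a sender that may only transmit while it holds credits, each credit standing for one free buffer slot at the receiver, with the receiver returning a credit whenever it drains a slot. The Python below is a toy model under that assumption (one credit per packet, hypothetical class names); the UE CBFC mechanism defines its own credit units and signaling.

```python
from collections import deque
from typing import Deque, List, Tuple


class CreditReceiver:
    """Receiver with a fixed number of buffer slots (one credit = one slot)."""

    def __init__(self, slots: int) -> None:
        self.slots = slots
        self.queue: Deque[int] = deque()

    def accept(self, pkt: int) -> None:
        if len(self.queue) >= self.slots:
            raise RuntimeError("buffer overflow: sender ignored its credits")
        self.queue.append(pkt)

    def drain(self, n: int) -> int:
        """Forward up to n queued packets downstream; return the credits freed."""
        freed = min(n, len(self.queue))
        for _ in range(freed):
            self.queue.popleft()
        return freed


class CreditSender:
    """Sender that transmits only while it holds credits."""

    def __init__(self, initial_credits: int) -> None:
        self.credits = initial_credits  # starts equal to the receiver's free slots
        self.next_pkt = 0

    def send_burst(self, rx: CreditReceiver, want: int) -> int:
        sent = 0
        while sent < want and self.credits > 0:
            rx.accept(self.next_pkt)
            self.credits -= 1
            self.next_pkt += 1
            sent += 1
        return sent


rx = CreditReceiver(slots=4)
tx = CreditSender(initial_credits=4)
history: List[Tuple[int, int]] = []
for _ in range(5):
    sent = tx.send_burst(rx, want=3)   # bursty sender tries 3 packets per step
    tx.credits += rx.drain(n=2)        # receiver drains 2 per step and returns credits
    history.append((sent, len(rx.queue)))
print(history)
```

The sender gets its first bursts through, then settles at the receiver's drain rate of two packets per step, and the queue never exceeds its four slots, so the overflow check never fires. Pause-based schemes such as PFC, by contrast, react only after a buffer has filled past a threshold.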

The Transport Layer is the core of Ultra Ethernet, as it enables fast, reliable, and secure data transfers between systems even when packets arrive out of order. The layer uses the Ultra Ethernet Transport (UET) protocol, which is divided into four sublayers (Semantic, Packet Delivery, Congestion Management, and Transport Security) that respectively define how messages are sent, track packet delivery and retransmission, control network traffic, and protect data through encryption. Even when packets take different routes and arrive out of order, the system places them correctly as they arrive, keeping latency low, throughput high, and performance steady across massive AI and HPC clusters.
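Selective acknowledgment is one common way a receiver can tolerate out-of-order arrival while still letting the sender retransmit only what is actually missing. The Python sketch below tracks received packet sequence numbers (PSNs) and reports a cumulative ACK plus the gaps; the function name and the ACK format are hypothetical and are not the encoding used by UET's Packet Delivery sublayer.

```python
from typing import Iterable, List, Tuple


def build_sack(received: Iterable[int]) -> Tuple[int, List[int]]:
    """Return (cumulative_ack, missing_psns) for a set of received PSNs.

    cumulative_ack is the lowest PSN not yet received: everything below it
    arrived. missing_psns lists the holes between cumulative_ack and the
    highest PSN seen, i.e. the only packets the sender needs to resend.
    """
    got = set(received)
    if not got:
        return 0, []
    cum = 0
    while cum in got:
        cum += 1
    highest = max(got)
    missing = [psn for psn in range(cum, highest + 1) if psn not in got]
    return cum, missing


# Packets were sprayed over several paths; 2 and 5 are lost, the rest arrive reordered.
arrivals = [0, 3, 1, 6, 4, 7]
cum_ack, to_resend = build_sack(arrivals)
print(cum_ack, to_resend)
```

Here the receiver reports that everything below PSN 2 arrived and that only PSNs 2 and 5 are missing. A go-back-N scheme, as used by many traditional RoCE NICs, would instead resend every packet from PSN 2 onward, six packets rather than two.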

The Storage Layer of Ultra Ethernet is more of an integration and optimization effort than a full redesign. It builds on existing Ethernet-based storage and RDMA protocols such as NVMe over Fabrics and RoCE rather than replacing them. Nonetheless, it tailors these protocols to large AI clusters by integrating them tightly with UET, reducing I/O delays, improving consistency across thousands of nodes, and connecting high-speed networking with storage infrastructure more efficiently.

As for the Management Layer, Ultra Ethernet turns management from basic device configuration into fabric-wide, automated network orchestration for large AI and HPC systems. It includes automated tools for device discovery, network topology mapping, and performance monitoring, enabling administrators to quickly diagnose and fix problems across thousands of interconnected nodes.

The Software Layer (or rather Software Stack) of Ultra Ethernet, which sits between applications and the network, is not radically different from that of traditional Ethernet, but it is significantly expanded to make Ethernet more programmable and usable for AI and HPC deployments. The key improvement is that the Software Layer now integrates Libfabric, an open-source network API with support for unordered operations that lets programs move data directly between memory and the network interface, bypassing the operating system kernel and offloading data movement from the CPU to improve performance.

In addition, the Software Layer supports OpenConfig, which standardizes network management, and the YANG data modeling language, which defines how configuration data is structured. In general, the software layer keeps Ethernet's core compatibility intact but adds programmability, cuts latency, and improves overall efficiency.

Managing Ultra Ethernet

As with traditional Ethernet, the evolution of Ultra Ethernet is governed by a structured standards process, in this case carried out by working groups within the Ultra Ethernet Consortium. Among them, the Compliance Working Group sets the official requirements and testing procedures to ensure that all Ultra Ethernet devices and software interoperate correctly and meet the required performance levels. The Management Working Group creates tools and models for configuring, monitoring, and controlling UE networks so operators can manage large fabrics efficiently. Finally, the Performance and Debug Working Group defines benchmarks and diagnostic tools to test, measure, and troubleshoot UE systems, ensuring high reliability and consistent performance.

A work in progress

While the UEC 1.0.1 specification defines the core architecture, transport, and link models, the technology is still in its infancy in terms of validation, adoption, and development. Most of its innovations, such as the Ultra Ethernet Transport protocol, congestion management, and packet trimming, among many others, are still being tested, refined, and integrated into prototype hardware and software.

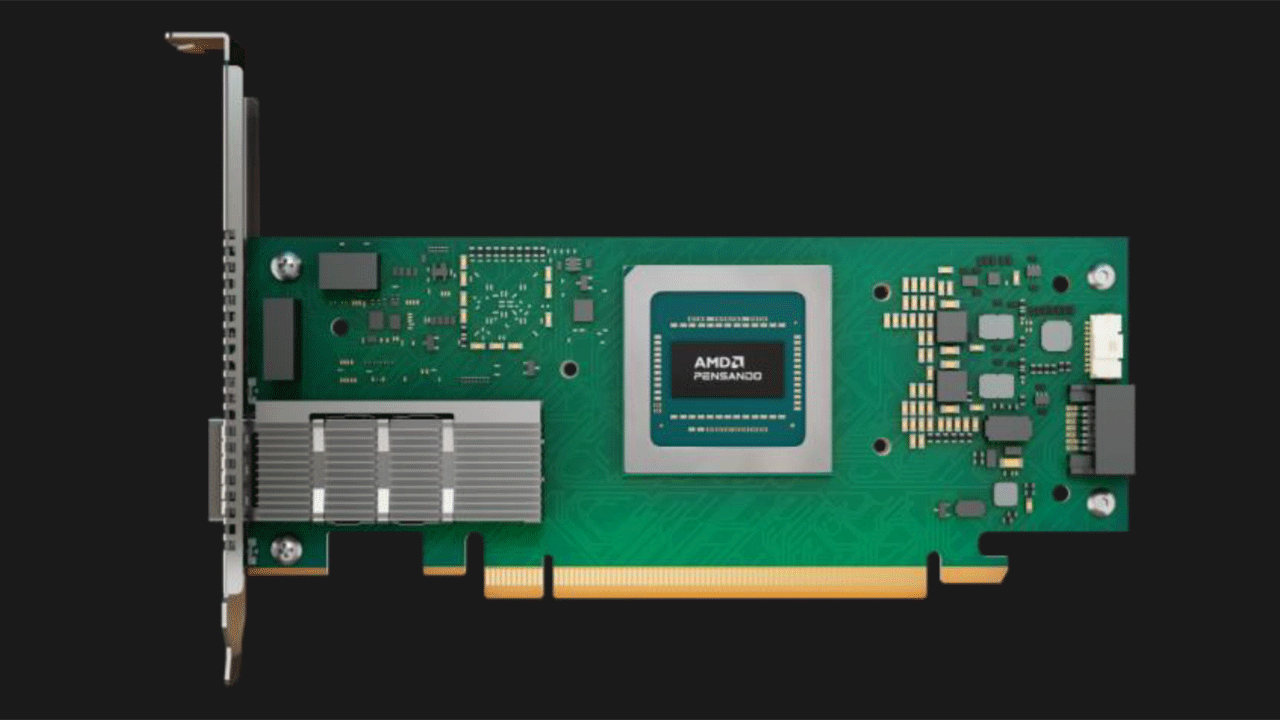

When it comes to hardware, some network cards are already said to be UEC-ready (rather than UEC 1.0-compliant), such as AMD's Pensando Pollara 400 AI NIC; the "ready" label implies that, for now, they do not support every feature of the specification. While the Pollara 400 supports features like Intelligent Packet Spray, Out-of-order Packet Handling, Selective Retransmission, and Path-Aware Congestion Control, AMD does not mention link-level features like Packet Trimming, Credit-Based Flow Control (CBFC), or advanced Link-Layer Retry (LLR) support. Then again, these are optional capabilities for now.

The consortium continues to develop future versions and extensions to the Ultra Ethernet specification, though only time will tell when these will be formally introduced.

Follow Tom's Hardware on Google News, or add us as a preferred source, to get our latest news, analysis, & reviews in your feeds.
