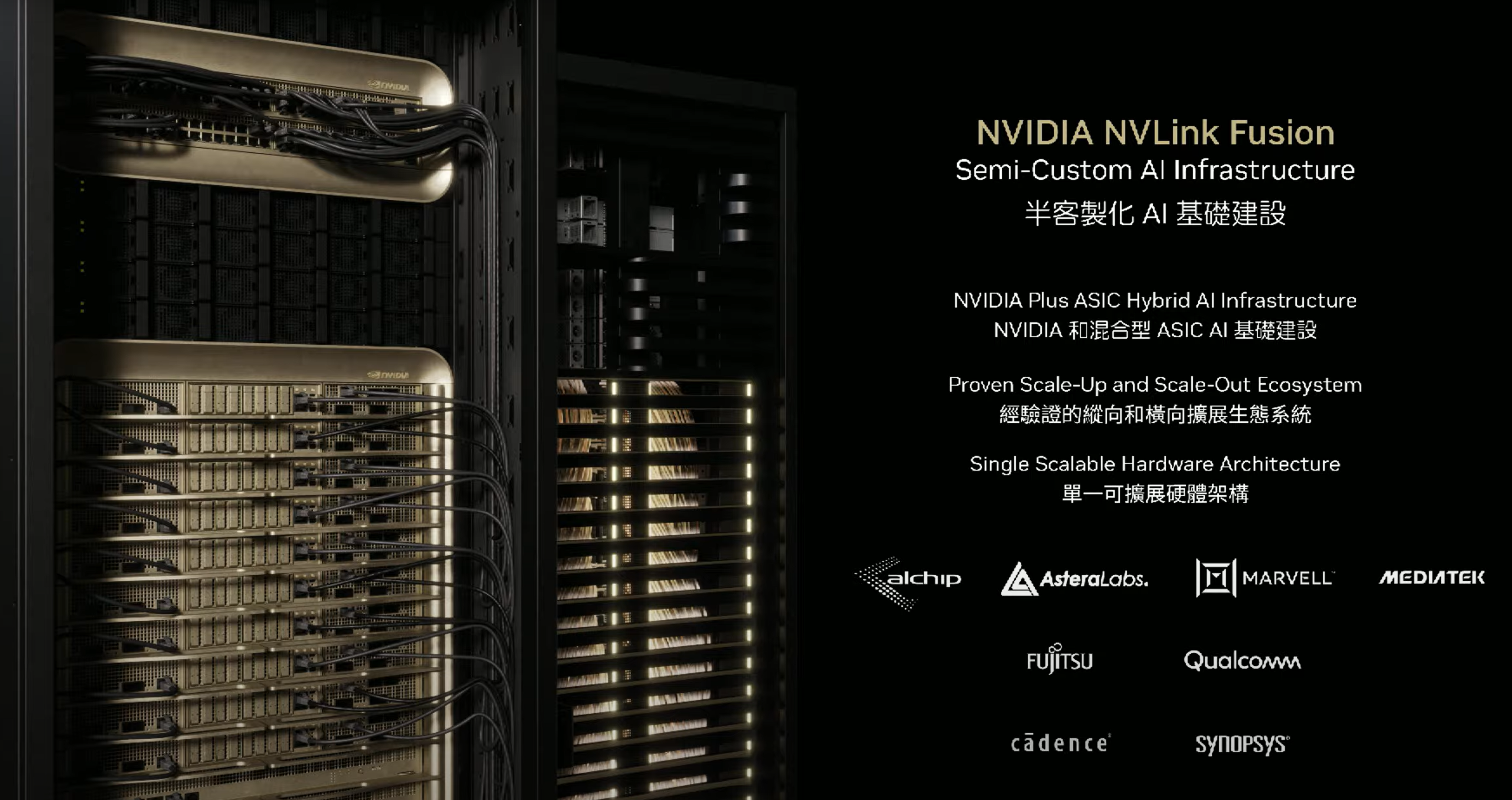

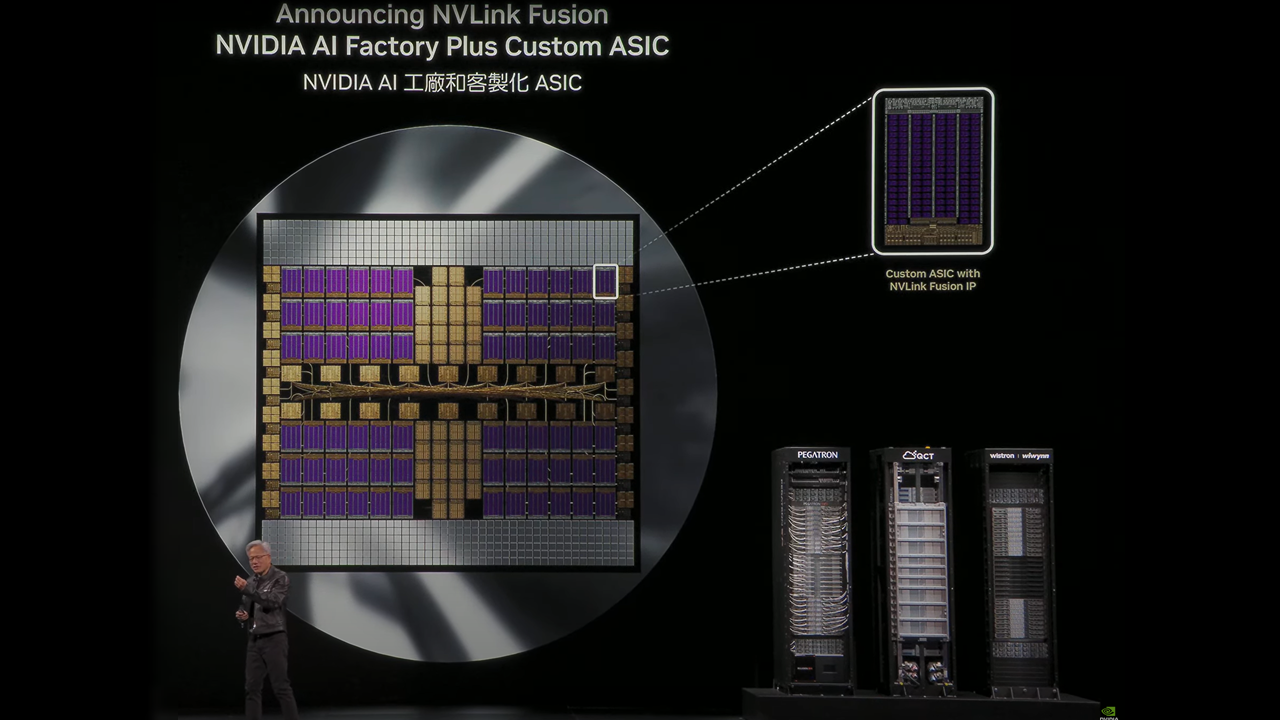

Nvidia made a slew of announcements at Computex 2025 here in Taipei, Taiwan, focused on its data center and enterprise AI initiatives, including its new NVLink Fusion program that allows customers and partners to use the company’s key NVLink technology for their own custom rack-scale designs. The program enables system architects to pair non-Nvidia CPUs or accelerators with Nvidia’s products in its rack-scale architectures, thus opening up new possibilities. Nvidia has amassed a number of partners for the initiative, including Qualcomm and Fujitsu, who will integrate the technology into their CPUs. NVLink Fusion will also extend to custom AI accelerators, so Nvidia has brought several silicon partners into the NVLink Fusion ecosystem, including Marvell and MediaTek, along with chip design software companies Synopsys and Cadence.

NVLink has served as one of the key technologies that assured Nvidia’s dominance in AI workloads, as communication speed between GPUs and CPUs in an AI server is one of the largest barriers to scalability, and thus to peak performance and power efficiency. Nvidia’s NVLink is a proprietary interconnect for direct GPU-to-GPU and CPU-to-GPU communication that delivers far more bandwidth and lower latency than the standard PCIe interface, up to a 14X bandwidth advantage, even though it leverages the tried-and-true PCIe electrical interface.
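As a rough check on the 14X figure, the arithmetic can be sketched as follows. The two bandwidth numbers are assumptions that do not appear in this article: 1.8 TB/s is the total per-GPU bandwidth Nvidia publishes for fifth-generation NVLink, and 128 GB/s is the commonly quoted bidirectional total for a PCIe 5.0 x16 slot. The function name is purely illustrative.

```python
# Assumed figures (not from the article): per-GPU total for fifth-generation
# NVLink and the bidirectional total for a PCIe 5.0 x16 link, both in GB/s.
NVLINK5_GB_PER_S = 1800.0
PCIE5_X16_GB_PER_S = 128.0


def bandwidth_ratio(link_gb_per_s: float, baseline_gb_per_s: float) -> float:
    """Return how many times more bandwidth the link offers than the baseline."""
    if baseline_gb_per_s <= 0:
        raise ValueError("baseline bandwidth must be positive")
    return link_gb_per_s / baseline_gb_per_s


ratio = bandwidth_ratio(NVLINK5_GB_PER_S, PCIE5_X16_GB_PER_S)
print(f"NVLink advantage over PCIe 5.0 x16: about {ratio:.1f}x")
```

Under those assumed inputs the ratio comes out at roughly 14, in line with the claimed advantage; older NVLink generations or a different PCIe baseline would give a different multiple.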

Nvidia has increased NVLink’s performance over the course of several product generations, but the addition of custom NVLink Switch silicon allowed the company to extend NVLink from within a single server node to rack-scale architectures that enable massive clusters of GPUs to chew through AI workloads in tandem. NVLink has thus served as a core advantage that Nvidia’s competitors, such as AMD and Broadcom, have failed to match.

However, NVLink is a proprietary interface, and aside from the company’s early work with IBM, Nvidia has largely kept this technology captive to products utilizing its own silicon. In 2022, Nvidia made its C2C (Chip-to-Chip) technology, an inter-die/inter-chip interconnect, available for other companies to use in their own silicon to facilitate communication with Nvidia GPUs by leveraging industry-standard Arm AMBA CHI and CXL protocols. The NVLink Fusion program is much broader in scope, addressing larger scale-up and scale-out applications in rack-scale architectures using NVLink connections.

NVLink Fusion changes that paradigm, allowing Fujitsu and Qualcomm to utilize the interface with their own CPUs, thus unlocking new options. The NVLink functionality is integrated into a chiplet located next to the compute package. Nvidia has also roped in custom silicon accelerators, like ASICs, from designers MediaTek, Marvell, and Alchip, enabling support for other types of custom AI accelerators to work in tandem with Nvidia’s Grace CPUs. Astera Labs has also joined the ecosystem, presumably to provide specialized NVLink Fusion interconnectivity silicon. Chipmaking software providers Cadence and Synopsys have also joined the initiative to provide a robust set of design tools and IP.

Qualcomm recently confirmed that it is bringing its own custom server CPU to market, and while details remain vague, the company's partnership with the NVLink ecosystem will allow its new CPUs to ride the wave of Nvidia's rapidly expanding AI ecosystem.

Fujitsu has also been working on bringing its mammoth 144-core Monaka CPUs, which feature 3D-stacked CPU cores over memory, to market. “Fujitsu’s next-generation processor, FUJITSU-MONAKA, is a 2-nanometer, Arm-based CPU aiming to achieve extreme power efficiency. Directly connecting our technologies to NVIDIA’s architecture marks a monumental step forward in our vision to drive the evolution of AI through world-leading computing technology — paving the way for a new class of scalable, sovereign and sustainable AI systems,” said Vivek Mahajan, CTO at Fujitsu.

Nvidia is also releasing new Nvidia Mission Control software to unify operations and orchestration, optimizing system-level validation and workload management, a key capability that speeds time to market.

Nvidia rivals Broadcom, AMD, and Intel are notably absent from the NVLink Fusion ecosystem. These companies, and many more, are members of the Ultra Accelerator Link (UALink) consortium, which aims to provide an open industry-standard interconnect to rival NVLink, thus democratizing rack-scale interconnect technologies.

Meanwhile, Nvidia’s partners are forging ahead with chip design services and products the company says are available now.
