Beyond selling its Vera data center CPUs as part of Vera Rubin NVL72 rack-scale systems, Nvidia has expressed ambitions to become a standalone data center CPU vendor, and a new partnership with hyperscale giant Meta represents a big step forward for that plan.

As part of a new multi-year strategic partnership announced today, Meta says it'll expand its use of Nvidia tech as it continues to build out hyperscale data centers optimized for its AI training and inference efforts. Those plans include “millions” of Blackwell and Rubin GPUs, part of a massive AI spending plan from Meta that could reach as much as $135 billion in total for 2026.


Nvidia data center honcho Ian Buck told The Register that Meta is seeing gains of up to 2x the performance per watt on certain workloads with the Grace platform, and that it's already test-driving Nvidia's next-gen Vera CPU with "very promising" results. The companies say that large-scale Vera-only deployments could begin as soon as 2027.

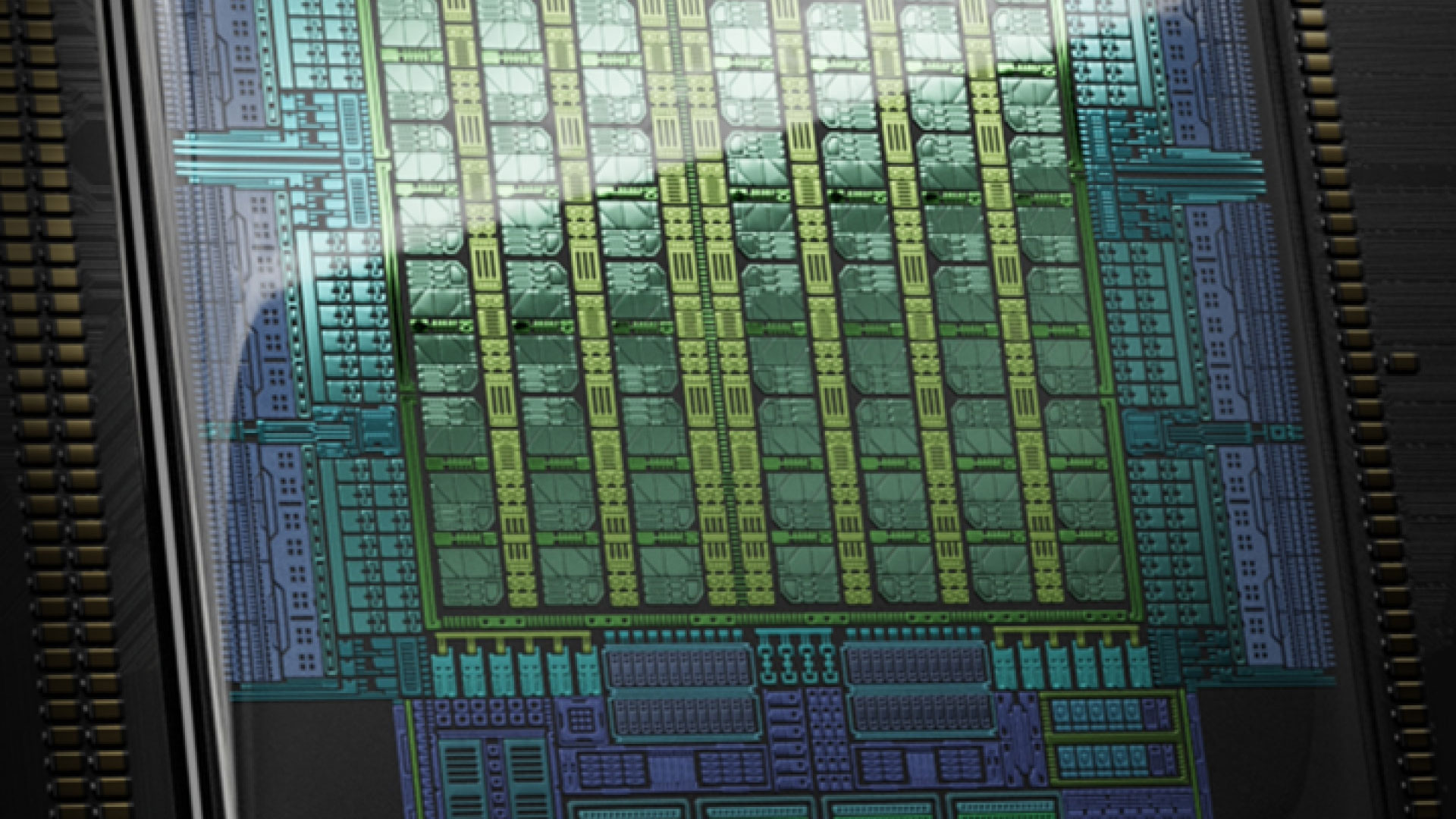

For reference, the Grace CPU sports 72 Arm Neoverse V2 cores and supports up to 480GB of LPDDR5X memory in its standalone C1 configuration. Nvidia also offers Grace as a "CPU Superchip" that joins two dies over the NVLink-C2C interconnect, for a total of 144 cores, up to 960GB of LPDDR5X, and up to 1024GB/s of aggregate memory bandwidth in certain memory configurations.

Vera, in turn, has 88 custom Arm cores with up to 176 threads, support for up to 1.5TB of LPDDR5X memory with up to 1.2TB/s of memory bandwidth, and PCIe Gen 6 and Compute Express Link 3.1 connectivity. Importantly, Vera is also the first Nvidia CPU to support a confidential (trusted) computing environment across the entirety of its rack-scale systems.

Beyond CPUs, Meta also says it'll deploy Nvidia Spectrum-X Ethernet switches throughout its data centers. As we learned at CES, Spectrum-X switches with co-packaged optics promise to increase performance per watt for scale-out applications by eliminating active cabling and its optical transceivers, which, together with the power drawn by the switch itself, can account for up to 10% of each rack's power consumption.

Power used for data movement is power that isn't feeding GPUs. With every rack now expected to deliver the absolute maximum compute density and efficiency, savings of that magnitude are a huge deal, so it's no surprise that Meta is hopping on board as it continues to expand its footprint.

Beyond hardware, Nvidia will offer its considerable in-house expertise in designing AI models to Meta’s engineers to help the company tune and boost the performance of its own core AI applications.

All of this goes to show that, as the AI revolution continues, Nvidia's reach extends so far beyond gaming graphics cards that people are likely using software powered or shaped by its models and accelerators, whether they realize it or not. That will only become more true as Meta expands its use of Nvidia's platforms and technology.

Follow Tom's Hardware on Google News, or add us as a preferred source, to get our latest news, analysis, & reviews in your feeds.
