"Non-profits, including legal non-profits, are incorporating AI in their services to better serve those who need it most," the spokesperson said. "However, every attorney has a legal and professional responsibility to ensure that court pleadings accurately cite real, applicable case law, not fake AI-generated ones. Like any new technology, users have a responsibility to use it ethically and responsibly. The integrity of the justice system is vital for the vulnerable populations we serve, and we are confident that the courts will continue to safeguard fairness and accuracy as new tools are introduced."

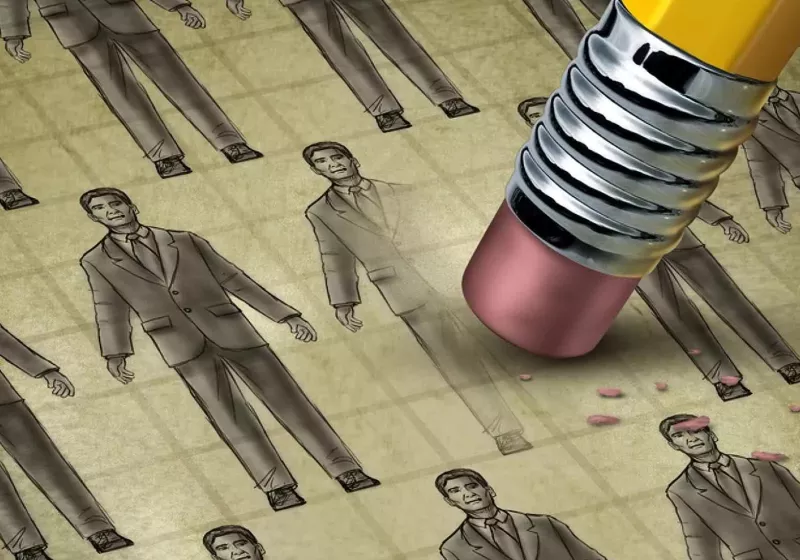

College students rely too much on ChatGPT

Barneck told ABC4 that it's common for law clerks to be unlicensed, but little explanation was given for why an unlicensed clerk's filing wouldn't be reviewed.

Kouris warned that "the legal profession must be cautious of AI due to its tendency to hallucinate information." As AI becomes increasingly common in the courtroom, the growing pains will likely also include law firms educating recent college graduates about AI's well-known flaws.

And it seems law firms may have their work cut out for them there.

College teachers recently told 404 Media that their students put too much trust in AI. According to one, Kate Conroy, even the "smartest kids insist that ChatGPT is good 'when used correctly,'" but they "can’t answer the question [when asked] 'How does one use it correctly then?'"

"My kids don’t think anymore," Conroy said. "They try to show me 'information' ChatGPT gave them. I ask them, 'How do you know this is true?' They move their phone closer to me for emphasis, exclaiming, 'Look, it says it right here!' They cannot understand what I am asking them. It breaks my heart for them and honestly it makes it hard to continue teaching."

Ars could not immediately reach Bednar for comment.
