
Last month, Samsung launched the Galaxy S25 series. During the keynote presentation in San Jose, it revealed that it was strengthening its AI disclosure compared with the S24 series by integrating the C2PA content authenticity standard. It did, but only on AI images, and that feels incomplete and backward.

“If you take a photo, use Generative Edit and save the photo, you’ll see text at the bottom that says ‘Contains AI-generated content AI tools used: Photo assist,’” a Samsung representative tells PetaPixel. “From there, you click the CR icon where it’ll store and show ‘edit history,’ which is the new component with the C2PA standard that we did not have on S24.”

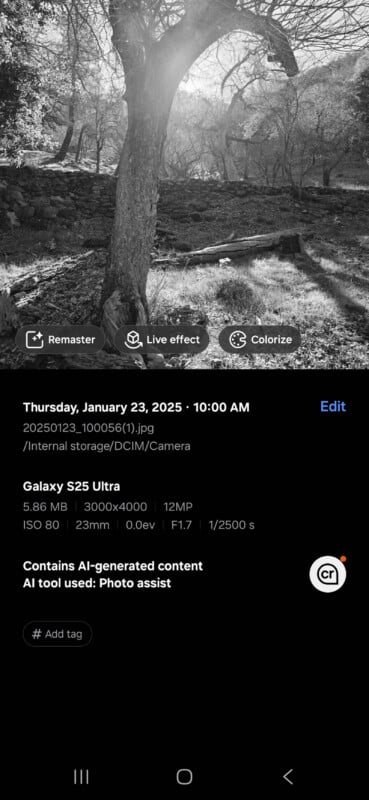

Content authenticity information displayed on a Samsung Galaxy S25 Ultra.

Unfortunately, Samsung isn’t embedding any C2PA data in photos that don’t use AI, even though verifying those photos was the original point of the system. Applying it only to AI images, and not to real photos, therefore feels backward.

This approach isn’t entirely Samsung’s fault, however. The Content Authenticity Initiative also bears some responsibility, since last year it shifted its marketing to overemphasize the tool’s ability to detect and track AI-generated images. There is an entire web page dedicated to convincing readers that the C2PA authenticity standard is perfect for identifying where an AI-generated image came from. While this is technically true, it leaves out the other half of the equation: verifying real images.

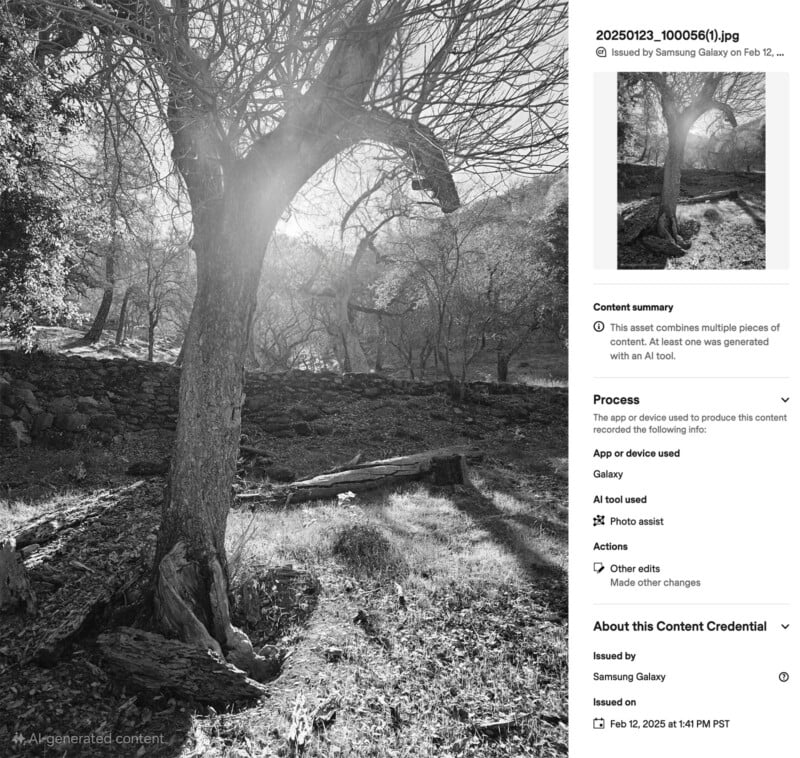

A photo edited with Samsung’s AI flare removal tool results in a watermark and the C2PA metadata tag.

“Content Credentials make the origin and history of content available for everyone to access, anytime. With this information at your fingertips, you have the ability to decide if you trust the content you see—understanding what it is and how much editing or manipulation it went through,” the Content Authenticity Initiative (CAI) writes.

But that only works when both AI-generated and real images are authenticated, in a system where C2PA is properly deployed. Without a complete, working system, adding C2PA metadata only to AI images doesn’t meaningfully help anyone.

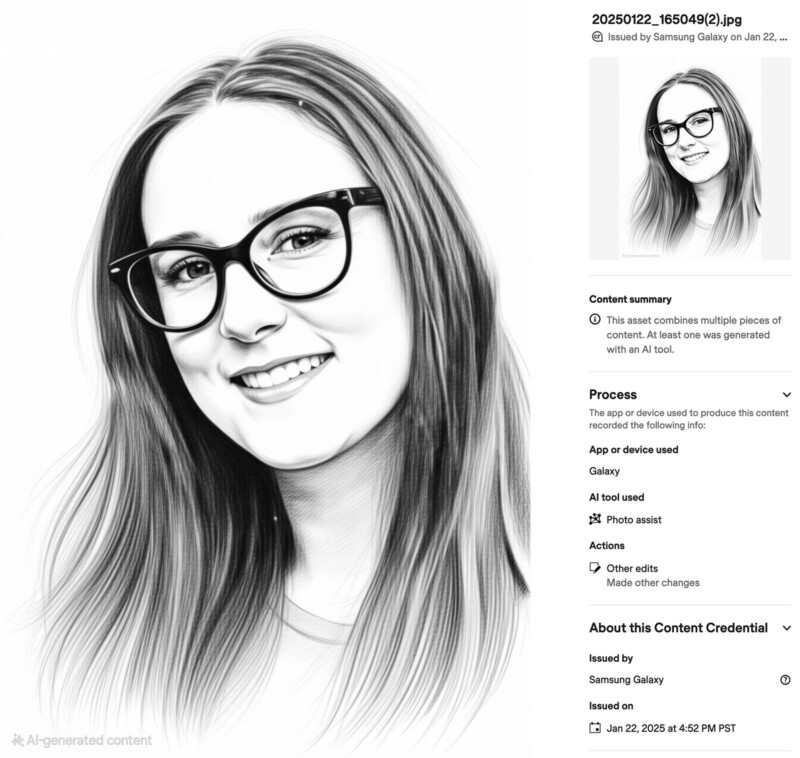

Samsung’s AI-powered “Sketch” tool results in a watermark and the C2PA metadata tag.

Samsung is doing its best here, at least within the guidance the CAI appears to be providing. First, it kept the watermark for AI-generated images that it introduced last year and made it significantly larger. Alongside that, it added the C2PA metadata tag to any image edited with AI.

The CAI and Samsung are responding to the public’s demand to know when an image they’re seeing isn’t real. But while that is what the public is saying, it’s not what it actually wants. What the public really wants to know is when a photo isn’t AI: when it’s real.

An unedited photo taken on the Galaxy S25 Ultra has no C2PA metadata.

It is remarkably difficult to prove a photo is fake or was edited in some way, but it’s very easy to prove a photo is real and unchanged if it carries authenticated provenance data from the moment of capture.

Proving a photo is real and unedited was also the original pitch of the CAI and C2PA. If all real images carry this metadata tag, there is no need to prove an image is AI-generated, because it simply won’t have a tag. This approach also answers the very, very common question, “Why can’t I just remove the C2PA metadata tag?”

In the ideal CAI world, if an image doesn’t have the C2PA tag, then it shouldn’t be trusted. That covers every AI-generated image as well as any photo whose tag has been removed. Suddenly, stripping the tag doesn’t matter.

Unfortunately, everyone involved here is approaching the problem from the other side, which is much, much more difficult. If real photos have no C2PA tag and AI-generated ones do, bad actors have an incentive to remove the watermark and the C2PA metadata tag so that an AI image passes as real. The whole system then falls apart.
