
While Adobe’s Firefly text-to-video generator leaves a lot to be desired, a secondary application of the technology is currently in the beta version of Premiere Pro. Called Generative Extend, it allows video editors to extend a clip by up to two seconds and does a surprisingly good job at it.

Just like the Firefly text-to-video generator, this new tool is only available in beta. But unlike Firefly, Adobe isn’t charging to use the tool and it doesn’t consume any “generative credits” to try out. Editors who want to try the tool can download Premiere Pro (beta) from the Creative Cloud app starting today.

The tool is limited, but the usefulness of what Adobe is building is already on display. For now, it works only on 1080p Full HD video in select color spaces, and log footage is not compatible. It also maxes out at two seconds of additional footage. Adobe will likely expand the tool’s use cases in the future, however.

Generative Extend answers the question, “What if my clip is just barely not long enough for the coverage I need?” This is not an uncommon situation in video editing. Often, a clip cuts off too quickly for an editor to build a transition, or a bit of b-roll isn’t quite long enough to cover a-roll that has been chopped together to remove pauses. In these cases, two seconds is usually enough to skate by.

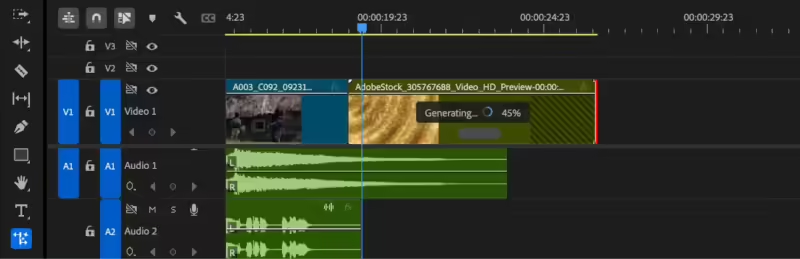

Generative Extend will create additional frames and insert them into your sequence. | Adobe

I tested four different situations that ask Premiere Pro to address varying problems: a panning scene, a walking scene, a walking scene with tilt, and a shot of a human face. In my mind, these have varying levels of difficulty, with the last option being the most challenging.

Starting with a simple panning scene, two seconds were added to the end of the clip:

I can definitely tell when the AI kicks in on this clip, as the perceived resolution dips dramatically and the scene starts to “jitter” a bit, which is a common problem with AI-generated footage. This scene is pretty busy, so perhaps it is more difficult for Firefly than it seems to me as the editor.

The next scene is a simple outdoor walking scene, and again two seconds were added to the end of the clip.

This is more impressive. If I weren’t looking for that little dip in resolution, I might have missed it. I think this is good enough to provide coverage for an edit, and I would be satisfied with this result. Yes, you can see the footage “jitter” a bit, but it might help that the scene has a slower shutter speed and a lot of motion already, which hides the transition to AI pretty well.

I tried another walking scene, this one shot in low light (which pushes the camera’s sensor a bit) and with a tilt motion.

You can, again, see the transition to AI generation pretty distinctly. The perceived resolution falls off and everything in the scene takes on the aforementioned “jitter” that is so indicative of AI-generated video. I was hoping for better here, but I could be convinced to ship this if I were in a real bind on the edit.

The final scene is the one I thought would be the most difficult: me, talking to the camera. Instead of adding to the end of the clip as I had for the previous three examples, I added two seconds to the beginning. This is, again, a normal use case that I have personally come up against in the past.

Given my experience with Firefly, I assumed that it would not be able to handle a real human face very well, let alone the transition that would occur between the AI-generated segment and the real video clip. That’s why I was so surprised that the resolution of the video matched very well and, other than the creepy mouth movements, it looks almost legitimate. It is very close.

I don’t even hold the mouth movements against Premiere Pro’s AI generation since the clip starts with my mouth moving; it doesn’t really have anything else to go on. This was, in my opinion, the most impressive showing.

While I am less than impressed with Adobe’s pure generative AI, this beta version of Generative Extend is very promising and addresses an actual need that artists have, which is more than I can say about some of Adobe’s other AI endeavors. There are improvements to be made, for sure, but for a free beta, this is a very good start.
