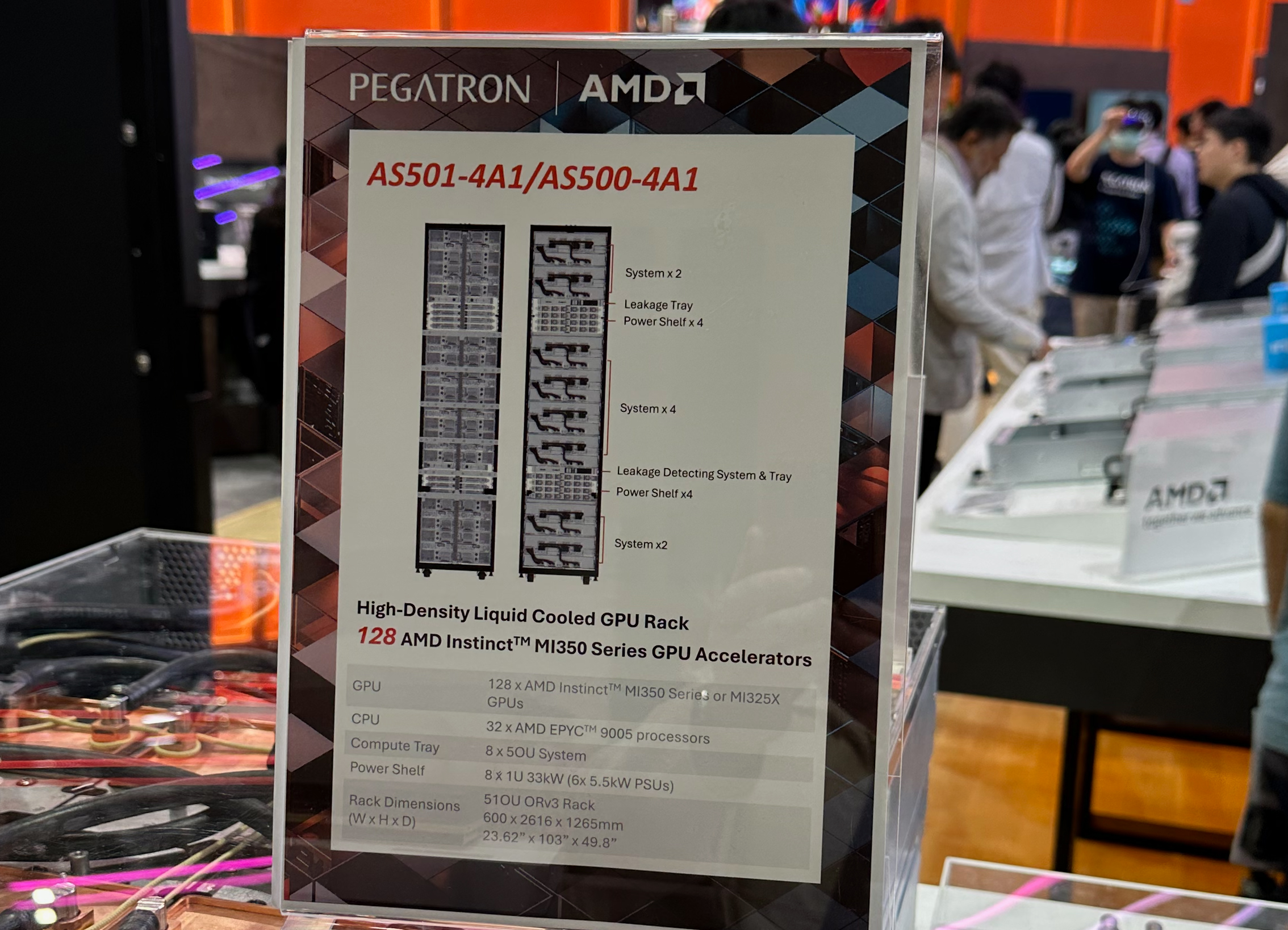

At Computex, Pegatron showcased a unique rack-scale solution based on 128 of AMD's next-generation Instinct MI350X accelerators, designed for performance-demanding AI inference and training applications. The system precedes AMD's in-house rack-scale solutions by a generation, so for Pegatron it will serve as a training vehicle for building the rack-scale AMD Instinct MI450X-based IF64 and IF128 solutions that are about a year away.

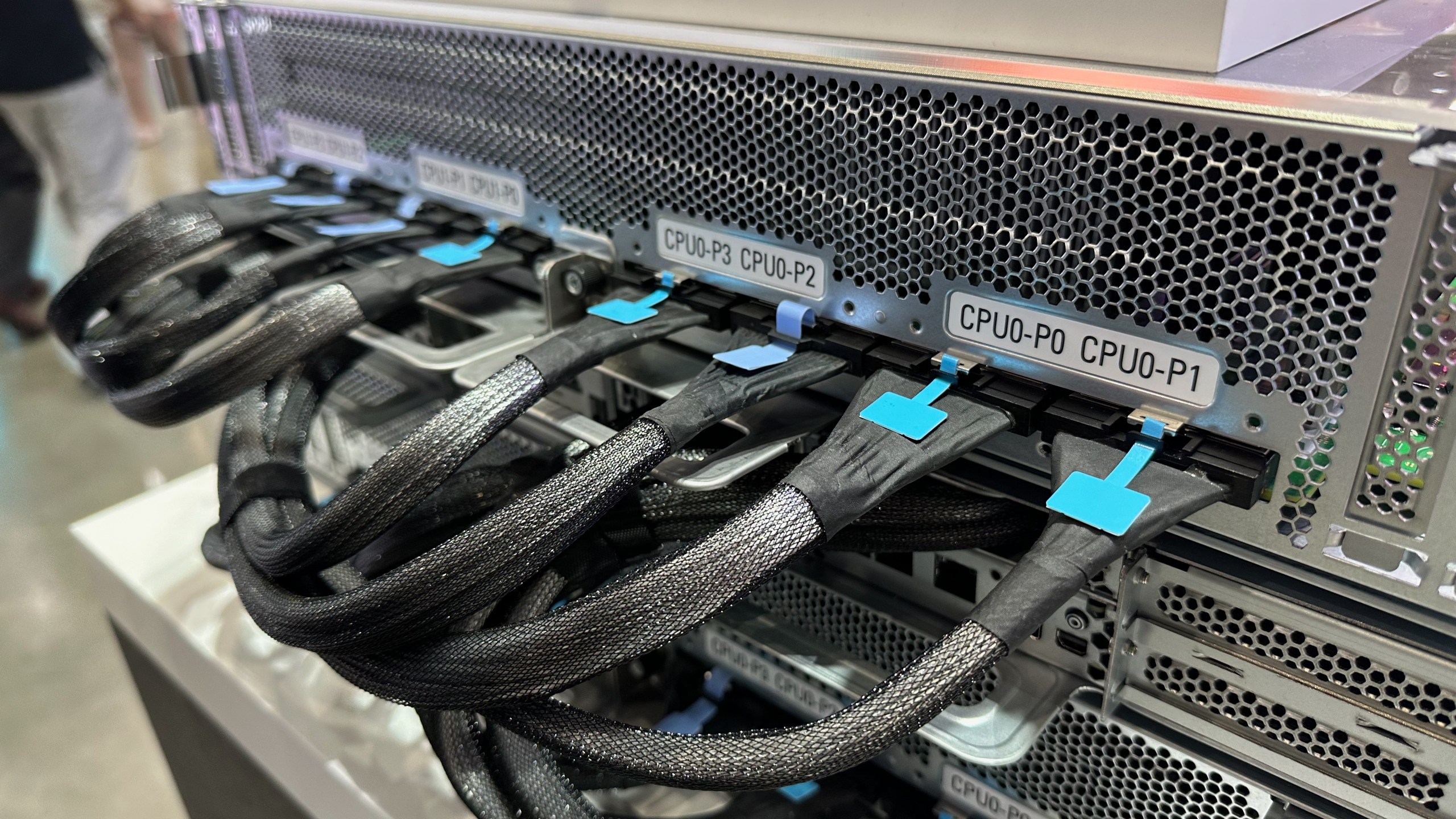

The Pegatron AS501-4A1/AS500-4A1 rack-scale system relies on eight 5U compute trays, each packing one AMD EPYC 9005-series processor and four AMD Instinct MI350X accelerators for AI and HPC. Both the CPU and the accelerators are liquid-cooled to ensure maximum and predictable performance under heavy loads. The machine comes in a 51OU ORV3 form factor, making it suitable for cloud datacenters built around OCP standards (read: Meta).

The machine connects GPUs located in different chassis using 400 GbE, as AMD does not have proprietary switches for Infinity Fabric connections (in any case, the maximum scale-up world size of AMD's Instinct is eight processors today). This contrasts with Nvidia's GB200/GB300 NVL72 platform, which interconnects 72 GPUs using the company's ultra-fast NVLink. As a result, the Instinct MI350X system is unlikely to match the GB200/GB300 NVL72 in terms of scalability.
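To make the scale-up limit concrete, the Python sketch below models a 128-GPU cluster as contiguous scale-up domains of eight GPUs (the upper bound cited above) and counts how many GPU pairs can communicate over the scale-up fabric versus how many must cross the 400 GbE scale-out network. The contiguous rank grouping and the `link_type`/`pair_breakdown` helpers are hypothetical simplifications for illustration, not Pegatron's or AMD's actual topology.

```python
from itertools import combinations

def link_type(rank_a: int, rank_b: int, domain_size: int) -> str:
    """Classify the link a GPU pair would use in this simplified model."""
    # Hypothetical assumption: ranks are packed into contiguous scale-up domains,
    # so rank // domain_size gives the domain index.
    if rank_a // domain_size == rank_b // domain_size:
        return "scale-up"    # e.g. Infinity Fabric inside an MI350X domain
    return "scale-out"       # e.g. 400 GbE between domains

def pair_breakdown(num_gpus: int, domain_size: int) -> tuple[int, int]:
    """Return (scale-up pairs, scale-out pairs) over all unordered GPU pairs."""
    scale_up = scale_out = 0
    for a, b in combinations(range(num_gpus), 2):
        if link_type(a, b, domain_size) == "scale-up":
            scale_up += 1
        else:
            scale_out += 1
    return scale_up, scale_out

up, out = pair_breakdown(128, 8)
print(up, out)  # 448 7680
print(f"{up / (up + out):.1%} of pairs stay on the scale-up fabric")  # 5.5% of pairs stay on the scale-up fabric
```

For comparison, `pair_breakdown(72, 72)` models an NVL72-style single 72-GPU NVLink domain, where all 2,556 pairs stay on the scale-up fabric and none need Ethernet.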

The new machine will be used by OCP adopters both for their immediate workloads and for learning how to better build AMD Instinct-based systems with large numbers of GPUs, from both hardware and software points of view. The importance of Pegatron's machine is hard to overestimate for multiple reasons, the main one being that it sets the stage to challenge Nvidia's dominance in rack-scale AI solutions.

Given what we know about AMD's Instinct MI350X, Pegatron's 128-GPU rack-scale system offers a theoretical peak of up to 1,177 PFLOPS of FP4 compute for inference, assuming near-linear scaling. With each MI350X carrying 288GB of HBM3E, the system delivers 36.8TB of high-speed memory, enabling support for massive AI models that exceed the memory capacity of Nvidia's current Blackwell-based GPUs.
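The headline numbers come down to simple multiplication. Below is a minimal Python sketch, assuming a per-GPU FP4 peak of 9.2 PFLOPS (the value implied by dividing the 1,177 PFLOPS total by 128 GPUs) and the 288GB of HBM3E per GPU quoted above. The `rack_totals` helper and its `scaling_efficiency` parameter are hypothetical; a value of 1.0 represents the perfectly linear scaling that a theoretical peak assumes.

```python
def rack_totals(num_gpus: int, pflops_per_gpu: float, hbm_gb_per_gpu: float,
                scaling_efficiency: float = 1.0) -> tuple[float, float]:
    """Return (aggregate FP4 PFLOPS, aggregate HBM in decimal TB)."""
    # scaling_efficiency = 1.0 models perfectly linear scaling (a theoretical peak).
    peak_pflops = num_gpus * pflops_per_gpu * scaling_efficiency
    hbm_tb = num_gpus * hbm_gb_per_gpu / 1000  # 1 TB = 1,000 GB
    return peak_pflops, hbm_tb

# Assumed per-GPU inputs: 9.2 PFLOPS FP4 (implied by 1,177 / 128) and 288 GB HBM3E.
peak, hbm = rack_totals(128, 9.2, 288)
print(f"{peak:,.1f} PFLOPS FP4, {hbm:.3f} TB HBM3E")  # 1,177.6 PFLOPS FP4, 36.864 TB HBM3E
```

The 1,177 PFLOPS and 36.8TB figures are these results rounded down. Any real-world scaling efficiency below 1.0 lowers the compute figure but leaves the memory total unchanged.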

However, its reliance on Ethernet for GPU-to-GPU communication limits the system's scalability. With a maximum scale-up domain of eight GPUs, the system is probably built for inference workloads or multi-instance training rather than tightly synchronized LLM training, where Nvidia's NVL72 systems excel. Still, it serves as a high-performance, memory-rich solution today and a precursor to AMD's next-generation Instinct MI400-series solutions.

Follow Tom's Hardware on Google News to get our up-to-date news, analysis, and reviews in your feeds. Make sure to click the Follow button.
