Nvidia has announced its new Rubin CPX GPU today, a "purpose-built GPU designed to meet the demands of long-context AI workloads." The Rubin CPX GPU, not to be confused with a plain Rubin GPU, is an AI accelerator/GPU focused on maximizing the inference performance of the upcoming Vera Rubin NVL144 CPX rack.

As AI workloads evolve, the computing architectures that power them are changing in step. Nvidia's new strategy for boosting inference, which it calls disaggregated inference, relies on multiple distinct types of GPUs working in tandem to reach peak performance. Compute-focused GPUs will handle what Nvidia calls the "context phase," while different chips focused on memory bandwidth will handle the throughput-intensive "generation phase."

The company explains that cutting-edge AI workloads involving multi-step reasoning and persistent memory, such as AI video generation or agentic AI, benefit from having huge amounts of context information available. Inference for these large models, rather than training them, has become the new frontier for AI hardware development.

To this end, the Rubin CPX GPU is designed to be a workhorse for the compute-intensive context phase of disaggregated inference (more on that below), while the standard Rubin GPU handles the more memory-bandwidth-limited generation phase.

Rubin CPX is good for 30 petaFLOPs of raw compute performance on the company's new NVFP4 data type, and it has 128 GB of GDDR7 memory. For reference, the standard Rubin GPU will be able to reach 50 PFLOPs of FP4 compute and is paired with 288 GB of HBM4 memory.

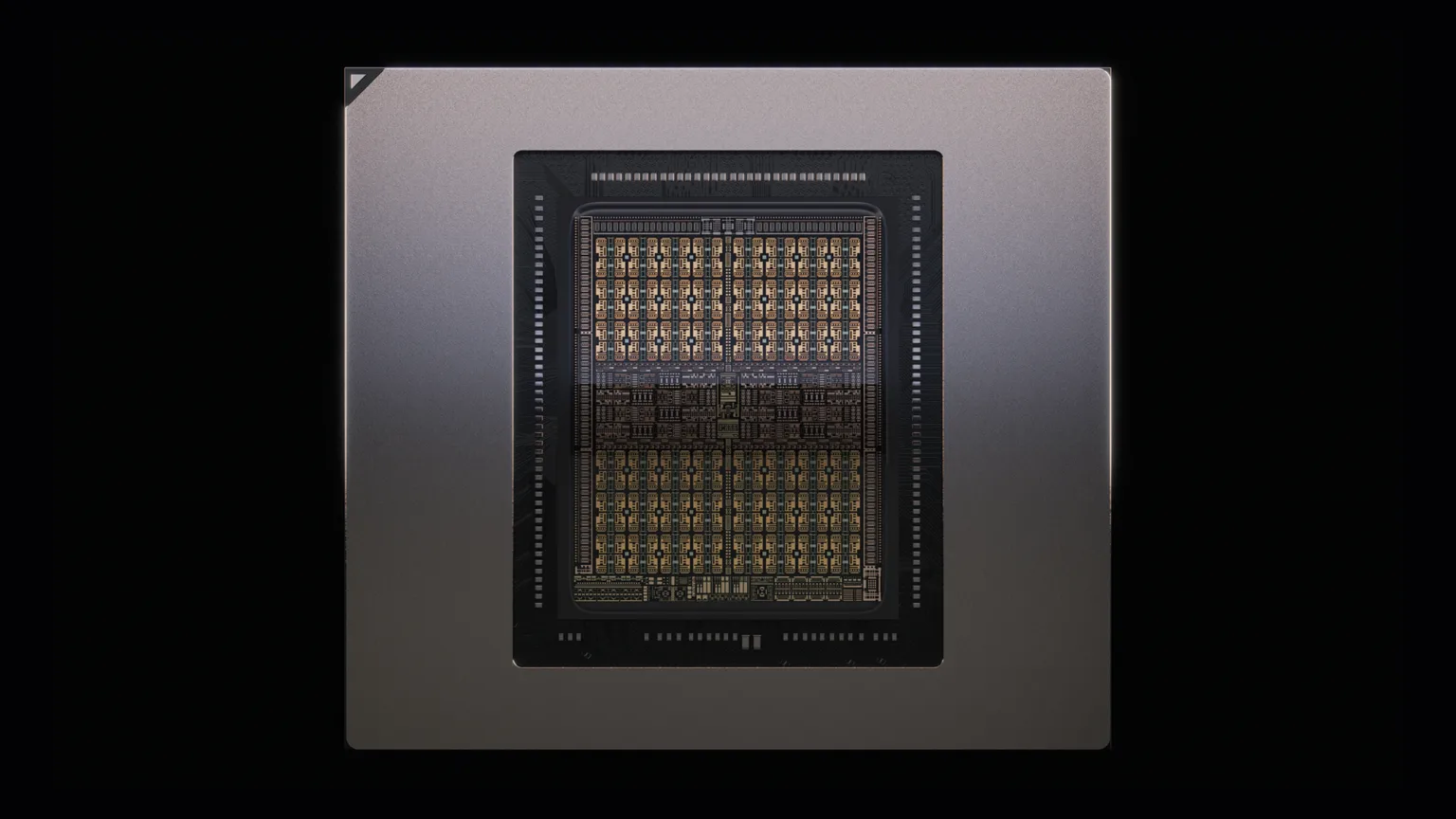

Early renders of the Rubin CPX GPU, such as the one above, appear to feature a single-die GPU design. The Rubin GPU will be a dual-die chiplet design, and as pointed out by ComputerBase, one-half of a standard Rubin would output 25 PFLOPs FP4; this leads some to speculate that Rubin CPX is a single, hyper-optimized slice of a full-fat Rubin GPU.

The choice to equip Rubin CPX with GDDR7 rather than HBM4 is also one of optimization. As mentioned, disaggregated inference workflows split the inference process between Rubin and Rubin CPX GPUs. Once the compute-optimized Rubin CPX has built the context for a task, a job for which GDDR7's performance is sufficient, it hands off to a Rubin GPU for the generation phase, which benefits from high-bandwidth memory.
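To make the hand-off concrete, here is a toy Python sketch of the two-phase split. It is not Nvidia's software: the names `ContextWorker`, `GenerationWorker`, and `KVCache` are hypothetical, and the "model" is a trivial arithmetic stand-in. We also assume, as is common in transformer serving, that the context passed between phases takes the form of a key-value (KV) cache.

```python
from dataclasses import dataclass, field


@dataclass
class KVCache:
    """Hypothetical stand-in for the context built in the context phase."""
    entries: list = field(default_factory=list)  # (position, token) pairs


class ContextWorker:
    """Hypothetical compute-optimized worker: the role Rubin CPX plays."""

    def build_context(self, prompt_tokens: list[int]) -> KVCache:
        # A real accelerator processes the whole prompt in large, parallel
        # matrix multiplies (compute-bound). This toy just records each token.
        return KVCache(entries=list(enumerate(prompt_tokens)))


class GenerationWorker:
    """Hypothetical bandwidth-optimized worker: the role standard Rubin plays."""

    def generate(self, cache: KVCache, max_new_tokens: int) -> list[int]:
        output = []
        for _ in range(max_new_tokens):
            # Every new token reads the entire cache, which is why this
            # phase is limited by memory bandwidth rather than compute.
            next_token = sum(tok for _, tok in cache.entries) % 1000  # toy "model"
            cache.entries.append((len(cache.entries), next_token))
            output.append(next_token)
        return output


def disaggregated_inference(prompt_tokens: list[int], max_new_tokens: int) -> list[int]:
    cache = ContextWorker().build_context(prompt_tokens)       # context phase
    return GenerationWorker().generate(cache, max_new_tokens)  # hand-off, then generation phase


print(disaggregated_inference([1, 2, 3], 2))  # [6, 12]
```

The point of the split is that the two phases stress different resources, so each can run on hardware sized for it. That is the rationale Nvidia gives for pairing GDDR7-equipped Rubin CPX with HBM4-equipped Rubin.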


Rubin CPX will be available inside Nvidia's Vera Rubin NVL144 CPX rack, arriving alongside Vera Rubin in 2026. The rack, which will contain 144 Rubin GPUs, 144 Rubin CPX GPUs, 36 Vera CPUs, 100 TB of high-speed memory, and 1.7 PB/s of memory bandwidth, is slated to deliver 8 exaFLOPs of NVFP4 compute. That is 7.5x the performance of the current-gen GB300 NVL72, and well ahead of the 3.6 exaFLOPs of the base Vera Rubin NVL144 without CPX.
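The quoted figures can be sanity-checked with simple arithmetic using only numbers from this article. The sketch below assumes the 8-exaFLOP total is simply the base NVL144's 3.6 exaFLOPs plus 144 Rubin CPX GPUs at 30 petaFLOPs each; Nvidia has not published its exact accounting, and the variable names are ours.

```python
import math

# Figures quoted in the article, NVFP4 throughput.
RUBIN_PFLOPS = 50.0       # standard dual-die Rubin GPU
RUBIN_CPX_PFLOPS = 30.0   # Rubin CPX GPU
CPX_GPUS_PER_RACK = 144
NVL144_BASE_EFLOPS = 3.6  # Vera Rubin NVL144 without CPX
NVL144_CPX_EFLOPS = 8.0   # Vera Rubin NVL144 CPX (Nvidia's figure)
GB300_SPEEDUP = 7.5       # claimed gain over GB300 NVL72

# One die of a dual-die Rubin, per ComputerBase's reasoning.
half_rubin_pflops = RUBIN_PFLOPS / 2

# Assumption: the CPX rack total is the base rack plus the CPX GPUs' compute.
cpx_added_eflops = CPX_GPUS_PER_RACK * RUBIN_CPX_PFLOPS / 1000  # about 4.32 EF
estimated_total_eflops = NVL144_BASE_EFLOPS + cpx_added_eflops  # about 7.92 EF

# GB300 NVL72 throughput implied by the 7.5x claim, about 1.07 EF.
implied_gb300_eflops = NVL144_CPX_EFLOPS / GB300_SPEEDUP

# The estimate rounds to Nvidia's 8-exaFLOP figure.
assert math.isclose(round(estimated_total_eflops), NVL144_CPX_EFLOPS)

print(f"Half a Rubin: {half_rubin_pflops:.0f} PF vs Rubin CPX: {RUBIN_CPX_PFLOPS:.0f} PF")
print(f"Estimated rack total: {estimated_total_eflops:.2f} EF")
print(f"Implied GB300 NVL72: {implied_gb300_eflops:.2f} EF")
```

Under that assumption, most of the jump from 3.6 to 8 exaFLOPs comes from the CPX GPUs themselves, and each Rubin CPX delivers more than the 25 PFLOPs of a single standard Rubin die.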

Nvidia claims that $100 million spent on AI systems with Rubin CPX could translate to $5 billion in revenue. For more on what we know about the upcoming Vera Rubin AI platform, see our premium coverage of Nvidia's roadmap. We expect to see Rubin, Rubin CPX, and Vera Rubin together in person at Nvidia's GTC 2026 presentation this March.
