Nvidia RTX Blackwell cards aren't just for gaming. Hopefully, that's no surprise, and that sort of statement has been true about every Nvidia GPU architecture dating back to the original Quadro cards in 2000. The professional and content creator features present in Nvidia GPUs have increased significantly, targeting video and photo editing, 3D rendering, audio, and more. As with so many other features of the Blackwell architecture, many of the latest developments will be powered by AI.

We have a whole suite of Nvidia presentations to get through today, including neural rendering and DLSS 4, the Blackwell RTX architecture, Founders Edition 50-series cards, generative AI for games and RTX AI PCs, and a final session on how to benchmark Blackwell "properly." We've got the full slide deck from each session from Nvidia's Editors' Day on January 8, so check those out for additional details.

AI and machine learning have caused a massive paradigm shift in the way we use a lot of applications, particularly those that deal with content creation. I remember the earlier days of Photoshop, where content-aware fill often resulted in a blob of garbage. Now, it's become an indispensable part of my photo editing workflow, doing in seconds what used to require minutes or more. Similar improvements are coming to video editing, 3D rendering, and game development.

For AI image generation, one of the newer models is Flux. By default, it basically requires a 24GB graphics card to run properly, though there are quantized models that aim to fit all of that into a smaller amount of VRAM. But with native FP4 support in Blackwell, not only do the quantized models run in 10GB of VRAM, but they also run substantially faster. It's not all apples to apples, but Flux.dev in FP16 mode required 15 seconds to create an image, compared to just five seconds when using FP4 mode. (Wait, what happened to FP8 mode on the RTX 4090? Yeah, there's marketing fluff happening...)
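To see why precision matters so much for VRAM, here's a rough back-of-the-envelope sketch. It assumes a model with about 12 billion parameters, which is the figure Black Forest Labs has published for FLUX.1 [dev] and not something Nvidia's slides state. It also counts only the weights; text encoders, the VAE, and working memory come on top, which is presumably part of why the quoted FP4 footprint is 10GB rather than the bare minimum.

```python
# Rough weight-memory estimate for a model at different numeric precisions.
# Assumption (not from the article): roughly 12 billion parameters, the
# figure published for FLUX.1 [dev]. Weights only; activations, text
# encoders, and the VAE are not included.
PARAMS = 12e9

BITS_PER_WEIGHT = {"FP16": 16, "FP8": 8, "FP4": 4}


def weight_gigabytes(params: float, bits: int) -> float:
    """Return the storage needed for the weights alone, in GB (10^9 bytes)."""
    return params * bits / 8 / 1e9


for name, bits in BITS_PER_WEIGHT.items():
    print(f"{name}: {weight_gigabytes(PARAMS, bits):.0f} GB of weights")

# Speedup implied by the timings quoted above (15 s in FP16, 5 s in FP4).
speedup = 15 / 5
print(f"FP16 -> FP4 speedup: {speedup:.1f}x")
```

Under that assumption, FP16 weights alone already fill a 24GB card, while FP4 cuts the weights to a quarter of that size.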

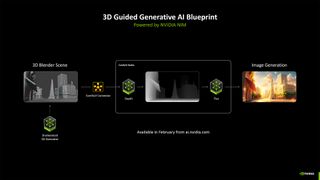

Another cool demo Nvidia showed was image generation guided by a 3D scene. Using Blender, you could mock up a rough 3D environment and then provide a text prompt. The generative AI would then combine the scene's depth map with Flux to spit out an image. It still had some of the usual issues, but the ability to place 3D models as hints for the AI model often resulted in images that were far closer to the desired result. There was a live blueprint demo shown at CES that should be publicly available next month.

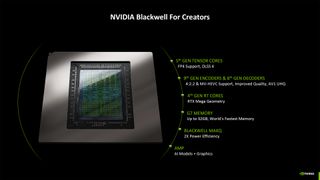

Nvidia has also added native 4:2:2 video codec support to Blackwell's video engine. Many videos use 4:2:0, which stores color at a quarter of the brightness resolution and has known quality issues, but many modestly priced cameras now support raw 4:2:2. The problem is that editing 4:2:2 can be very CPU-intensive. Blackwell aims to fix that by handling the format on the GPU, and a sample workflow that took 110 minutes to encode on a Core i9-14900K dropped to just 10 minutes with a Blackwell GPU.
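For a sense of what the subsampling numbers mean in practice, here's a small sketch of the uncompressed data per frame under each scheme. The 4K resolution and 10-bit depth are illustrative assumptions, not figures from Nvidia's presentation, and `frame_bytes` is just a helper name made up for this example.

```python
# Samples stored per pixel for common chroma subsampling schemes.
# Luma (Y) is always full resolution; the two chroma planes (Cb, Cr)
# are stored at the fraction of luma resolution listed here.
CHROMA_FRACTION = {
    "4:4:4": 1.0,   # chroma at full resolution
    "4:2:2": 0.5,   # chroma halved horizontally
    "4:2:0": 0.25,  # chroma halved horizontally and vertically
}


def frame_bytes(width: int, height: int, scheme: str, bit_depth: int = 10) -> float:
    """Uncompressed size of one frame in bytes for the given scheme."""
    samples_per_pixel = 1 + 2 * CHROMA_FRACTION[scheme]
    return width * height * samples_per_pixel * bit_depth / 8


# Assumed example: a 3840x2160 frame at 10 bits per sample.
for scheme in CHROMA_FRACTION:
    megabytes = frame_bytes(3840, 2160, scheme) / 1e6
    print(f"{scheme}: {megabytes:.1f} MB per frame")

# Speedup implied by the sample workflow quoted above (110 min vs. 10 min).
print(f"Encode speedup: {110 / 10:.0f}x")
```

4:2:2 works out to two samples per pixel versus 1.5 for 4:2:0, so there's a third more raw data to process per frame before any codec-specific overhead.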

Blackwell's video engine also improves AV1 and HEVC quality, with a new AV1 UHQ mode. And the RTX 5090 will come with three NVENC blocks, compared to two on the 4090 and just one on the 30-series and earlier GPUs. Support in popular video editing applications, including Adobe Premiere and DaVinci Resolve, will start arriving in February.

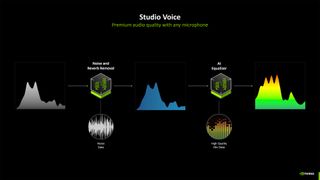

Something I'm more interested in seeing is the updated Nvidia Broadcast software, which adds new Studio Voice and Virtual Key Light filters. The former should help lower-tier microphones sound better, while the latter aims to improve contrast and brightness. But these aren't the only changes for streamers.

Nvidia demonstrated a new ACE-powered AI sidekick. It went about as well as the ACE game demos, though, and feels like something more for beginners rather than quality streamers. But you can give it a shot when it becomes available, and I'll freely admit that it's not something really targeted at the sort of work I do.

Other new features include faster ray tracing hardware for 3D rendering, with Blender running 1.4X faster on a 5090 than a 4090. (Much of that comes courtesy of having roughly 33% more processing cores, of course: 21,760 versus 16,384.) Applications like D5 Render saw even larger gains, but that's thanks to DLSS 4. Still, boosting the speed of viewport updates can really help with 3D rendering applications, and that seems to be a great use of AI denoising and upscaling.

One final item Nvidia showed was the ability to create 3D models using SPAR3D, which can take images and make a 3D model in seconds. It's already available from Hugging Face, and there will be a new NIM (Nvidia Inference Microservice) soon.
