Nvidia kicked off its CES 2025 keynote with a rehash of the company's history, from the NV1 and arcade machines to its current status as an AI powerhouse. The goal is to apply machine learning to every application possible, all powered by Nvidia GPUs, the house that GeForce built. And that's what we're really here to see: the next generation of GeForce hardware, powered by the Blackwell architecture.

Jumping right into the heart of the matter, Nvidia kicked off the GPU announcements with the RTX 5070 at $549. That will leverage AI in various ways to deliver, according to Nvidia, RTX 4090 performance for one third the price. It will also allow for higher levels of performance in laptops, with a mobile RTX 5070 using half the power of an RTX 4090.

The rest of the announced 50-series lineup consists of the RTX 5090 at $1,999 as the halo part of this generation, with 3,400 AI TOPS of performance. The RTX 5080 will deliver a bit more than half the AI performance at 1,800 TOPS, with a far more attractive price of $999, inheriting the price of the outgoing RTX 4080 Super. The RTX 5070 Ti comes next at $749 with 1,400 TOPS, and then finally the RTX 5070 with 1,000 TOPS at the already noted $549 price point.

It's an impressive start to the next generation GPU announcements, but we need to understand how Nvidia plans to deliver these upgrades. There's also plenty that we don't yet know (officially) about these GPUs. But let's start with what we do know.

| Graphics Card | RTX 5090 | RTX 5080 | RTX 5070 Ti | RTX 5070 |
| --- | --- | --- | --- | --- |
| Architecture | GB202 | GB203 | GB203 | GB205 |
| Process Technology | TSMC 4NP | TSMC 4NP | TSMC 4NP | TSMC 4NP |
| Transistors (Billion) | 92 | ? | ? | ? |
| Die size (mm^2) | 744 | ? | ? | ? |
| SMs / CUs / Xe-Cores | 170? | 84? | 64? | 44? |
| GPU Shaders (ALUs) | 21760? | 10752? | 8192? | 5632? |
| Tensor / AI Cores | 680? | 336? | 256? | 176? |
| Ray Tracing Cores | 170? | 84? | 64? | 44? |
| Boost Clock (MHz) | 2450? | 2620? | 2680? | 2770? |
| VRAM Speed (Gbps) | 28 | 32? | 30? | 30? |
| VRAM (GB) | 32 | 16? | 16? | 12? |
| VRAM Bus Width (bits) | 512 | 256? | 256? | 192? |
| L2 / Infinity Cache (MB) | 128? | 64? | 64? | 48? |
| Render Output Units | 240? | 112? | 96? | 64? |
| Texture Mapping Units | 680? | 336? | 256? | 176? |
| TFLOPS FP32 (Boost) | 125 | 56.3 | 43.9 | 31.2 |
| TFLOPS FP16 (INT8 TOPS) | 853 (3412) | 451 (1803) | 351 (1405) | 250 (998) |
| Bandwidth (GB/s) | 1792 | 1024 | 960 | 720 |
| TBP (watts) | 600 | 350 | 300 | 225 |
| Launch Date | Jan 2025 | Jan 2025 | Feb 2025 | Feb 2025 |
| Launch Price | $1,999 | $999 | $749 | $549 |

Given the stated AI TOPS of performance, the first thing that's obvious is that Nvidia has doubled the AI compute operations relative to Ada Lovelace — at least for INT8 workloads. Our Blackwell RTX 50-series overview has had rumored specifications for a while, and based on the AI TOPS we've gone ahead and updated the data to at least give us a ballpark estimate on clock speeds and core configurations.
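To illustrate how a ballpark clock speed can be backed out of an AI TOPS figure, here's a minimal Python sketch. It takes the INT8 TOPS and rumored SM counts from the table and divides through by an assumed 8,192 INT8 operations per SM per clock. That per-SM rate is our inference (it happens to reproduce the table's estimates), not a number Nvidia has published, and the function and variable names are ours.

```python
# Assumption: INT8 ops per SM per clock, inferred from the table and not
# confirmed by Nvidia.
INT8_OPS_PER_SM_PER_CLOCK = 8192


def implied_boost_mhz(ai_tops: float, sm_count: int,
                      ops_per_sm_per_clock: int = INT8_OPS_PER_SM_PER_CLOCK) -> float:
    """Estimate the boost clock (MHz) that would yield the given INT8 TOPS."""
    ops_per_second = ai_tops * 1e12
    clock_hz = ops_per_second / (sm_count * ops_per_sm_per_clock)
    return clock_hz / 1e6


# (INT8 TOPS, rumored SM count) per card, taken from the table.
cards = {
    "RTX 5090": (3412, 170),
    "RTX 5080": (1803, 84),
    "RTX 5070 Ti": (1405, 64),
    "RTX 5070": (998, 44),
}

estimates = {name: implied_boost_mhz(tops, sms) for name, (tops, sms) in cards.items()}

for name, mhz in estimates.items():
    print(f"{name}: ~{mhz:.0f} MHz")
```

If the real SM counts or per-SM throughput differ from the rumors, the implied clocks shift accordingly, which is why the table marks them with question marks.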

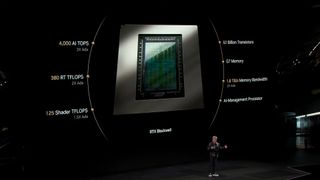

These aren't just random guesses, either. Even if the exact core counts and clock speeds may be slightly off, the stated compute levels should work out. Nvidia shows RTX Blackwell offering 125 TFLOPS of FP32 graphics compute via the shaders, which is 1.5X more than its Ada generation counterpart, while the AI performance will be 3X as high. So, AI performance relative to shader performance has doubled.

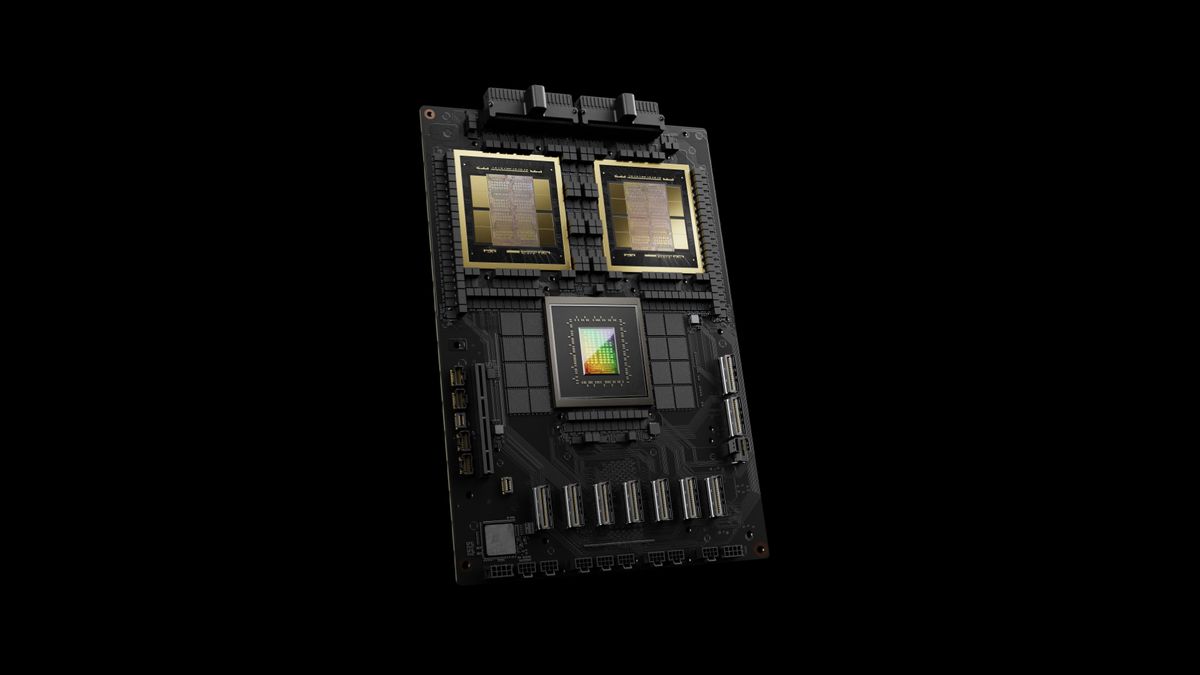

We don't know if the fifth generation tensor cores used in Blackwell will double the throughput for other number formats. Considering the multipurpose use cases for these GPUs — they'll go into gaming cards, yes, but also professional GPUs and data center AI solutions — we suspect all aspects of the tensor cores got upgraded.

What's interesting is that if we plug in the clock speeds and rumored core counts, we can get a better idea of the final specs. The 125 TFLOPS figure is also accompanied by a maximum 4,000 INT8 TOPS, while the RTX 5090 scales that down to 3,400 TOPS. So the 125 TFLOPS figure represents a hypothetical fully enabled Blackwell chip, while the RTX 5090 will only be partially enabled. That makes sense.
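As a rough sanity check, that scaling can be sketched in a few lines of Python. This assumes shader and tensor throughput shrink by the same fraction on the cut-down chip, which Nvidia hasn't confirmed, and the variable names are ours.

```python
# Figures Nvidia showed for the hypothetical fully enabled Blackwell chip.
FULL_CHIP_FP32_TFLOPS = 125.0
FULL_CHIP_INT8_TOPS = 4000.0

# Stated AI TOPS for the RTX 5090.
RTX_5090_INT8_TOPS = 3400.0

# Assumption: FP32 throughput scales down by the same ratio as AI TOPS.
enabled_fraction = RTX_5090_INT8_TOPS / FULL_CHIP_INT8_TOPS
estimated_fp32_tflops = FULL_CHIP_FP32_TFLOPS * enabled_fraction

print(f"Enabled fraction: {enabled_fraction:.0%}")
print(f"Estimated RTX 5090 FP32: ~{estimated_fp32_tflops:.0f} TFLOPS")
```

The ratio folds together disabled SMs and any clock speed differences, so it can't tell us which of the two accounts for the gap.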

Current rumors put the GB202 at up to 192 SMs, while the RTX 5090 will only have 170 enabled. Doing the math, that will give the RTX 5090 about 107 TFLOPS of shader compute to go with the 3,400 TOPS. The 1.8 TB/s bandwidth figure, meanwhile, matches up perfectly with the previously rumored 28 Gbps GDDR7 memory running on a 512-bit memory interface.
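For anyone who wants to check the arithmetic, here's a minimal Python sketch using the rumored 21,760 shaders and our estimated 2,450 MHz boost clock from the table. It assumes the usual two FP32 operations (one fused multiply-add) per shader per clock. The function names are ours.

```python
def fp32_tflops(shaders: int, boost_mhz: float) -> float:
    """Peak FP32 TFLOPS, assuming one FMA (2 FLOPs) per shader per clock."""
    return shaders * 2 * boost_mhz * 1e6 / 1e12


def bandwidth_gbs(gbps_per_pin: float, bus_width_bits: int) -> float:
    """Memory bandwidth in GB/s: per-pin data rate times bus width, in bytes."""
    return gbps_per_pin * bus_width_bits / 8


# Rumored / estimated RTX 5090 figures (unconfirmed).
rtx_5090_tflops = fp32_tflops(shaders=21760, boost_mhz=2450)

# 28 Gbps GDDR7 on a 512-bit interface.
rtx_5090_bandwidth = bandwidth_gbs(gbps_per_pin=28, bus_width_bits=512)

print(f"RTX 5090 FP32: ~{rtx_5090_tflops:.1f} TFLOPS")
print(f"RTX 5090 bandwidth: {rtx_5090_bandwidth:.0f} GB/s")
```

The bandwidth works out to 1,792 GB/s, which rounds to the 1.8 TB/s Nvidia quoted.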

What will Nvidia do with double the AI compute on all of its gaming GPUs? Naturally, it plans to have new features and software solutions that will take advantage of the capabilities. With the RTX 5070 offering 1,000 TOPS of compute, nearly the same performance as the RTX 4090 at one third the price, that opens the doors for more computationally demanding tasks.

One of the most likely use cases will be AI-based texture compression. We've heard about this in the past, and the idea was demonstrated running on previous generation hardware... but not at extreme framerates. Neural Texture Compression back in May 2023 ran at less than half the speed of standard BTC (Block Truncation Coding) compression. But 18 months later, with boosted AI compute and more training? It's conceivable that we could have NTC running at the same speed as traditional BTC.

Given the concerns we and others have had with GPUs running out of VRAM on modern games, it's not too surprising that NTC would be one of the major new features of the Blackwell generation of hardware. Boasting higher image quality with one third the memory use, if utilized it could make even 8GB graphics cards far more viable. There's just one slight problem: Many games are cross-platform titles that run on consoles powered by AMD GPUs.

How many games are going to support Nvidia's new texture compression technology if it requires even just an RTX graphics card? And if it requires an RTX 50-series card, that number will be far smaller. But Nvidia has enough sway to move the gaming market in ways that AMD and Intel can't.

Will this be the rumored DLSS 4, aka neurally rendered graphics? Or will that be something else? Nvidia hasn't said yet, but certainly it appears NTC will at least fall under the DLSS umbrella somewhere.

Besides the desktop GPUs, Nvidia also announced the mobile lineup product names. There will be matching RTX 5090, 5080, 5070 Ti, and 5070 laptops with availability in March 2025. While the model names match the desktop line, performance will be significantly lower, and we expect the other specifications will also see similar cuts.

The RTX 5090 laptop GPU will offer 1,850 AI TOPS and start at $2,899. That means it's basically equal to the desktop RTX 5080. The mobile 5080 drops to 1,350 AI TOPS, slightly less than the desktop 5070 Ti. Mobile 5070 Ti will have the same 1,000 TOPS as the desktop 5070, and then the vanilla 5070 laptop GPU will offer up to 800 AI TOPS — which is probably a tease of the upcoming RTX 5060 Ti desktop part.

The rest of the keynote, as you might expect, spent a lot of time talking about AI use in all sorts of other areas — vehicles, medical, warehouses, robotics, etc. It's all stuff we've been hearing repeatedly from Nvidia for the past several years, and it's all interesting, but it's not really our core focus. There's so much happening in the realm of AI, and at times it feels a lot like the crypto and NFT hype we were hearing about back in 2020–2021. Except, this time it doesn't appear that we'll see an end to Ethereum mining that will quiet things down.

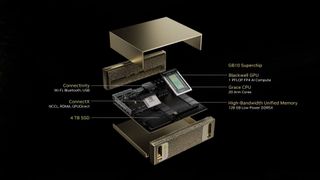

Nvidia also showed off its new "AI supercomputer" that packs a Grace Blackwell GB10 superchip into a mini PC. Called Project Digits, it runs the full DGX software stack, fitting 20 Grace CPU cores, 1 PFLOPS of FP4 performance, 128GB of memory, and a 4TB SSD into what should be a more affordable and portable solution that can sit on a desk.

And that wraps up the keynote. The most exciting stuff was obviously the RTX 50-series announcement, and there's still a lot that we don't know. That will all be revealed in the coming days, and we're anticipating the full RTX 50-series launch to begin before the end of the month. Stay tuned.
