In brief

- Mistral Medium 3 rivals Claude 3.7 and Gemini 2.0 at one-eighth the cost, targeting enterprise AI at scale.

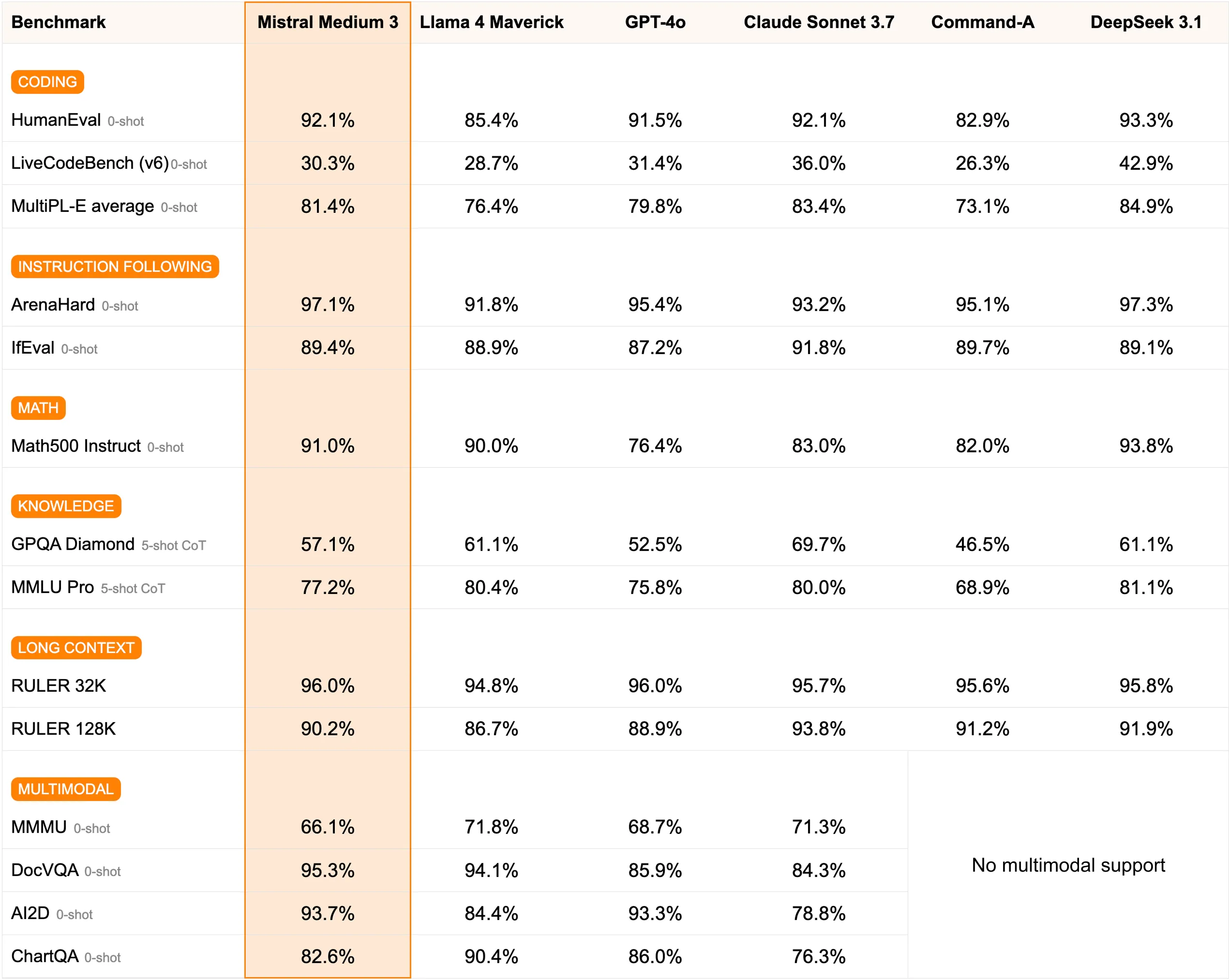

- The model excels in coding and business applications, outperforming Llama 4 Maverick and Cohere Command A in benchmarks.

- Now live on Mistral La Plateforme and Amazon SageMaker, with Google Cloud and Azure integrations coming soon.

Mistral Medium 3 dropped yesterday, positioning the model as a direct challenge to the economics of enterprise AI deployment.

The Paris-based startup, founded in 2023 by former Google DeepMind and Meta AI researchers, released a model it claims delivers frontier performance at one-eighth the operational cost of comparable models.

"Mistral Medium 3 delivers frontier performance while being an order of magnitude less expensive," the company said.

The model represents Mistral AI’s most powerful proprietary offering to date, distinguishing itself from an open-source portfolio that includes Mistral 7B, Mixtral, Codestral, and Pixtral.

At $0.40 per million input tokens and $2 per million output tokens, Medium 3 significantly undercuts competitors while, according to the company, matching their performance. Independent evaluations by Artificial Analysis placed the model "amongst the leading non-reasoning models with Medium 3 rivalling Llama 4 Maverick, Gemini 2.0 Flash and Claude 3.7 Sonnet."

> Mistral Medium 3 independent evals: Mistral is back amongst the leading non-reasoning models with Medium 3 rivalling Llama 4 Maverick, Gemini 2.0 Flash and Claude 3.7 Sonnet
>
> Key takeaways:
>
> ➤ Intelligence: We see substantial intelligence gains across all 7 of our evals compared… pic.twitter.com/mc9il9WV8J
>
> — Artificial Analysis (@ArtificialAnlys) May 8, 2025
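To make the pricing concrete, here is a minimal Python sketch that prices a hypothetical workload of 10 million input tokens and 2 million output tokens at Medium 3's quoted rates. For comparison it assumes Claude 3.7 Sonnet's list price of $3 per million input tokens and $15 per million output tokens, which the article does not state; the workload size is also purely illustrative.

```python
from decimal import Decimal

# List prices quoted in the article, in US dollars per million tokens.
MEDIUM3_INPUT_PER_M = Decimal("0.40")
MEDIUM3_OUTPUT_PER_M = Decimal("2.00")

# Assumption for comparison only: Claude 3.7 Sonnet's list price
# ($3 input / $15 output per million tokens). Check current pricing.
SONNET37_INPUT_PER_M = Decimal("3.00")
SONNET37_OUTPUT_PER_M = Decimal("15.00")

MILLION = Decimal(1_000_000)


def workload_cost(input_tokens: int, output_tokens: int,
                  input_per_m: Decimal, output_per_m: Decimal) -> Decimal:
    """Return the dollar cost of a workload billed per million tokens."""
    return (Decimal(input_tokens) * input_per_m
            + Decimal(output_tokens) * output_per_m) / MILLION


# Hypothetical workload: 10M input tokens, 2M output tokens.
medium = workload_cost(10_000_000, 2_000_000, MEDIUM3_INPUT_PER_M, MEDIUM3_OUTPUT_PER_M)
sonnet = workload_cost(10_000_000, 2_000_000, SONNET37_INPUT_PER_M, SONNET37_OUTPUT_PER_M)

print(f"Medium 3: ${medium:.2f}")           # Medium 3: $8.00
print(f"Claude 3.7 Sonnet: ${sonnet:.2f}")  # Claude 3.7 Sonnet: $60.00
print(f"Ratio: {sonnet / medium}")          # Ratio: 7.5
```

Under these assumptions the gap works out to 7.5x on both input and output, roughly the "one-eighth" figure Mistral cites. `Decimal` is used instead of floats so the dollar amounts stay exact.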

The model excels particularly in professional domains.

Human evaluations demonstrated superior performance in coding tasks, with Sophia Yang, a Mistral AI representative, noting that "Mistral Medium 3 shines in the coding domain and delivers much better performance, across the board, than some of its much larger competitors."

Benchmark results indicate Medium 3 performs at or above the level of Anthropic's Claude 3.7 Sonnet across diverse test categories, while substantially outperforming Meta's Llama 4 Maverick and Cohere's Command A in specialized areas such as coding and reasoning.

The model's 128,000-token context window is standard for models in its class, and its multimodal support lets it process documents and visual inputs in 40 languages.

Image: Mistral AI

But unlike the models that made Mistral famous, Medium 3 is not open-weight: users cannot modify it or run it locally.

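For now, the best option for open-source enthusiasts is Mixtral-8x22B-v0.3, a mixture-of-experts model with eight experts. Despite the name, it is not simply eight 22-billion-parameter networks: the experts share the model's attention layers, so it totals about 141 billion parameters, of which roughly 39 billion are active for any given token. Beyond Mixtral, the company offers more than a dozen open-source models.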
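For readers unfamiliar with the mixture-of-experts design, the toy Python sketch below illustrates the idea behind Mixtral's sparse routing: a router scores every expert for each token, only the top two run, and their outputs are mixed using the router's renormalized scores. The scalar "experts", the router scores, and the function names are hypothetical stand-ins, not Mistral's implementation; real experts are large feed-forward blocks inside each transformer layer.

```python
import math
from typing import Callable, List, Sequence

# Toy "experts": each maps a scalar input to a scalar output.
# Real experts are feed-forward sub-networks with billions of parameters.
Expert = Callable[[float], float]


def softmax(xs: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x: float, router_logits: Sequence[float],
                experts: Sequence[Expert], top_k: int = 2) -> float:
    """Route one token to its top_k experts and mix their outputs.

    Only the selected experts run, which is why a sparse MoE model's
    active parameter count per token is far below its total count.
    """
    # Indices of the top_k highest router scores (ties keep original order).
    chosen = sorted(range(len(experts)),
                    key=lambda i: router_logits[i], reverse=True)[:top_k]
    # Renormalize the router scores over the chosen experts only.
    weights = softmax([router_logits[i] for i in chosen])
    # Weighted sum of the chosen experts' outputs; all others are skipped.
    return sum(w * experts[i](x) for w, i in zip(weights, chosen))


# Eight toy experts (mirroring the "8x" in the name); expert k scales input by k + 1.
experts = [lambda x, k=k: (k + 1) * x for k in range(8)]
# Hypothetical router scores for one token: experts 2 and 5 tie for the top spot.
logits = [0.1, -1.0, 2.0, 0.3, -0.5, 2.0, 0.0, 1.0]
print(moe_forward(1.0, logits, experts))  # 0.5 * 3.0 + 0.5 * 6.0 = 4.5
```

Because only two of the eight experts execute per token, compute per token tracks the active parameters rather than the total parameter count.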

Medium 3 is also initially available only for enterprise deployment, not for consumer use through Le Chat, Mistral's chatbot interface. Mistral AI emphasized the model's enterprise adaptation capabilities, including continuous pretraining, full fine-tuning, and integration into corporate knowledge bases for domain-specific applications.

Beta customers across financial services, energy, and healthcare sectors are testing the model for customer service enhancement, business process personalization, and complex dataset analysis.

The API is available now on Mistral La Plateforme and Amazon SageMaker, with integrations planned for IBM watsonx, NVIDIA NIM, Azure AI Foundry, and Google Cloud Vertex AI.
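For developers, a call to the new model on La Plateforme would look roughly like the following Python sketch, which uses only the standard library. The endpoint URL, the `mistral-medium-latest` model alias, the `MISTRAL_API_KEY` environment variable, and the response fields are assumptions based on Mistral's public API conventions rather than details from the announcement, so check them against the current documentation.

```python
import json
import os
import urllib.request

# Assumptions: chat-completions endpoint and model alias as listed in
# Mistral's API documentation at the time of writing; verify before use.
API_URL = "https://api.mistral.ai/v1/chat/completions"
MODEL = "mistral-medium-latest"


def build_chat_request(prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a chat-completions POST request."""
    payload = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    key = os.environ.get("MISTRAL_API_KEY")  # hypothetical variable name
    if key:
        req = build_chat_request("Summarize this quarter's support tickets.", key)
        with urllib.request.urlopen(req) as resp:
            body = json.load(resp)
        # Assumed response shape: choices[0].message.content
        print(body["choices"][0]["message"]["content"])
    else:
        print("Set MISTRAL_API_KEY to send a real request.")
```

Building the request separately from sending it lets the payload be inspected or logged before it leaves the machine; the network call only happens when an API key is present.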

The announcement sparked considerable discussion across social media platforms, with AI researchers praising the cost-efficiency breakthrough while noting the proprietary nature as a potential limitation.

The model's closed-source status marks a departure from Mistral's open-weight offerings, though the company hinted at future releases.

"With the launches of Mistral Small in March and Mistral Medium today, it's no secret that we're working on something 'large' over the next few weeks," Mistral’s Head of Developer Relations Sophia Yang teased in the announcement. "With even our medium-sized model being resoundingly better than flagship open source models such as Llama 4 Maverick, we're excited to 'open' up what's to come."

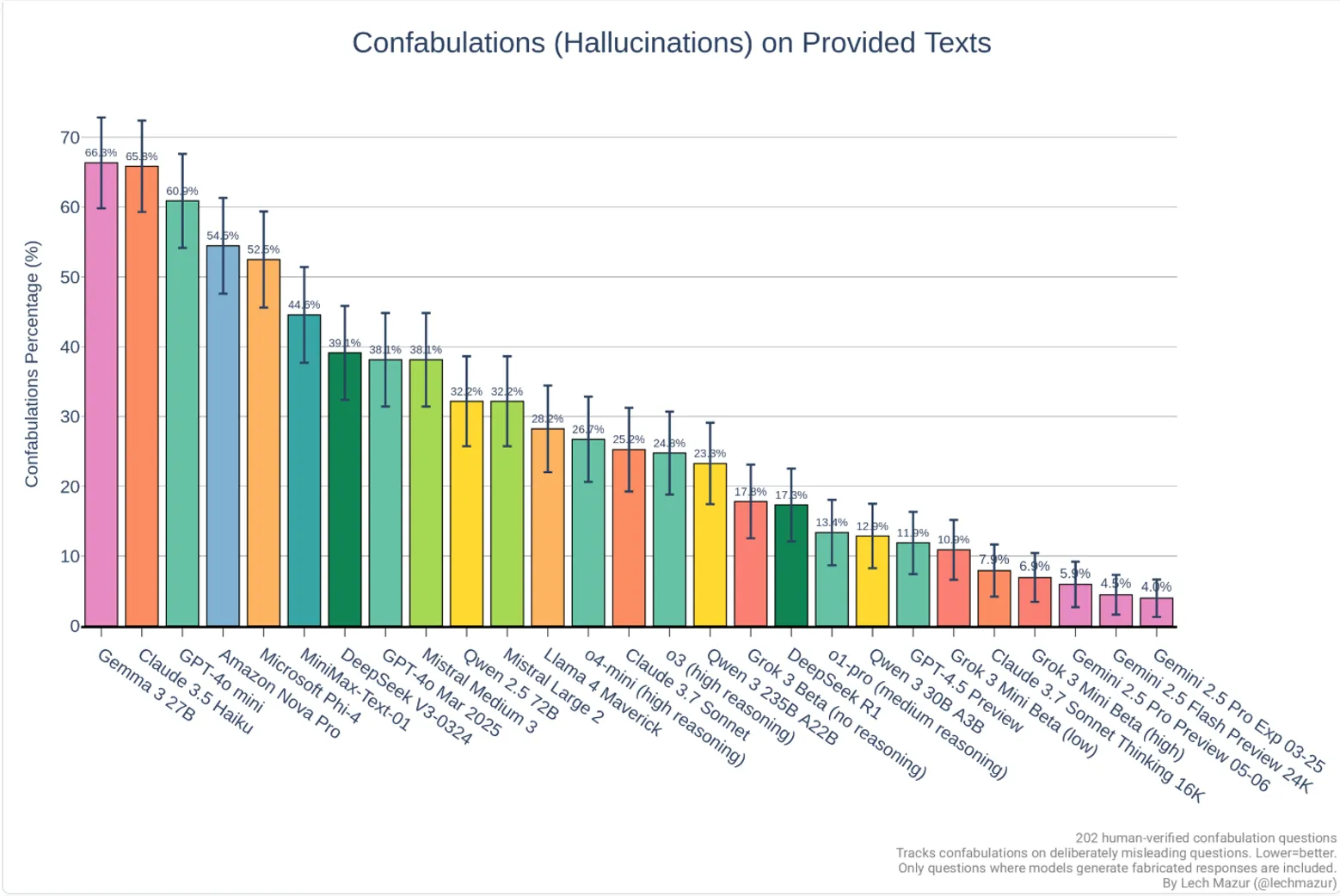

Medium 3 also hallucinates less than the average model on Lech Mazur's hallucination benchmark, which is impressive for a model of its size.

It scores better than Meta's Llama 4 Maverick, DeepSeek V3, and Amazon Nova Pro, to name a few. Currently, the model with the fewest hallucinations is Google's recently launched Gemini 2.5 Pro.

Image: Lech Mazur via GitHub

This release comes amid impressive business growth for the Paris-based company, which had been relatively quiet since releasing Mistral Large 2 last year.

Mistral recently launched an enterprise version of its Le Chat chatbot that integrates with Microsoft SharePoint and Google Drive, with CEO Arthur Mensch telling Reuters they've "tripled (their) business in the last 100 days, in particular in Europe and outside of the U.S."

The company, now valued at $6 billion, is flexing its technological independence by operating its own compute infrastructure and reducing reliance on U.S. cloud providers—a strategic move that resonates in Europe amid strained relations following President Trump's tariffs on tech products.

Whether Mistral's claim of achieving enterprise-grade performance at consumer-friendly prices holds up in real-world deployment remains to be seen.

But for now, Mistral has positioned Medium 3 as a compelling middle ground in an industry that often assumes bigger (and pricier) equals better.

Edited by Josh Quittner and Sebastian Sinclair
