The Chinese developers of DeepSeek AI have released a new model that uses its multimodal capabilities to handle complex documents and large blocks of text more efficiently by converting them into images first, as per SCMP. Vision encoders take large quantities of text and convert them into images, which, when accessed later, require between seven and 20 times fewer tokens while maintaining an impressive level of accuracy.

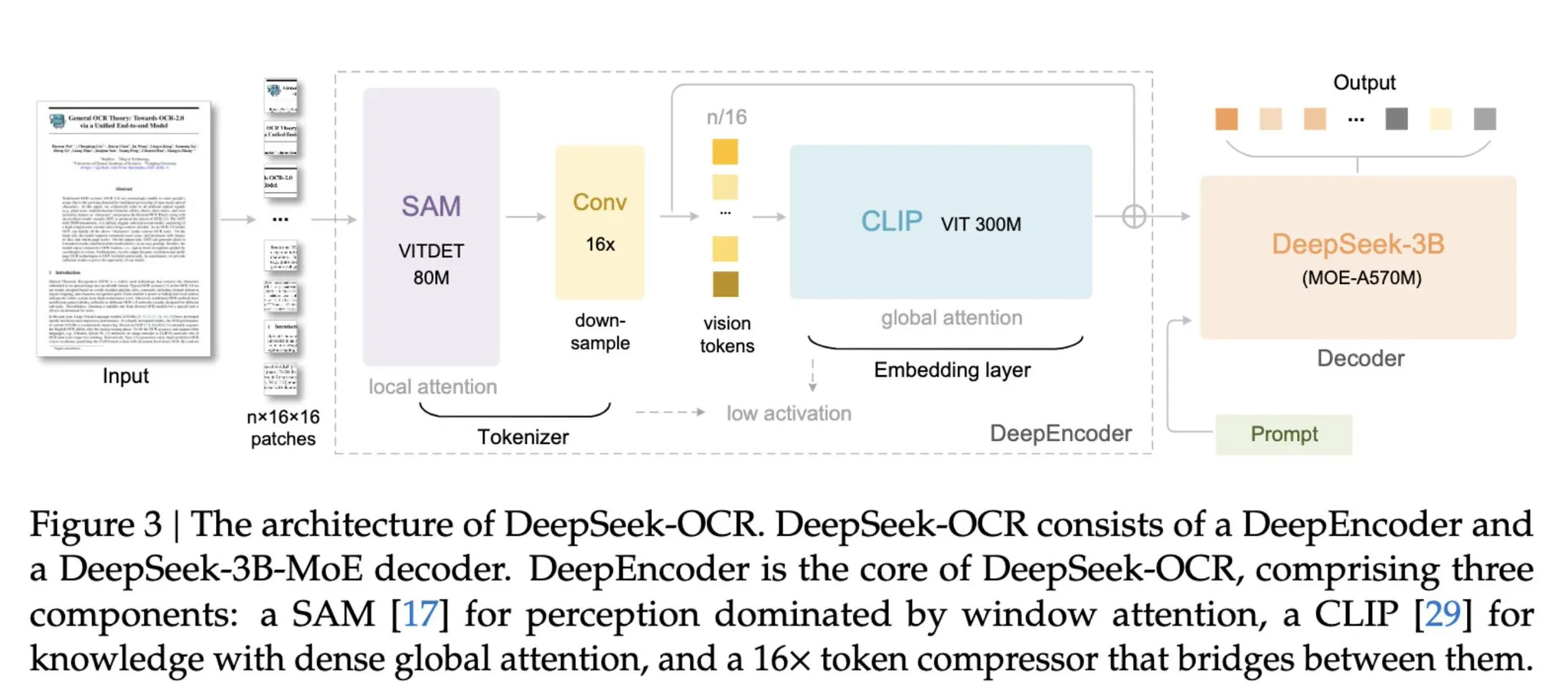

DeepSeek is the Chinese-developed AI that shocked the world in early 2025, showcasing capabilities similar to those of OpenAI's ChatGPT or Google's Gemini despite requiring far less money and data to develop. Its creators have continued to work on making the AI more efficient since then, and with the latest release, known as DeepSeek-OCR (optical character recognition), the AI can deliver an impressive understanding of large quantities of textual data without the usual token overhead.

“Through DeepSeek-OCR, we demonstrated that vision-text compression can achieve significant token reduction – seven to 20 times – for different historical context stages, offering a promising direction” to handle long-context calculations, the developer said.

The approach works particularly well for tabulated data, graphs, and other visual representations of information, which could make it especially useful in finance, science, or medicine, the developers suggest.

In benchmarking, the developers claim that when reducing the number of tokens by less than a factor of 10, DeepSeek-OCR can maintain a 97% accuracy rating in decoding the information. If the compression ratio is increased to 20 times, the accuracy falls to 60%. That's less desirable and shows there are diminishing returns on this technology, but if a near-100% accuracy rate could be achieved with even a 1-2x compression rate, that could still make a huge difference in the cost of running many of the latest AI models.
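To put those ratios in perspective, here is a minimal back-of-the-envelope sketch in Python. It is not DeepSeek's code: the function names and the 100,000-token example document are hypothetical, and the accuracy figures are simply the ones quoted above, with the 97% figure treated as applying at roughly 10x.

```python
import math

# Accuracy figures as quoted in the article (approximate):
# ~97% at a little under 10x compression, ~60% at 20x.
REPORTED_ACCURACY = {10: 0.97, 20: 0.60}


def vision_tokens(text_tokens: int, ratio: float) -> int:
    """Tokens needed after compression at the given ratio, rounded up."""
    if ratio <= 0:
        raise ValueError("compression ratio must be positive")
    return math.ceil(text_tokens / ratio)


def token_savings(text_tokens: int, ratio: float) -> float:
    """Fraction of the original token count that compression removes."""
    return 1 - vision_tokens(text_tokens, ratio) / text_tokens


if __name__ == "__main__":
    doc_tokens = 100_000  # hypothetical document size, for illustration only
    for ratio in (2, 10, 20):
        accuracy = REPORTED_ACCURACY.get(ratio)
        note = f"{accuracy:.0%} reported" if accuracy is not None else "no figure reported"
        print(
            f"{ratio:>2}x: {vision_tokens(doc_tokens, ratio):>6} tokens, "
            f"{token_savings(doc_tokens, ratio):.0%} saved, accuracy: {note}"
        )
```

Even the modest 2x case halves the token count, which is why a small compression ratio at near-perfect accuracy could still matter for running costs.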

It's also being pitched as a way of generating training data for future models, although introducing errors at that stage, even at a rate of a few percent, seems like a bad idea.

If you want to play around with the model yourself, it's available via online developer platforms Hugging Face and GitHub.

Follow Tom's Hardware on Google News, or add us as a preferred source, to get our latest news, analysis, & reviews in your feeds.
