- Microsoft's 'Recall' feature for Windows has been mired in controversy over privacy concerns

- The tool uses AI to constantly take screenshots and create a searchable timeline of your activity

- A new 'sensitive information' filter has been deployed for testing, but it doesn't appear to work very well

That’s right folks, it’s that time of the week again: Microsoft Recall has yet again stumbled straight out of the gate, this time accused of storing personal user data such as credit card details and Social Security numbers, even with a supposed ‘sensitive information’ filter switched on.

A highly controversial feature originally announced for Copilot+ PCs way back in June, Recall uses AI to effectively take constant screenshots of whatever you’re doing on your computer and arrange those screenshots in a timeline, allowing you to ‘recall’ back to an earlier point by prompting Copilot to search back through your system history.

I wasn’t kidding with that “time of the week” remark, by the way. Just last week we reported that a glitch was stopping Recall from working at all for some users, and literally one week before that we reported a bug with the screenshotting portion of the tool. Before that, it was delayed multiple times due to privacy and security concerns. It’s been far from a smooth rollout, to say the least.

But Microsoft has now officially pushed out Recall for public testing (via the Windows Insider Channel) as an opt-in feature, so naturally some enterprising folks are trying to break it – and our friends over at Tom’s Hardware have had some immediate success.

So what’s the problem with Recall?

TH’s Avram Piltch did some in-depth testing with Recall, specifically investigating how the supposed new sensitive data filter worked. As it turns out, it doesn’t work very well at all: across multiple apps and websites, the filter only stopped Recall from screenshotting personal details on two online stores. It even failed when he entered financial information on a custom HTML page with an input box that literally said, “enter your credit card number below.”

Piltch obviously didn’t publish screenshots of his own credit card details, but noted that he did test using his real info and Recall still captured it. However the filter functions (it presumably uses AI to identify private information on-screen), it clearly still needs some work.
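To give a sense of why this kind of filtering is harder than it sounds, here’s a minimal sketch of the simplest possible approach: scanning on-screen text for things shaped like card numbers or Social Security numbers. To be clear, this is purely an illustration and not how Recall’s filter works (Microsoft hasn’t detailed that); the names `CARD_RE`, `SSN_RE`, `luhn_valid` and `find_sensitive` are all hypothetical.

```python
import re

# Hypothetical patterns for illustration only; NOT Microsoft's actual filter.
# 13-19 digits, optionally separated by spaces or hyphens.
CARD_RE = re.compile(r"\b(?:\d[ -]?){13,19}\b")
# US Social Security number written in the common 3-2-4 layout.
SSN_RE = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def luhn_valid(number: str) -> bool:
    """Return True if the digits in `number` pass the Luhn checksum."""
    digits = [int(ch) for ch in number if ch.isdigit()]
    if not 13 <= len(digits) <= 19:
        return False
    total = 0
    # Walk from the rightmost digit, doubling every second one.
    for i, d in enumerate(reversed(digits)):
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def find_sensitive(text: str) -> list[tuple[str, str]]:
    """Return (kind, match) pairs for card-like and SSN-like strings."""
    hits = []
    for m in CARD_RE.finditer(text):
        # The Luhn check weeds out random long digit runs.
        if luhn_valid(m.group()):
            hits.append(("card", m.group().strip(" -")))
    for m in SSN_RE.finditer(text):
        hits.append(("ssn", m.group()))
    return hits


if __name__ == "__main__":
    # 4111 1111 1111 1111 is a widely used test card number, not a real one.
    print(find_sensitive("enter your credit card number below: 4111 1111 1111 1111"))
```

A pattern matcher like this only catches data in the formats it expects. Anything written unusually, split across fields, or rendered as an image would slip straight past it, which is presumably why a production filter needs something smarter.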

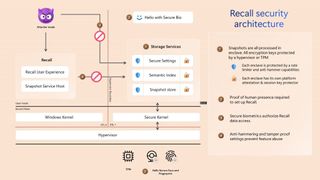

Microsoft does say on its blog that it plans to “continue to improve this functionality” and that “you can delete any snapshot in Recall that you don’t want and tell Recall to ignore that app or website in that snapshot going forward”, but as it stands right now, Insiders using the tool are effectively putting their data at risk. The screenshots are encrypted and not shared with Microsoft or any third parties, but keeping an exhaustive record of your PC use like that is basically creating a perfect database for bad actors to nab your personal information from.

Of course, the feature is still technically in testing even if members of the public can access it now, so there’s every chance that by the time Recall hits full release (whenever that ends up being) it’ll have had these kinks fully ironed out. But with so many concerns buzzing around it, I personally don’t think I’ll be using it. My memory is just fine, Microsoft.

