
TechSpot means tech analysis and advice you can trust.

The big picture: Nvidia holds anywhere from 75 to 90 percent of the AI chip market. Despite, or rather because of, this dominance, big tech rivals and hyperscalers have been developing their own hardware and accelerators to chip away at the company's AI empire. Microsoft is now ready to share the details of its first custom AI chip.

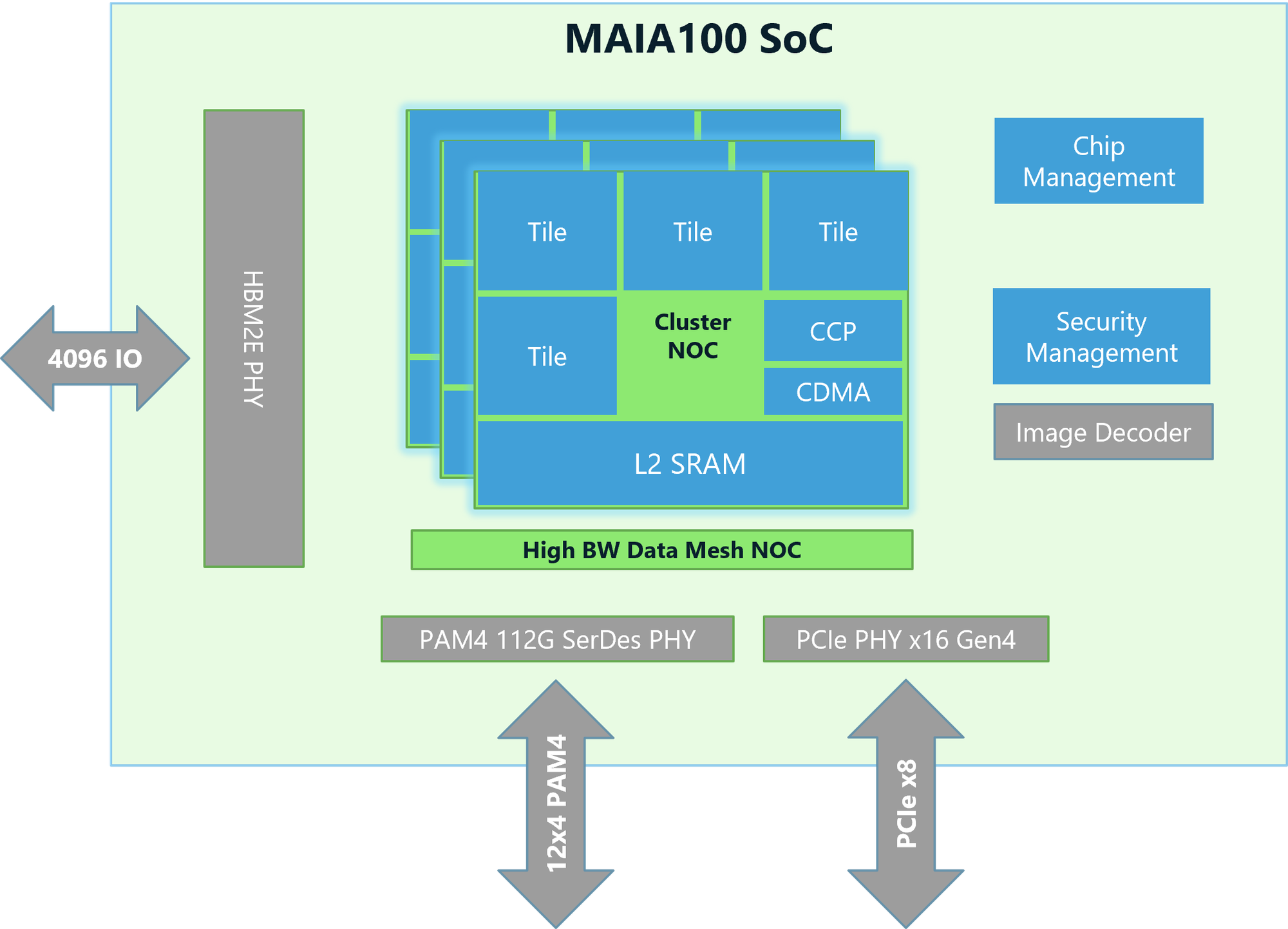

Microsoft detailed its first AI accelerator, Maia 100, at this year's Hot Chips conference. The chip is built around an architecture that combines it with custom server boards, racks, and software to deliver cost-effective performance for AI workloads. Redmond designed the accelerator to run OpenAI models in its own Azure data centers.

The chips are built on TSMC's 5nm process node and are provisioned as 500W parts, though they can support a TDP of up to 700W.

Microsoft says the design delivers high overall performance while efficiently managing power draw for its target workloads. The accelerator carries 64GB of HBM2E, a step down from the Nvidia H100's 80GB and the B200's 192GB of HBM3E.

According to Microsoft, the Maia 100 SoC features a high-speed tensor unit (16xRx16) that provides fast processing for both training and inference while supporting a wide range of data types, including low-precision types such as Microsoft's MX format.
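
The core idea behind MX-style (microscaling) formats is that a small block of values shares a single power-of-two scale, while each element is stored in a narrow type. The Python sketch below illustrates that idea, assuming a block size of 32 (the size used by the OCP MX formats) and signed 8-bit integer elements. It is a simplified illustration of block-scaled quantization, not the exact OCP MX bit encoding and not anything Maia-specific; the function names are hypothetical.

```python
import math

# Assumption: blocks of 32 elements, as in the OCP MX formats.
BLOCK_SIZE = 32

def quantize_block(values):
    """Quantize one block to (shared power-of-two exponent, int8-range elements)."""
    max_abs = max(abs(v) for v in values)
    if max_abs == 0:
        return 0, [0] * len(values)
    # Smallest power-of-two scale such that max_abs / scale <= 127.
    shared_exp = math.ceil(math.log2(max_abs / 127))
    scale = 2.0 ** shared_exp
    # Round each element to the nearest integer step, clamped to the int8 range.
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return shared_exp, q

def dequantize_block(shared_exp, q):
    """Recover approximate values by multiplying elements by the shared scale."""
    scale = 2.0 ** shared_exp
    return [x * scale for x in q]

def quantize(values, block_size=BLOCK_SIZE):
    """Split a flat list into blocks and quantize each block independently."""
    return [quantize_block(values[i:i + block_size])
            for i in range(0, len(values), block_size)]

def dequantize(blocks):
    """Concatenate the dequantized blocks back into a flat list."""
    out = []
    for shared_exp, q in blocks:
        out.extend(dequantize_block(shared_exp, q))
    return out
```

Because each block picks its own scale, a block of small values keeps fine resolution even when another block holds large values, and a power-of-two scale amounts to an exponent adjustment rather than a general multiply.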

It also includes a loosely coupled superscalar engine (a vector processor) built on a custom ISA that supports data types including FP32 and BF16; a Direct Memory Access (DMA) engine that supports different tensor sharding schemes; and hardware semaphores that enable asynchronous programming.
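
Microsoft does not spell out which sharding schemes the DMA engine handles, but two common ones for a matrix multiply are column-wise and row-wise splitting of the weight matrix. The plain-Python sketch below simulates "devices" as list entries to show why both produce the same result as the unsharded product. It illustrates the general technique only; it is not Maia's DMA interface, and all function names are hypothetical.

```python
def matmul(a, b):
    """Naive dense matrix multiply on lists of lists: (m x k) @ (k x n)."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]

def shard_columns(w, num_shards):
    """Split w column-wise into contiguous pieces (ceiling-sized)."""
    size = -(-len(w[0]) // num_shards)  # ceiling division
    return [[row[s:s + size] for row in w] for s in range(0, len(w[0]), size)]

def shard_rows(w, num_shards):
    """Split w row-wise into contiguous pieces (ceiling-sized)."""
    size = -(-len(w) // num_shards)
    return [w[s:s + size] for s in range(0, len(w), size)]

def column_parallel(x, w, num_shards):
    # Each simulated device holds some of W's columns and produces the
    # matching output columns; results are concatenated per row.
    partials = [matmul(x, shard) for shard in shard_columns(w, num_shards)]
    return [sum((p[i] for p in partials), []) for i in range(len(x))]

def row_parallel(x, w, num_shards):
    # Each simulated device holds some of W's rows plus the matching columns
    # of x; partial products are summed (an all-reduce on real hardware).
    total, start = None, 0
    for shard in shard_rows(w, num_shards):
        k = len(shard)
        x_slice = [row[start:start + k] for row in x]
        part = matmul(x_slice, shard)
        total = part if total is None else [
            [a + b for a, b in zip(r1, r2)] for r1, r2 in zip(total, part)]
        start += k
    return total
```

Column-wise splitting needs only a gather of output slices, while row-wise splitting requires summing partial results across devices, which is why hardware that moves tensor shards efficiently matters for both.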

Alongside the hardware, Microsoft provides developers with the Maia SDK, which includes tools that let AI developers quickly port models previously written in PyTorch and Triton.

The SDK includes framework integration, developer tools, two programming models, and compilers. It also provides optimized compute and communication kernels and the Maia Host/Device Runtime, a hardware abstraction layer that handles memory allocation, kernel launches, scheduling, and device management.

Microsoft has published more details on the SDK, Maia's backend network protocol, and optimization in its "Inside Maia 100" blog post, which makes a good read for developers and AI enthusiasts.
