In a Linux kernel patch series published today, AMD engineer Samuel Zhang highlighted an unusual issue: Linux servers failing to hibernate because of the sheer amount of VRAM spread across a large number of AMD Instinct accelerators per system. For context, Instinct accelerators are AMD's data center GPUs, designed for AI, high-performance computing, scientific workloads, and other demanding tasks.

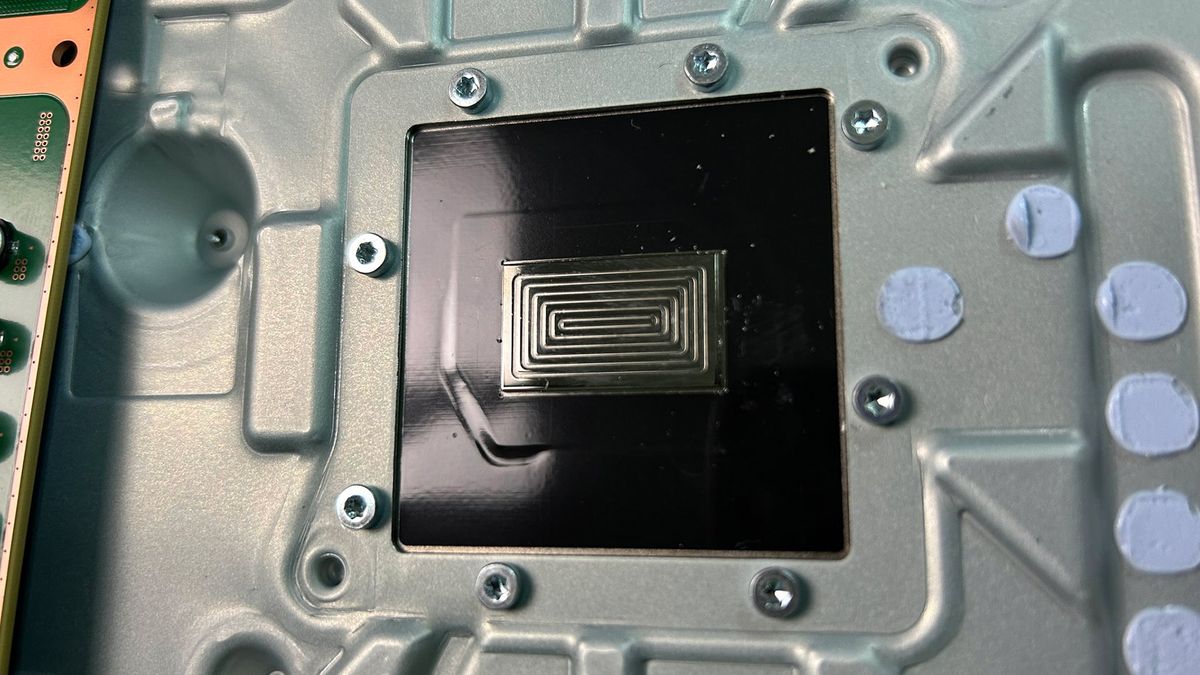

Part of what makes these GPUs so powerful is their massive amount of VRAM, such as the 192GB found on some models. That might sound huge to gamers, but it is fairly standard for modern data center chips. The Linux-powered AMD AI server in this case is equipped with eight Instinct cards, bringing the total VRAM to around 1.5TB. While more VRAM is generally a good thing, in cases like this it can lead to unexpected issues.

VRAM capacity plays a part, but the root cause of the hibernation failure isn't the number of Instinct cards; it's how Linux handles GPU memory during hibernation. When the system initiates hibernation, all GPU memory is first evicted to system RAM, typically via the Graphics Translation Table (GTT) or shared memory (shmem). The kernel then creates a hibernation image by copying all system memory contents, including the evicted VRAM, into a second memory region before writing it to disk.

Sounds confusing? In simple terms, the evicted VRAM ends up in system memory twice. On a server with 1.5TB of total VRAM, this duplication can push memory usage up to 3TB, which easily exceeds the capacity of servers equipped with only 2TB of system memory. The resulting shortfall causes the hibernation process to fail.
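The arithmetic behind that failure can be sketched with a deliberately simplified model. It assumes that every byte of VRAM is evicted to system RAM and then copied once more for the hibernation image, and it ignores the memory the OS and applications already use as well as any image compression. The function names are hypothetical and only illustrate the accounting; 1TB is treated as 1024GB.

```python
# Simplified, hypothetical model of peak system-RAM demand during hibernation.
# Assumptions: all VRAM is evicted to system RAM (GTT/shmem), then the snapshot
# copies it again, so the evicted VRAM is counted twice. Other RAM usage and
# image compression are ignored.

def peak_hibernation_ram_gb(num_gpus: int, vram_per_gpu_gb: int) -> int:
    """Return peak system RAM (GB) needed to hold evicted VRAM plus its snapshot copy."""
    evicted = num_gpus * vram_per_gpu_gb  # VRAM moved into system RAM
    snapshot_copy = evicted               # second copy made for the hibernation image
    return evicted + snapshot_copy


def fits(num_gpus: int, vram_per_gpu_gb: int, system_ram_gb: int) -> bool:
    """True if the simplified peak demand fits within installed system RAM."""
    return peak_hibernation_ram_gb(num_gpus, vram_per_gpu_gb) <= system_ram_gb


if __name__ == "__main__":
    # Eight 192GB Instinct cards on a server with 2TB (2048GB) of system RAM.
    print(peak_hibernation_ram_gb(8, 192))  # 3072
    print(fits(8, 192, 2048))               # False
```

Even under these generous assumptions, 8 × 192GB of VRAM doubles to roughly 3TB, well past the 2TB of installed system memory.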

Fortunately, Zhang has been working to address this hibernation issue and has proposed a set of patches. The first reduces the amount of system memory needed during hibernation, allowing the process to succeed. However, this introduces a new problem: the "thaw" stage (when devices are brought back up after the hibernation image has been created, so the image can be written to disk) could take nearly an hour because of the large amount of memory involved. To fix this, another patch skips restoring these buffer objects during the thaw stage, significantly shortening the process.

Most high-end AI servers run continuously, so it's fair to ask why anyone would hibernate them. One common reason is to reduce power consumption during idle periods and help stabilize the electrical grid. Because large-scale data centers consume massive amounts of power, shedding that load when it isn't needed can help lower the risk of blackouts like the one recently seen in Spain.
