The first GPU company to offer it was Nvidia in 2022, followed by AMD one year later, and now Intel has joined in the fun. I am, of course, talking about frame generation, and while none of the systems is perfect, they all share the same issue: increased input latency. However, researchers at Intel have developed a frame generation algorithm that adds no lag whatsoever, because it extrapolates new frames rather than interpolating them.

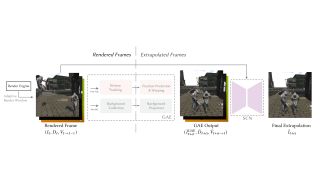

If you've a mind for highly technical documents, you can read the full details about how it all works on the GitHub page of one of the researchers. Just as with all rendering technologies, this one has a catchy name and suitable initialism: G-buffer Free Frame Extrapolation (GFFE). To understand what it does differently to DLSS, FSR, and XeSS-FG, it helps to have a bit of an understanding of how the current frame generation systems work.

AMD, Intel, and Nvidia have different algorithms but they take the same fundamental approach: Render two frames in succession and store both of them in the graphics card's VRAM, rather than displaying them.

Then, in place of rendering another frame, the GPU either runs a bunch of compute shaders (as per AMD's FSR) or some AI neural networks (Nvidia's DLSS and Intel's XeSS) to analyse the two frames for changes and motion, and then create a frame based on that information. This generated frame is then sequenced between the two previously rendered frames, and then they're sent off to the monitor in that order for display.
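To make the sequencing concrete, here is a minimal sketch in Python. It assumes frames are just flat lists of brightness values and uses a plain linear blend as a stand-in for the far more elaborate motion analysis that DLSS, FSR, and XeSS actually perform. The function names `interpolate` and `present_order` are made up for illustration.

```python
# Hypothetical sketch: a frame is a flat list of pixel brightness values.
# A linear blend stands in for the real motion analysis (an assumption).

def interpolate(frame_a, frame_b, t=0.5):
    """Blend two rendered frames; t=0.5 gives the midpoint between them."""
    if len(frame_a) != len(frame_b):
        raise ValueError("frames must have the same size")
    return [(1 - t) * a + t * b for a, b in zip(frame_a, frame_b)]


def present_order(frame_a, frame_b):
    """Return the display order: rendered, generated, rendered."""
    return [frame_a, interpolate(frame_a, frame_b), frame_b]


frame_a = [0.0, 10.0, 20.0]   # older rendered frame
frame_b = [10.0, 30.0, 20.0]  # newer rendered frame
sequence = present_order(frame_a, frame_b)
print(sequence[1])  # [5.0, 20.0, 20.0]
```

Note that `frame_b` has to exist before the in-between frame can be made, which is the root of the latency problem.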

While none of the three technologies produces absolutely perfect frames every time, more often than not, you don't really notice the flaws because each generated frame only appears on screen for a fraction of a second before a normally rendered frame takes its place. What one can easily notice, however, is the increased input lag.

Game engines poll for input changes at fixed time intervals and then apply any changes to the next frame to be rendered. Generated frames won't have such information applied to them and because two 'normal' frames have been held back to make the 'artificial' one, there's a degree of additional latency between you waving your mouse about and seeing the motion on the screen.
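A rough back-of-the-envelope model shows where that delay comes from. The sketch below is a simplification of my own, not a description of how any vendor schedules frames: it assumes the generated frame is shown as soon as it is ready and the newer rendered frame follows half a render interval later. The 3 ms generation cost is a purely hypothetical figure.

```python
def interpolation_delay_ms(rendered_fps, generation_ms):
    """Extra delay before a rendered frame is displayed (simplified model).

    Assumption: the newer rendered frame waits for the in-between frame to
    be generated, then for that frame's half-interval slot on screen.
    """
    render_interval_ms = 1000.0 / rendered_fps
    return generation_ms + render_interval_ms / 2


# 50 rendered frames per second, hypothetical 3 ms generation cost.
delay = interpolation_delay_ms(rendered_fps=50, generation_ms=3.0)
print(f"{delay:.1f} ms")  # 13.0 ms
```

Real pipelines add queueing and display effects on top of this, so treat the number as an illustration of the mechanism rather than a measurement.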

This is where frame extrapolation comes into play. Rather than holding rendered frames back in a queue, the algorithm simply keeps a history of the frames that have already been rendered and uses them to generate a new one. The system then just adds the extrapolated frame after a 'normal' one, giving the required performance boost.

Such systems aren't new and they've been in development for many years now, but nothing has appeared so far to match the likes of DLSS in terms of real-time speed. What sets GFFE apart is that it's pretty fast (6.6 milliseconds to generate a 1080p frame) and it doesn't require access to a rendering engine's motion or vector buffers, just the complete frames.

In theory, that means GFFE could be applied on a driver level, rather than requiring integration in the game's rendering pipeline. And best of all, because no frames are being held back, there's hardly any input lag.
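The basic idea of extrapolating from history can be sketched in a few lines of Python. This is emphatically not GFFE's method, which is far more sophisticated; it is a naive linear projection over flat lists of pixel values, with the hypothetical helpers `extrapolate` and `fits_budget` invented for illustration. The only number taken from the article is the 6.6 ms generation time at 1080p.

```python
GFFE_MS_1080P = 6.6  # generation time quoted for a 1080p frame


def extrapolate(history, step=0.5):
    """Project the last two rendered frames forward by `step` intervals.

    Naive linear extrapolation, used here only as a stand-in (assumption).
    """
    if len(history) < 2:
        raise ValueError("need at least two rendered frames of history")
    older, newer = history[-2], history[-1]
    return [n + step * (n - o) for o, n in zip(older, newer)]


def fits_budget(rendered_fps, generation_ms=GFFE_MS_1080P):
    """Assumption: generation only pays off if it is cheaper than rendering."""
    return generation_ms < 1000.0 / rendered_fps


history = [[0.0, 10.0], [4.0, 10.0]]  # two frames already shown on screen
generated = extrapolate(history)      # displayed after the newest frame
print(generated)         # [6.0, 10.0]
print(fits_budget(60))   # True: a 60 fps frame takes about 16.7 ms
print(fits_budget(200))  # False: a 200 fps frame takes only 5 ms
```

Because `extrapolate` only reads frames that have already been displayed, nothing needs to be held back while the new frame is made.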

There will always be some input lag with frame generation, interpolated or extrapolated, because the AI-created frames will never have exact input changes, just estimated ones. So those frames will always feel a little bit 'wrong' but, as mentioned before, they exist so fleetingly that you're unlikely to really notice.

Frame extrapolation is the natural evolution for DLSS, FSR, and XeSS to take, and this work by Intel and the University of California shows that we're probably not far off seeing it in the wild. With all three GPU companies on the verge of releasing new chips (Intel has already announced Battlemage), I suspect those launches will be joined or rapidly followed by versions of DLSS and FSR that use AI to extrapolate motion and new frames.

We all want next-gen GPUs to have more shaders, cache, and bandwidth for games, but we're probably nearing a bit of a plateau in that respect. Graphics cards of the near future will be leveraging neural networks ever more for upscaling and frame generation, to improve performance. If you can't tell that they're being used, though, then I guess it doesn't matter how those pixels are being made.
