I've shot thousands of hours of video over the years of my career and I can tell you that it takes lots of preparation, work, and energy. I can also tell you that if you use an AI avatar video generator like HeyGen, it takes almost none of the above, and that scares the heck out of me.

With the advent of high-quality generative video, these AI video avatars are popping up everywhere. I haven't paid much attention, mostly because I like being on camera and am happy to do it for TV and social video. Even so, I know not everyone loves the spotlight, and some people would happily hand the duties over to an avatar. When I got a glimpse of the apparent quality of HeyGen's avatars, I was intrigued enough to give it a try. Now I honestly wish I hadn't.

HeyGen, which you can use on mobile or desktop, is a simple and powerful platform for creating AI avatars that can, based on scripts you provide, speak to the camera for you. They're useful for video presentations, social media, interactive avatars, training videos, and essentially anything where an engaging human face might help sell the topic or information.

HeyGen lets you create digital twins that can appear in relatively static videos or ones in which the other you is on the move. For my experience, I chose the 'Still' option.

Setting up another me

There are some rules for creating your avatar and I think following them as I did may have resulted in the slightly off-putting quality of my digital twin.

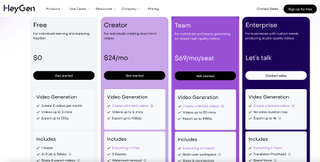

HeyGen recommends you start the process by shooting a video of yourself using either a professional camera or a high-quality smartphone, and the video should be at least 1080p. If you use the free version as I did, you'll note that the final videos are only 720p. Upgrade later and you can start producing full HD video avatars (more on the pricing structure later).

There are other bits of guidance, like using a "nice background" and avoiding "harsh shadows" and background noise, plus a few that are key to selling the digital twin version of you. HeyGen asked that I look directly (but not creepily, I assume) at the camera, make normal (open to interpretation) gestures below chest level, and take pauses between sentences. The last bit is actually good advice for making real videos. I have a habit of speaking in a stream of consciousness and forgetting to pause and create obvious soundbites for editing.

Here, though, the pauses are not about what you're saying, at least for the training video. They seem to be about teaching the system how to manage your twin's face and mouth when you're talking and when you're not.

In any case, I could say anything I wanted to the camera as long as it was for at least 2 minutes. More video will help with the quality of new videos featuring your avatar.

Training to be me

I set up my iPhone 16 Pro Max and a couple of lights and filmed myself in my home office for 2 minutes speaking about nonsense, all the while making sure to take 1-second pauses and to keep my gestures from being too wild. After I AirDropped it to my MacBook Air, I uploaded the video. It was at this point that it became clear that, as a non-paying user, I was handing over virtually all video rights to HeyGen. Not optimal at all, but I was not about to start paying $24 a month for the basic plan just to regain control of my image.

The HeyGen system took considerable time to ingest the video and prepare my digital twin. Once it was ready, I was able to create my first 3-minute video. Paying customers can create 5-minute videos or longer, depending on which service tier they choose. Paying also grants access to faster video processing.

To create a video, I selected the video format: portrait or landscape. I shot my training video in portrait but that did not seem to matter. I also had to provide a script that I could type or paste into a field that accepts a maximum of 2000 characters.

For someone who writes for a living, I struggled with the script, finally settling on a brief soliloquy from Hamlet. After checking the script length, the system went to work and slowly generated my first HeyGen Digital Twin video. I must've accidentally kept some blank spaces at the end of my script because about half of it is the digital me silently vamping for the cameras. It's unsettling.

Nothing is real

I followed this up with a tight TikTok video where I revealed that the video they were watching was not really me. My third video, and the last of my free monthly allotment, was of me telling a joke: "Have you ever played quiet tennis? It's the same as regular tennis but without the racket. Ha ha ha ha ha ha ha ha!" As you might've guessed, the punchline doesn't really land, and because my digital twin never smiles and delivers the "laughter" in a completely humorless way, none of it is even remotely funny.

In all of these videos, I was struck by the audio quality. It's the essence of my voice but also not my voice. It's too robotic and lacking in emotion. At least it's properly synced with the mouth. The visuals, on the other hand, are almost perfect. My digital twin looks just like me or, at least, like a very emotionless version of me who is into Tim Cook keynote-style hand gestures. To be fair, I didn't know what to do with my hands when I originally recorded my training video, worrying that if I didn't control my often wild hand gestures they would look bizarre with my digital twin. I was wrong. This overly controlled twin is the bizarre one.

Just nope

Can an AI version of me tell a joke? Sort of. #heygen @HeyGen_Official pic.twitter.com/ODke9z67VH (October 9, 2024)

On TikTok, someone wrote, "Nobody likes this. Nobody wants this." When I posted the video on Threads, the reactions ranged from shock to dismay. People noticed my "distracting" hand gestures, called it "creepy", and worried that such videos represented the "death of truth."

But here's the thing. While the AI-generated video is concerning, it did not say anything I did not write or copy and paste. Yes, my digital twin is well past uncanny and deep into unnervingly accurate, but at least it's doing my bidding. The concern is this: if you have a good 2-minute video of someone else speaking, could you upload that and then make it say whatever you want? Possibly.

HeyGen gets credit for effectively creating a no-fuss digital twin video generator. It's far from perfect and could be vastly improved if they also had users train it on emotions (the right looks for 'funny', 'sad', 'mad', you get it) and a wider variety of facial expressions (a smile or two would be nice). Until then these digital twins will be our emotionless doubles, waiting to do our video bidding.
