Look at the numbers involved in AI cloud investment and data center buildout, and the stats are astonishing. The Magnificent 7 tech companies – the biggest tech giants in the world – have collectively invested more than $100 billion in data centers and other infrastructure in the last three months alone. The majority of that comes from four of the seven: Microsoft, Meta, Amazon, and Alphabet.

That spending is having an outsized effect on the economy. Jens Nordvig, the founder of Exante Data, believes that total spending on AI could account for 2% of U.S. GDP this year, based on projections and planned projects.

Good for investors, but is it good for capex?

The kinds of eye-watering sums involved are good news for tech investors, shareholders in those Magnificent 7 firms, and plenty of others. The people leading those companies are making it clear they think it’s necessary. “It’s essential infrastructure,” said Jensen Huang, in Nvidia’s Q1 earnings call in May. “We’re clearly in the beginning of the buildout of this infrastructure.” But the massive interest in data centers is having other knock-on effects beyond making big tech companies even bigger. It’s reshaping how we think about the sectors and components that make those data centers work.

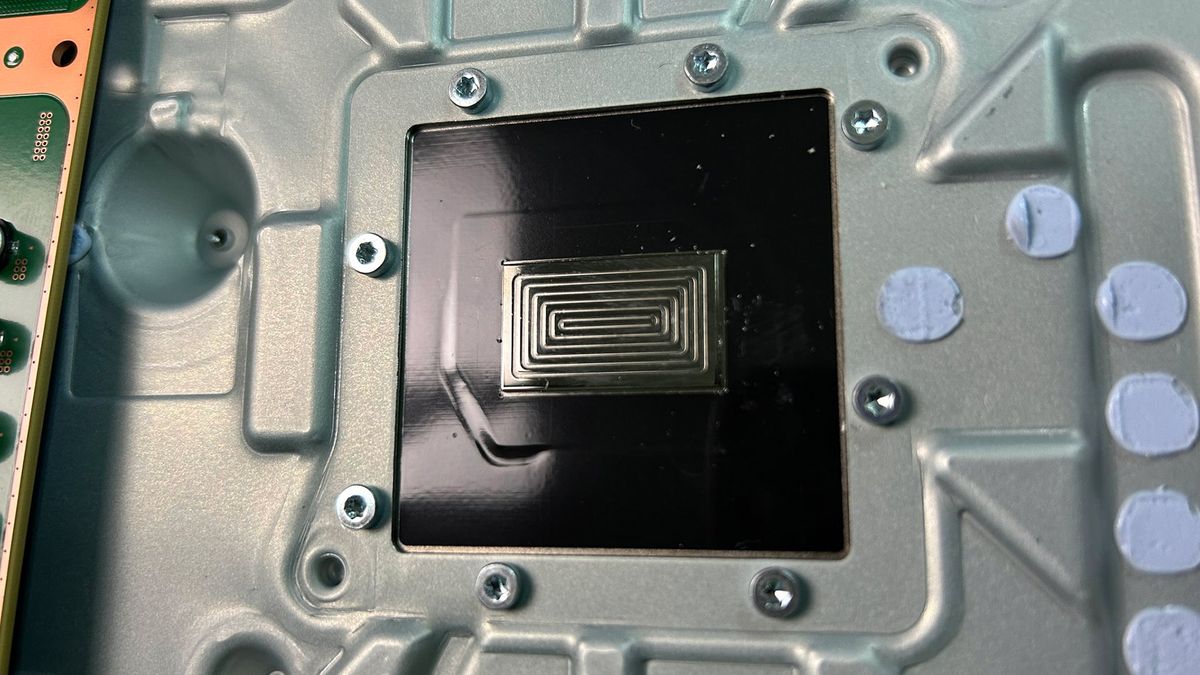

“The central problem today in AI is compute power, and the energy required is getting out of hand,” says Subramanian Iyer, distinguished professor at the Henry Samueli School of Engineering at UCLA, in an interview with Tom's Hardware Premium. Much has been written about the energy impact of these large data centers, with some companies even starting to consider small modular reactors to supply them with nuclear power. “That tells you how serious the power problem is,” Iyer says.

Google, for example, raised its 2025 capital expenditure budget to $85 billion from $75 billion because of investments in servers and data center construction, with further acceleration expected in 2026. Google’s monthly token processing also doubled from 480 trillion in May to over 980 trillion. (A little over a year earlier, the number of tokens Google processed was just 1% of that.) All of those tokens need processing. And that processing happens on hardware. Jefferies estimates that Google’s 980 trillion token compute is close to 200 million H100s operating 24 hours a day, seven days a week.
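To put those figures in proportion, a quick back-of-the-envelope calculation helps. The sketch below uses only the numbers quoted above; the variable names are illustrative, and it assumes that "1% of that" refers to the 980 trillion figure.

```python
# Back-of-the-envelope arithmetic using only figures quoted in the article.
capex_old_bn = 75.0    # Google's earlier 2025 capex budget, in $ billions
capex_new_bn = 85.0    # raised 2025 capex budget, in $ billions

tokens_may_tn = 480.0  # monthly tokens processed in May, in trillions
tokens_now_tn = 980.0  # latest monthly figure ("over 980 trillion")

capex_increase_pct = (capex_new_bn - capex_old_bn) / capex_old_bn * 100
token_growth_factor = tokens_now_tn / tokens_may_tn

# Assumption: "1% of that" refers to the 980 trillion figure.
tokens_year_earlier_tn = tokens_now_tn * 0.01

print(f"Capex increase: {capex_increase_pct:.1f}%")
print(f"Token growth since May: {token_growth_factor:.2f}x")
print(f"Implied monthly tokens a year earlier: ~{tokens_year_earlier_tn:.1f} trillion")
```

On those inputs, the capex budget rose by roughly 13%, while monthly token volume roughly doubled over a much shorter period.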

It all adds up to significant expenditure. Moore’s law isn’t completely dead, argues Iyer. But it’s changing. “Transistors are still scaling, but they're no longer getting cheaper,” he says. “In fact, they're getting more expensive.”

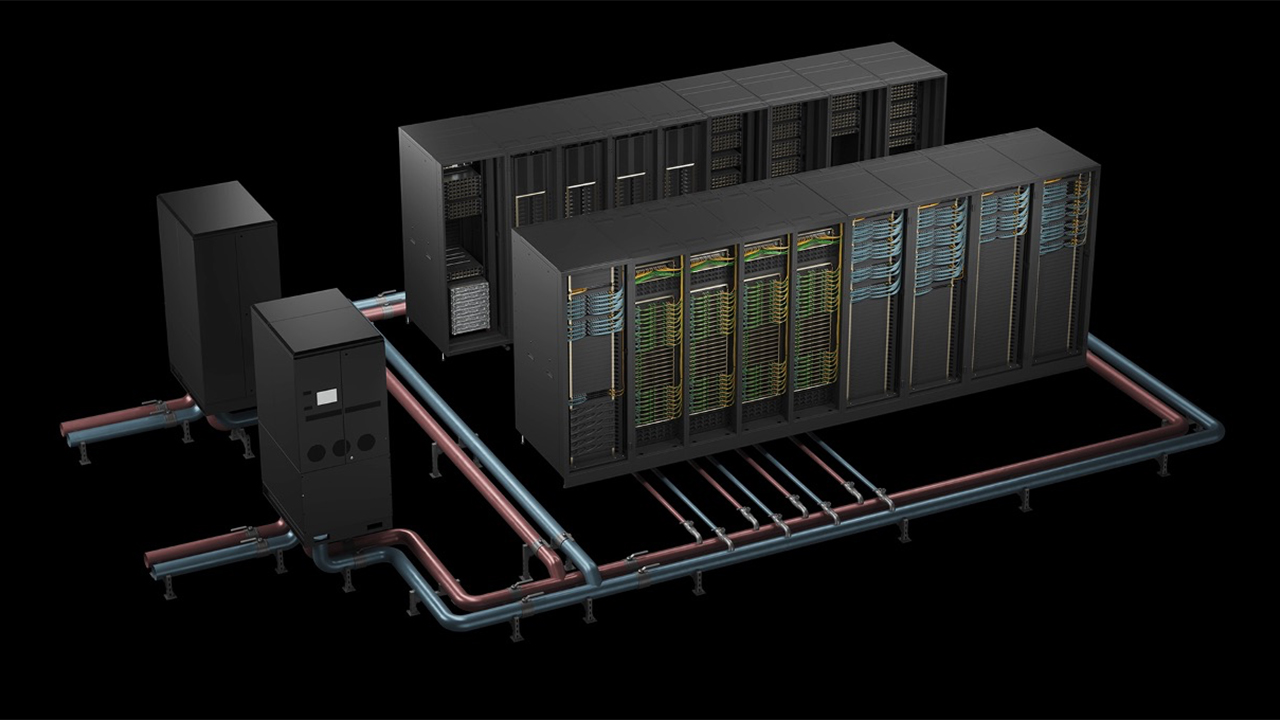

Data centers are changing

What data centers are used for is changing. Unlike their traditional predecessors, they now rely heavily on advanced GPUs, specialized networking, and high-powered cooling, meaning their bill of materials (BOM) has bloated. Estimates put the cost of a fully equipped AI data center at around $10 million. Power and cooling systems account for roughly a third of that, as do servers and IT equipment, while other key categories include network (15%) and storage (10%).
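The split described above can be turned into rough dollar amounts. This is a minimal sketch using only the quoted shares; the "other" bucket is a hypothetical catch-all for whatever the quoted categories leave unaccounted for, and "roughly a third" is treated as exactly one third.

```python
# Rough cost split for a ~$10 million AI data center, per the shares quoted above.
TOTAL_COST_USD = 10_000_000

shares = {
    "power_and_cooling": 1 / 3,  # "roughly a third"
    "servers_and_it": 1 / 3,     # "roughly a third"
    "network": 0.15,
    "storage": 0.10,
}
# Assumption: the remainder is lumped into a hypothetical "other" bucket.
shares["other"] = 1.0 - sum(shares.values())

breakdown = {name: share * TOTAL_COST_USD for name, share in shares.items()}

for name, cost in breakdown.items():
    print(f"{name:<18} ${cost:>12,.0f}  ({shares[name]:.1%})")
```

Under these assumptions, the quoted categories cover a little over 90% of the total, leaving around 8% for everything else.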

All of those categories are being squeezed by inflation and surging hardware requirements. And the $10 million figure applies only to smaller, enterprise-focused setups: hyperscale facilities of the type that the Trump administration and governments around the world are looking at run into the billions of dollars per campus.

The underlying cost of components is also steadily rising. Average material costs increased by 3% and labor by 4% for key data center hardware over the past year, with concrete and copper cable among the biggest risers, according to Turner & Townsend. The smaller but still essential elements like power delivery, printed circuit boards, and advanced packaging are also rising in price thanks to chronic bottlenecks, especially for the high-end AI chips that require stacking and new thermal approaches.

Semiconductor costs used to drop reliably with each new process node, but that’s no longer the case as manufacturing becomes more complex and increased global demand squeezes supply. TSMC is likely to raise the price of advanced nodes by over 15% in 2025, according to reports, passing on costs to buyers. It all means that every new data center costs more money than it used to.

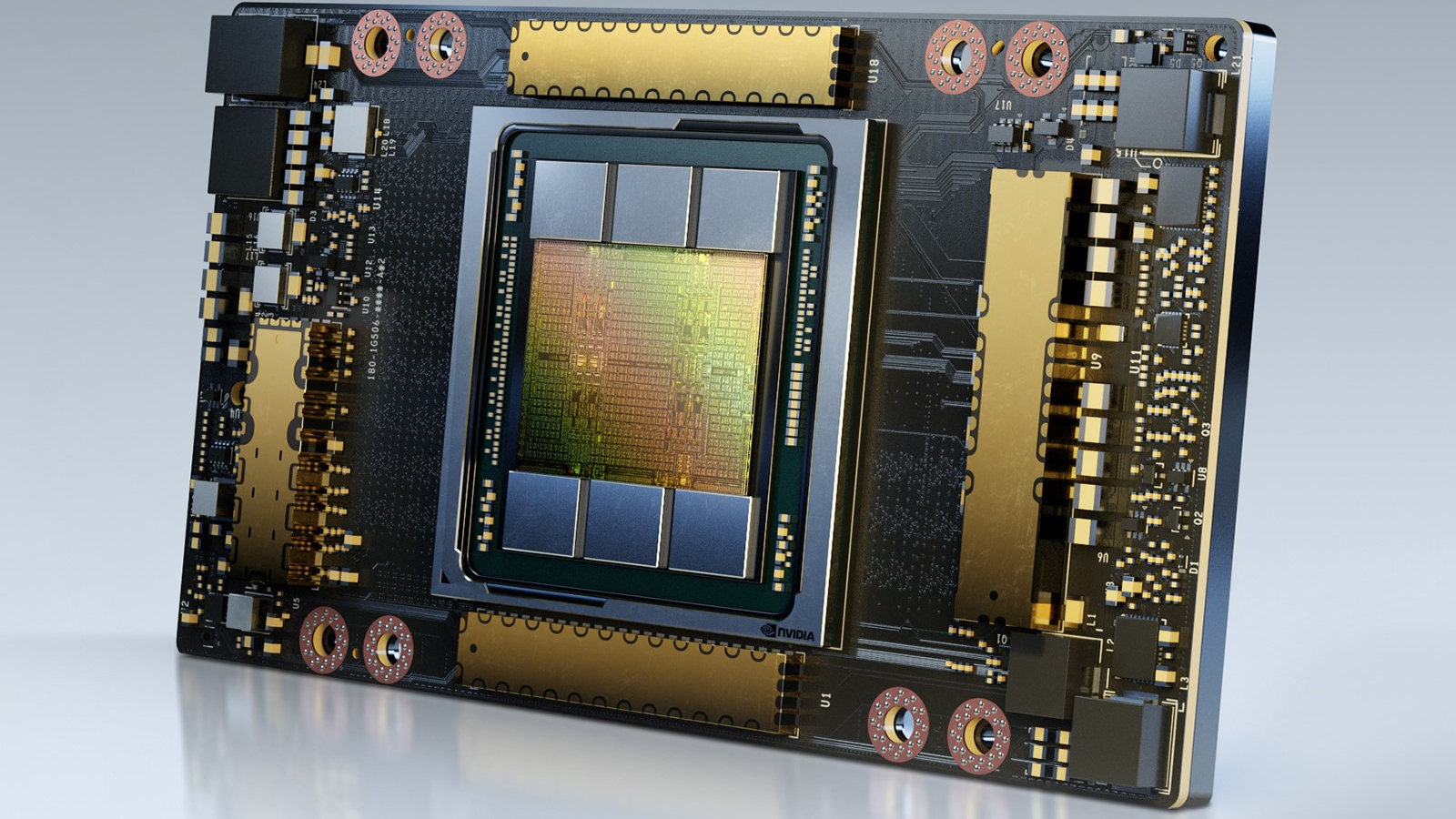

When they were launched in 2020, Nvidia's then-top-tier DGX A100 servers cost $199,000. Prior reporting from Tom’s Hardware suggests analysts believe the GB200 server racks will cost $3 million. There's an argument that the price hike is down to rising manufacturing costs, with chip fabs turning into gigaprojects, like TSMC's $65 billion Arizona complex. In part, the cost of these large-scale efforts is so great because the hardware behind them can be comparatively wasteful. “If you spend a megawatt of power for a data center,” says Iyer, “the actual work you’re getting is only about a third of that. The rest of it is pretty much all overhead.”
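As a rough illustration of the two comparisons above, the sketch below works out the price gap and Iyer's overhead estimate. It uses only the quoted figures, treats "about a third" as exactly one third, and the variable names are illustrative. Note that it compares a single server with a full rack, so the ratio is not like-for-like.

```python
DGX_A100_PRICE_USD = 199_000      # launch price in 2020, per the article
GB200_RACK_PRICE_USD = 3_000_000  # analyst estimate cited above

# A single server versus a full rack, so not a like-for-like comparison.
price_ratio = GB200_RACK_PRICE_USD / DGX_A100_PRICE_USD

FACILITY_POWER_KW = 1_000         # "a megawatt of power"
USEFUL_FRACTION = 1 / 3           # Iyer: "only about a third"
useful_kw = FACILITY_POWER_KW * USEFUL_FRACTION
overhead_kw = FACILITY_POWER_KW - useful_kw

print(f"GB200 rack vs DGX A100 server: ~{price_ratio:.1f}x")
print(f"Useful work: ~{useful_kw:.0f} kW, overhead: ~{overhead_kw:.0f} kW")
```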

Those giant fab complexes cost as much money to equip as they do to build. Buyers are absorbing the cost of the EUV machines used to make the 2nm and 3nm chips populating data centers. A single fab might have dozens of those machines – and that’s before considering the less advanced, but not significantly less expensive, tools for wafer etching, deposition, and inspection. A single high-end EUV lithography tool from ASML can reportedly cost $400 million.

Big tech’s intense AI buildout has forced even the world’s leading chip manufacturers, like TSMC, to invest at an unprecedented scale. TSMC's Arizona cluster, which encompasses three advanced fabs, shows the scale at which companies are operating. Elsewhere, Nvidia expects that up to $1 trillion will be spent globally upgrading data centers for AI workloads by 2028, further underlining the size of the transformation.

Bigger tasks, bigger bills

One reason for the bigger bill is that the purpose of those data centers has changed, and the amount of work they are being asked to do has increased. But the cost is also rising because the hardware requirements for those cloud servers and data centers have altered. Big tech capex keeps climbing because AI workloads now demand the bleeding-edge node – a shift in recent years driven by the rise of generative AI.

Silicon destined for servers was once able to lag the chips put into smartphones by a process generation or two, but is now “[on par] with the bleeding edge,” said CJ Muse, an analyst specializing in semiconductors for Cantor Fitzgerald, in an interview with Tom's Hardware Premium. That forces data center operators onto the most expensive wafers to cram in as many transistors, and as much compute per watt, as possible. All that comes with a hefty price tag. “A bleeding-edge 2nm fab at TSMC, for every 1,000 wafer starts at about $425 million, and so that adds up pretty quickly,” says Muse.
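Muse's figure shows how quickly the bill grows with capacity. The sketch below is illustrative only: it assumes cost scales linearly with wafer starts, the capacities are hypothetical values chosen for the example, and the quote does not specify the period over which wafer starts are counted.

```python
COST_PER_1000_WAFER_STARTS_USD = 425_000_000  # Muse's figure for a 2nm TSMC fab


def fab_capex_usd(wafer_starts: int) -> float:
    """Estimate fab cost, assuming it scales linearly with wafer-start capacity."""
    return wafer_starts / 1_000 * COST_PER_1000_WAFER_STARTS_USD


# Hypothetical capacities, chosen only for illustration.
for capacity in (10_000, 20_000, 50_000):
    cost_bn = fab_capex_usd(capacity) / 1e9
    print(f"{capacity:>6,} wafer starts -> ${cost_bn:.2f} billion")
```

On that linear assumption, a hypothetical 20,000-wafer-start fab would come to about $8.5 billion.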

The race to be at the bleeding edge creates a domino effect. State-of-the-art processors are pointless if starved of data, making high-bandwidth memory (HBM) vital. But now memory is facing its own pressures on supply and cost. “From now on, the HBM segment should face a test of how HBM suppliers can manage supply and protect prices as their technology gap narrows and real competition begins,” said Jongwook Lee, a team leader at Samsung Securities, in a research report.

Lee and his colleagues foresee a future where the HBM market could split into ‘new’ product segments like HBM4, the higher-bandwidth, more luxe standard of memory, which would continue to enjoy a premium, and ‘old’ product segments, which would require discounts to remain competitive.

HBM, DRAM, and other factors further push prices

HBM manufacturing is vastly more complicated and supply-constrained than standard DRAM. With Samsung, SK Hynix, and Micron as the only three major suppliers, HBM can be especially vulnerable to supply disruptions or geopolitical shocks. Demand regularly exceeds supply, and lead times for HBM often top half a year, especially with advanced packaging capacity being booked years in advance for longstanding customers like Nvidia and AMD. It all means that intense technical and economic headwinds in HBM, and in the advanced packaging ecosystem it depends on, weigh heavily on the speed, cost, and security of the world’s AI data center buildout.

Even global competition for wafer fabrication equipment (WFE) is heating up. Chinese imports of WFE grew 14% year-over-year in June 2025, according to Jefferies, breaking a previous downward trend. June was the first month of positive growth in 2025, led by a surge in demand for specific machinery, including etching and deposition tools, which saw growth of 65% and 28% respectively.

Analysts at Jefferies believe that the unexpected growth came from China’s DRAM sector, and particularly CXMT, a major producer that has, to date, avoided the US sanctions list of entities not allowed to import chip tech into China. The US-China tech rivalry has led to stringent export controls and sanction lists that continue to keep Chinese chipmakers from the critical semiconductor manufacturing technology that could alleviate some of the supply pressures. That’s unlikely to change as Donald Trump continues to pursue an America First strategy – but it could backfire if Trump overplays his hand. China dominates the processing of rare earth elements like neodymium, which are critical for the high-performance components used in AI chips and data center hardware, and sourcing them could become trickier if any one party chooses to weaponize access as part of trade negotiations. The political and regulatory headwinds are increasing cost pressures and investment risks, shaping the competitive landscape in unpredictable ways.

Nvidia's stranglehold, and how companies are fighting back

The problem every tech company faces is that they’re overly reliant on Nvidia at present. As a result, big cloud providers are weighing up whether to develop their own custom ASICs. Broadcom alone expects AI-specific custom silicon and networking sales to reach 42% of its revenue by 2026, according to Muse.

Major hyperscalers like Google, Amazon, and Meta are all actively rolling out custom ASIC chips, creating substantial opportunities for both established vendors and new entrants. Broadcom is booming: analysts say the firm’s custom ASIC and networking revenue for AI is expected to be around $18 billion by 2026, much of it driven by custom chips for hyperscale inference and high-bandwidth AI networking. The demand isn’t just coming from chips for inference. Networking ASICs, interconnect switches, and edge/IoT devices are all seeing surging demand.

Yet Muse points out that building successful custom chips is hard. “Google had three different teams building the TPU, and one was successful, the other two were not,” he says. The answer to that is for companies to try and develop their own ASIC strategy while also recognizing they need to go into the market and buy more GPUs.

That in turn is pushing up prices, in large part because companies that once kept themselves to themselves are now competing with one another. “I think the interesting change statement is that Meta, Amazon, Google and Microsoft all had fairly defined swimming lanes,” says Muse.

“Obviously there’s competition in offering cloud services, but their business models didn’t really overlap, and they were all doing extraordinarily well,” he explains. That’s since changed. “Now they’re all competing head-to-head, and so there are going to be clear winners and losers.” That head-to-head competition is driving what Muse calls “this mad race and massive investments.”

The outcome will not only determine the next leader in tech, but could also redraw the global map of technological power for a generation.
