Noisy, but not that noisy

Benchmark may help us understand how quantum computers can operate with low error.

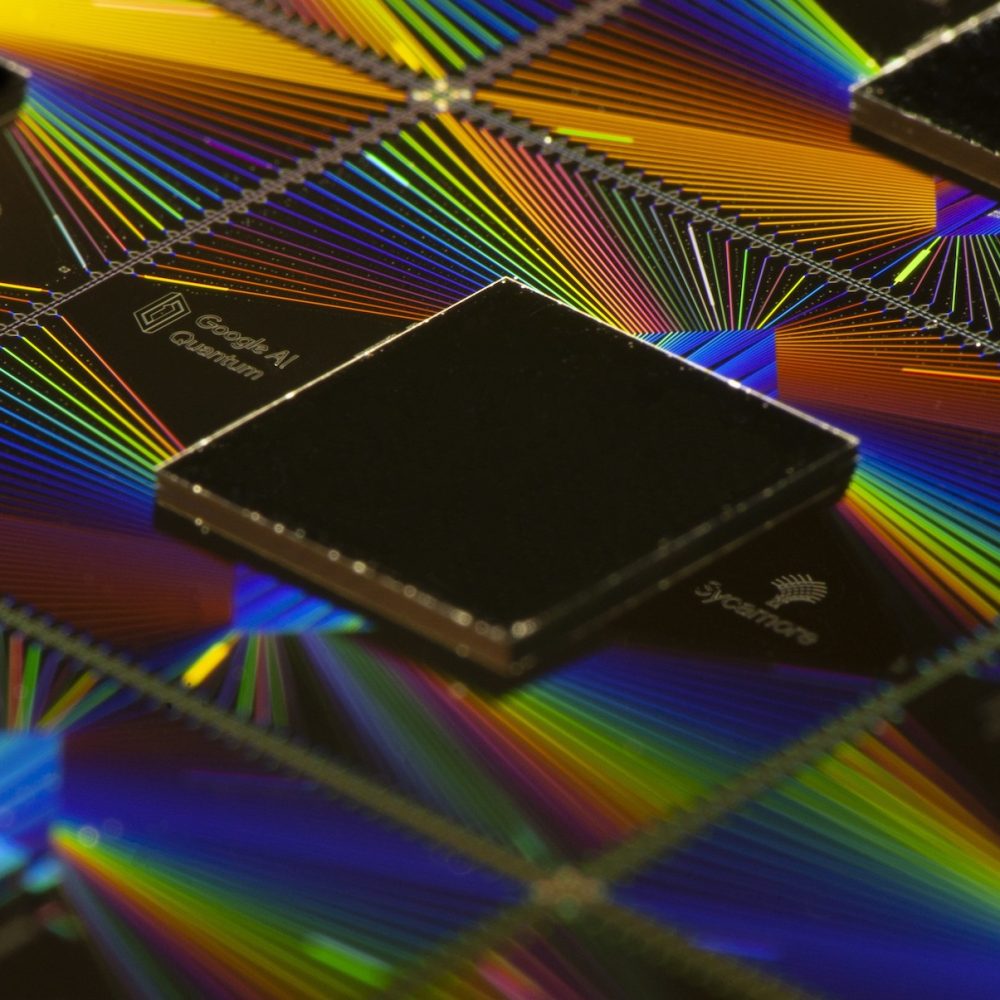

Google's Sycamore processor. Credit: Google

Back in 2019, Google made waves by claiming it had achieved what has been called "quantum supremacy"—the ability of a quantum computer to perform operations that would take a wildly impractical amount of time to simulate on standard computing hardware. That claim proved to be controversial, in that the operations were little more than a benchmark that involved getting the quantum computer to behave like a quantum computer; separately, improved ideas about how to perform the simulation on a supercomputer cut the time required down significantly.

But Google is back with a new exploration of the benchmark, described in a paper published in Nature on Wednesday. The paper uses the benchmark to identify what it calls a phase transition in the performance of the company's quantum processor, then uses that transition to identify conditions where the processor can operate with low noise. Taking advantage of that, the researchers again show that, even giving classical hardware every potential advantage, it would take a supercomputer a dozen years to simulate the computation.

Cross entropy benchmarking

The benchmark in question involves the performance of what are called quantum random circuits, which involve performing a set of operations on qubits and letting the state of the system evolve over time, so that the output depends heavily on the stochastic nature of measurement outcomes in quantum mechanics. Each qubit will have a probability of producing one of two results, but unless that probability is one, there's no way of knowing which of the results you'll actually get. As a result, the output of the operations will be a string of truly random bits.

If enough qubits are involved in the operations, then it becomes increasingly difficult to simulate the performance of a quantum random circuit on classical hardware. That difficulty is what Google originally used to claim quantum supremacy.

The big challenge with running quantum random circuits on today's hardware is the inevitability of errors. And there's a specific approach, called cross-entropy benchmarking, that relates the performance of quantum random circuits to the overall fidelity of the hardware (meaning its ability to perform error-free operations).
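To make the link between random-circuit output and fidelity concrete, here is a minimal sketch of the commonly used linear form of the cross-entropy estimator, which multiplies the average ideal probability of the observed bit strings by the number of possible outputs and subtracts one. Everything in it is an assumption for illustration: the ideal output distribution is stood in for by a random complex vector rather than a real circuit, noise is modeled as a simple mix with uniformly random bits, and the function names are hypothetical. It is not the analysis pipeline used in the paper.

```python
import numpy as np

def random_ideal_probs(n_qubits, rng):
    """Stand-in for a random circuit's ideal output distribution (assumption):
    squared magnitudes of a random complex Gaussian vector, normalized."""
    dim = 2 ** n_qubits
    amps = rng.normal(size=dim) + 1j * rng.normal(size=dim)
    probs = np.abs(amps) ** 2
    return probs / probs.sum()

def sample_noisy(ideal_probs, fidelity, n_samples, rng):
    """Toy noise model (assumption): with probability `fidelity` the hardware
    samples the ideal distribution, otherwise it returns uniform random bits."""
    dim = ideal_probs.size
    mixed = fidelity * ideal_probs + (1.0 - fidelity) / dim
    return rng.choice(dim, size=n_samples, p=mixed)

def linear_xeb(ideal_probs, samples):
    """Linear cross-entropy estimate: dim * mean(ideal probability of each
    observed bit string) - 1."""
    dim = ideal_probs.size
    return dim * ideal_probs[samples].mean() - 1.0

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    probs = random_ideal_probs(14, rng)
    for true_fidelity in (0.0, 0.2, 0.5):
        samples = sample_noisy(probs, true_fidelity, 100_000, rng)
        print(true_fidelity, round(linear_xeb(probs, samples), 3))
```

In this toy model the estimate tracks the fidelity that was put in, which is the property that lets the benchmark serve as a measure of how error-free the hardware is. Note that the estimator needs the ideal probabilities, so it relies on the circuit still being classically simulable.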

Google Principal Scientist Sergio Boixo likened performing quantum random circuits to a race between trying to build the circuit and errors that would destroy it. "In essence, this is a competition between quantum correlations spreading because you're entangling, and random circuits entangle as fast as possible," he told Ars. "We use two qubit gates that entangle as fast as possible. So it's a competition between correlations or entanglement growing as fast as you want. On the other hand, noise is doing the opposite. Noise is killing correlations, it's killing the growth of correlations. So these are the two tendencies."

The focus of the paper is using the cross-entropy benchmark to explore the errors that occur on the company's latest generation of Sycamore chip, and using that to identify the transition point between situations where errors dominate and what the paper terms a "low noise regime," where the probability of errors is minimized and entanglement wins the race. The researchers likened this to a phase transition between two states.

Low noise performance

The researchers used a number of methods to identify the location of this phase transition, including numerical estimates of the system's behavior and experiments using the Sycamore processor. Boixo explained that the transition point is related to the errors per cycle, with each cycle involving performing an operation on all of the qubits involved. So, the total number of qubits being used influences the location of the transition, since more qubits means more operations to perform. But so does the overall error rate on the processor.

If you want to operate in the low noise regime, then you have to limit the number of qubits involved (which has the side effect of making things easier to simulate on classical hardware). The only way to add more qubits is to lower the error rate. While the Sycamore processor itself had a well-understood minimal error rate, Google could artificially increase that error rate and then gradually lower it to explore Sycamore's behavior at the transition point.
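The trade-off between qubit count and error rate can be sketched with some simple arithmetic. The example below assumes a crude model in which every qubit independently suffers an error with a fixed probability in each cycle, so errors per cycle is just the product of the two, and overall fidelity is the chance that nothing went wrong anywhere. The threshold value and the error rates are hypothetical placeholders, not figures from the paper, and the function names are made up for illustration.

```python
import math

def errors_per_cycle(n_qubits, error_per_qubit_per_cycle):
    """Expected number of errors in one cycle, assuming independent errors."""
    return n_qubits * error_per_qubit_per_cycle

def estimated_fidelity(n_qubits, n_cycles, error_per_qubit_per_cycle):
    """Probability that no error occurs on any qubit in any cycle
    (a simple product-of-success-probabilities approximation)."""
    return (1.0 - error_per_qubit_per_cycle) ** (n_qubits * n_cycles)

def max_qubits_in_low_noise(error_per_qubit_per_cycle, threshold_errors_per_cycle):
    """Largest qubit count whose errors per cycle stay at or below the threshold.
    The threshold is a hypothetical input; the paper locates it empirically."""
    return math.floor(threshold_errors_per_cycle / error_per_qubit_per_cycle)

if __name__ == "__main__":
    threshold = 0.47  # hypothetical errors-per-cycle value at the transition
    for eps in (0.008, 0.004):  # hypothetical per-qubit, per-cycle error rates
        n_max = max_qubits_in_low_noise(eps, threshold)
        print(eps, n_max, estimated_fidelity(n_max, 20, eps))
```

Under these assumptions, halving the error rate roughly doubles the number of qubits that fit under the threshold, which matches the point that lowering the error rate is the only way to add qubits while staying in the low noise regime.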

The low noise regime wasn't error free; each operation still has the potential for error, and qubits will sometimes lose their state even when sitting around doing nothing. But this error rate could be estimated using the cross-entropy benchmark to explore the system's overall fidelity. That wasn't the case beyond the transition point, where errors occurred quickly enough that they would interrupt the entanglement process.

When this occurs, the result is often two separate, smaller entangled systems, each of which is subject to the Sycamore chip's base error rates. The researchers simulated this by creating two distinct clusters of entangled qubits that could be entangled with each other by a single operation, allowing them to turn entanglement on and off at will. They showed that this behavior allowed a classical computer to spoof the overall behavior by breaking the computation up into two manageable chunks.
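A rough way to see why splitting the system helps a classical computer is to count the memory needed to store the quantum state. The sketch below assumes a brute-force state-vector simulation with one 16-byte complex number per amplitude, which is an illustrative choice and not the simulation method behind the paper's estimates. The 40-qubit example size is likewise hypothetical.

```python
BYTES_PER_AMPLITUDE = 16  # one double-precision complex number

def statevector_bytes(n_qubits):
    """Memory needed to store every amplitude of an n-qubit state."""
    return BYTES_PER_AMPLITUDE * 2 ** n_qubits

def split_statevector_bytes(n_qubits_a, n_qubits_b):
    """Memory needed when two clusters are not entangled with each other,
    so each can be stored (and simulated) on its own."""
    return statevector_bytes(n_qubits_a) + statevector_bytes(n_qubits_b)

if __name__ == "__main__":
    n = 40  # hypothetical system size
    whole = statevector_bytes(n)
    halves = split_statevector_bytes(n // 2, n // 2)
    print(f"one {n}-qubit system: {whole / 2**30:.0f} GiB")
    print(f"two {n // 2}-qubit systems: {halves / 2**20:.0f} MiB")
```

Because the cost grows exponentially with the number of entangled qubits, two half-sized systems are far cheaper than one full-sized system, which is what makes the two-chunk spoofing approach manageable.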

Ultimately, they used their characterization of the phase transition to identify the maximum number of qubits they could keep in the low noise regime given the Sycamore processor's base error rate and then performed a million random circuits on them. While this is relatively easy to do on quantum hardware, simulating it would take roughly 10,000 years on an existing supercomputer (the Frontier system), even assuming that we could build one without bandwidth constraints. Allowing all of the system's storage to operate as secondary memory cuts the estimate down to 12 years.

What does this tell us?

Boixo emphasized that the value of the work isn't really based on the value of performing random quantum circuits. Truly random bit strings might be useful in some contexts, but he emphasized that the real benefit here is a better understanding of the noise level that can be tolerated in quantum algorithms more generally. Since this benchmark is designed to make it as easy as possible to outperform classical computations, you would need to beat standard computers here to have any hope of beating them to the answer for more complicated problems.

"Before you can do any other application, you need to win on this benchmark," Boixo said. "If you are not winning on this benchmark, then you're not winning on any other benchmark. This is the easiest thing for a noisy quantum computer compared to a supercomputer."

Knowing how to identify this phase transition, he suggested, will also be helpful for anyone trying to run useful computations on today's processors. "As we define the phase, it opens the possibility for finding applications in that phase on noisy quantum computers, where they will outperform classical computers," Boixo said.

Implicit in this argument is an indication of why Google has focused on iterating on a single processor design even as many of its competitors have been pushing to increase qubit counts rapidly. If this benchmark indicates that you can't get all of Sycamore's qubits involved in the simplest low-noise regime calculation, then it's not clear whether there's a lot of value in increasing the qubit count. And the only way to change that is to lower the base error rate of the processor, so that's where the company's focus has been.

All of that, however, assumes that you hope to run useful calculations on today's noisy hardware qubits. The alternative is to use error-corrected logical qubits, which will require major increases in qubit count. But Google has been seeing similar limitations due to Sycamore's base error rate in tests that used it to host an error-corrected logical qubit, something we hope to return to in future coverage.

Nature, 2024. DOI: 10.1038/s41586-024-07998-6

John is Ars Technica's science editor. He has a Bachelor of Arts in Biochemistry from Columbia University, and a Ph.D. in Molecular and Cell Biology from the University of California, Berkeley. When physically separated from his keyboard, he tends to seek out a bicycle, or a scenic location for communing with his hiking boots.
