TechSpot means tech analysis and advice you can trust.

Most people think of Cisco as a company that links infrastructure elements in data centers and the cloud, not as the first company that comes to mind when discussing GenAI. However, at its recent Partner Summit event, the company made several announcements aimed at changing that perception.

Specifically, Cisco debuted several new servers equipped with Nvidia GPUs and AMD CPUs and targeted at AI workloads; a new high-speed network switch optimized for interconnecting multiple AI-focused servers; and several preconfigured PODs of compute and network infrastructure designed for specific applications.

On the server side, Cisco's new UCS C885A M8 Server packages up to eight Nvidia H100 or H200 GPUs and AMD Epyc CPUs into a compact rack server capable of everything from model training to fine-tuning. Configured with both Nvidia Ethernet cards and DPUs, the system can function independently or be networked with other servers into a more powerful system.

The new Nexus 9364E-SG2 switch, based on Cisco's latest G200 custom silicon, offers 800G speeds and large memory buffers to enable high-speed, low-latency connections across multiple servers.

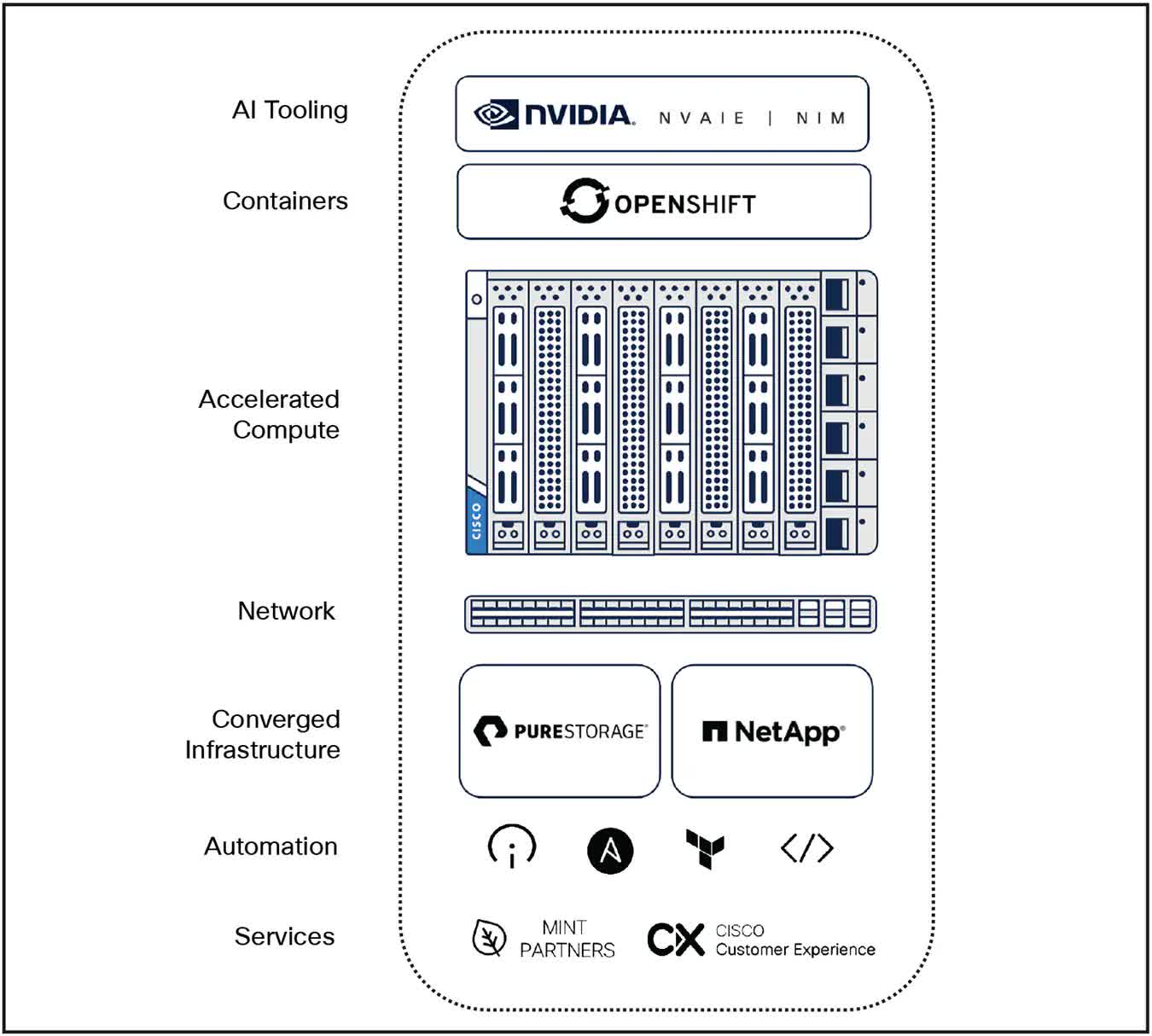

The most interesting new additions are in the form of AI PODs, which are Cisco Validated Designs (CVDs) that combine CPU and GPU compute, storage, and networking along with Nvidia's AI Enterprise platform software. Essentially, they are completely preconfigured infrastructure systems that provide an easier, plug-and-play solution for organizations to launch their AI deployments – something many companies beginning their GenAI efforts need.

Cisco is offering a range of different AI PODs tailored for various industries and applications, helping organizations eliminate some of the guesswork in selecting the infrastructure they need for their specific requirements. Additionally, because they come with Nvidia's software stack, there are several industry-specific applications and software building blocks (e.g., NIMs) that organizations can use to build from. Initially, the PODs are geared more towards AI inferencing than training, but Cisco plans to offer more powerful PODs capable of AI model training over time.

Another key aspect of the new Cisco offerings is a link to its Intersight management and automation platform, providing companies with better device management capabilities and easier integration into their existing infrastructure environments.

The net result is a new set of tools for Cisco and its sales partners to offer to their long-established enterprise customer base.

Realistically, Cisco's new server and compute offerings are unlikely to appeal to big cloud customers who were early purchasers of this type of infrastructure. (Cisco's switches and routers, on the other hand, are key components for hyperscalers.) However, it's becoming increasingly clear that enterprises are interested in building their own AI-capable infrastructure as their GenAI journeys progress. While many AI application workloads will likely continue to exist in the cloud, companies are realizing the need to perform some of this work on-premises.

In particular, because effective AI applications need to be trained or fine-tuned on a company's most valuable (and likely most sensitive) data, many organizations are hesitant to keep that data, and the models based on it, in the cloud.

In that regard, even though Cisco is a bit late in bringing certain elements of its AI-focused infrastructure to market, the timing for its most likely audience could be just right. As Cisco's Jeetu Patel commented during the Day 2 keynote, "Data centers are cool again." This point was further reinforced by the recent TECHnalysis Research survey report, The Intelligent Path Forward: GenAI in the Enterprise, which found that 80% of companies engaged in GenAI work were interested in running some of those applications on-premises.

Ultimately, the projected market growth for on-site data centers presents intriguing new possibilities for Cisco and other traditional enterprise hardware suppliers.

Whether due to data gravity, privacy, governance, or other issues, it now seems clear that while the move to hybrid cloud took nearly a decade, the transition to hybrid AI models that leverage cloud and on-premises resources (not to mention on-device AI applications for PCs and smartphones) will be significantly faster. How the market responds to that rapid evolution will be very interesting to observe.

Bob O'Donnell is the president and chief analyst of TECHnalysis Research, LLC, a market research firm that provides strategic consulting and market research services to the technology industry and professional financial community. You can follow Bob on Twitter @bobodtech.
