China’s AI industry is quietly turning to refurbished and second-hand Nvidia GPUs after fresh curbs on the company’s H20 accelerator left customers scrambling for alternatives. The H20, a cut-down Hopper-based GPU designed specifically to comply with U.S. export restrictions, was meant to keep Nvidia in the Chinese market. But although H20 exports resumed in July, the chip has been sidelined since Chinese regulators raised data security concerns and effectively banned purchases of it.

According to a recent report by Digitimes, the H20’s sidelining has driven surging demand for older A100 and H100 cards, which firms strip down and reconfigure into “low-cost, high-performance” custom inference systems.

Why second-hand silicon works

Inference is less compute-intensive than training. Models being served don’t require full floating-point precision, so workloads can run efficiently on hardware that’s been trimmed or reconfigured. That’s why even an A100, launched back in 2020, remains valuable in some use cases.

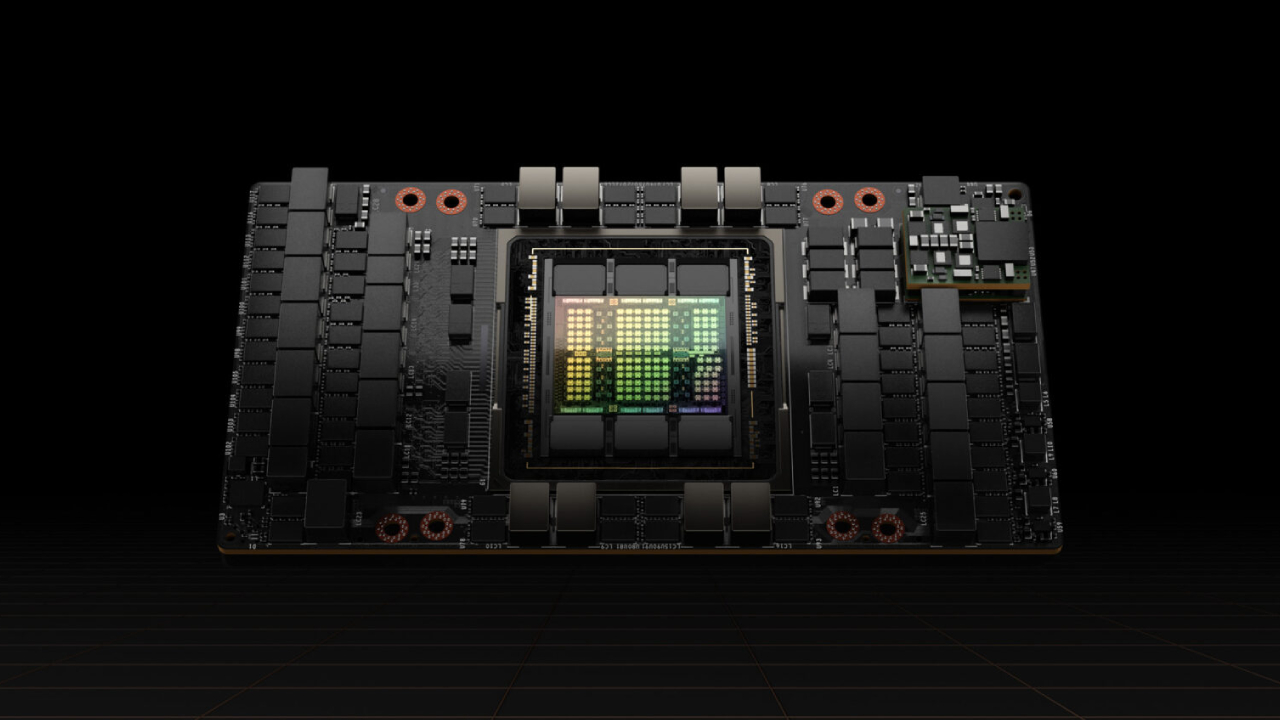

The A100 was built on Nvidia’s Ampere architecture, with up to 80GB of HBM2e memory and roughly 2 TB/s of memory bandwidth. While it lacks Hopper’s peak throughput, it remains highly effective for inference tasks thanks to its large memory pool and mature CUDA software ecosystem. For workloads like chatbots and recommendation engines, developers can still achieve cost-efficient results without cutting-edge silicon.
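To make the memory argument concrete, here is a rough back-of-the-envelope sketch in Python. It uses the 80GB and roughly 2 TB/s figures quoted above, plus two assumptions that are not from the report: a hypothetical 70-billion-parameter model, and the common rule of thumb that single-stream text generation is limited by how fast the weights can be read from memory. It ignores the KV cache, activations, and batching, so treat the output as a ceiling on a simplified model, not a benchmark.

```python
# Back-of-the-envelope estimate, not a benchmark.
# Assumption: generating one token requires reading every weight once,
# so decode speed is capped by memory bandwidth / model size.

A100_MEMORY_GB = 80        # from the article
A100_BANDWIDTH_TB_S = 2.0  # from the article (approximate)
MODEL_PARAMS_BILLIONS = 70  # hypothetical model size, chosen for illustration


def fits_in_memory(params_billions, bytes_per_param, memory_gb):
    """Return True if the weights alone fit (ignores KV cache and activations)."""
    weights_gb = params_billions * bytes_per_param  # 1e9 params * bytes = GB
    return weights_gb <= memory_gb


def max_decode_tokens_per_s(params_billions, bytes_per_param, bandwidth_tb_s):
    """Upper bound on single-stream tokens per second under the assumption above."""
    weights_bytes = params_billions * 1e9 * bytes_per_param
    return bandwidth_tb_s * 1e12 / weights_bytes


if __name__ == "__main__":
    for label, bytes_per_param in [("FP16", 2), ("INT8", 1)]:
        fits = fits_in_memory(MODEL_PARAMS_BILLIONS, bytes_per_param, A100_MEMORY_GB)
        ceiling = max_decode_tokens_per_s(
            MODEL_PARAMS_BILLIONS, bytes_per_param, A100_BANDWIDTH_TB_S
        )
        print(f"{label}: fits on one card = {fits}, ceiling = {ceiling:.1f} tokens/s")
```

Under these assumptions, halving the bytes per parameter both lets the hypothetical model fit on a single 80GB card and doubles the bandwidth-limited ceiling, which is the basic reason reduced precision and a large memory pool matter more for inference than peak compute.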

The H100, launched in 2022, pushes performance significantly higher, with HBM3 memory and up to six times the AI training throughput of an A100. By contrast, the H20 was tuned for inference but cut back so aggressively that it delivers between three and nearly seven times less AI performance than a full H100, and is more than thirty times slower in FP64 supercomputing tasks. In practice, that makes even repurposed A100s a more attractive option for Chinese buyers than a new H20.

With Nvidia’s CUDA ecosystem still unrivaled, older cards remain plug-and-play for developers. And because inference hardware can run around the clock with lower risk of accuracy loss, China’s data centers are evidently happy to pay for refurbished boards even as reliability declines.

Market squeeze for Nvidia

This situation places Nvidia in a strange bind. The company took a $5.5 billion write-down on unsold H20 inventory when Washington introduced licensing requirements for its export to China. Yet, paradoxically, its GPUs remain the catalyst of China’s ongoing AI boom. This is a double-edged sword for Nvidia: Its chips still dominate, but gray market channels risk eroding margins and slowing the adoption of newer architectures.

The gray market is also hugely problematic for Beijing, which is eager for its own competing firms like Huawei and Biren to scale. Every second-hand, stripped-down H100 racked in a Chinese data center is another system not running on Ascend accelerators, which could potentially slow domestic investments.

Ultimately, the flood of recycled GPUs illustrates the unintended consequences of tightening export controls. U.S. policymakers hoped to throttle China’s access to cutting-edge compute, while Beijing has sought to accelerate homegrown silicon adoption. Instead, the result is a recycling economy where yesterday’s A100s and H100s continue to power tomorrow’s AI deployments.

For now, China’s AI industry is making do with pragmatism, stretching the useful life of older Nvidia hardware while waiting for both political and technological roadblocks to clear.

Follow Tom's Hardware on Google News to get our up-to-date news, analysis, and reviews in your feeds. Make sure to click the Follow button.
