AMD this week introduced ROCm 7, the seventh major version of its ROCm (Radeon Open Compute) open-source software stack for accelerated computing. Compared to ROCm 6, the new release substantially improves AI inference performance on existing hardware, adds support for distributed workloads, and expands to Windows and Radeon GPUs. ROCm 7 also adds support for the FP4 and FP6 low-precision formats on the latest Instinct MI350X/MI355X processors.

For client PCs, the biggest change in ROCm 7 is its extension to Windows and Radeon GPUs, which allows both discrete and integrated GPUs to be used for AI workloads, though only on Ryzen-based PCs. Starting in the second half of 2025, developers will be able to build and run AI programs on Ryzen desktops and laptops with Radeon GPUs, which could be a big deal for those who want to run larger LLMs locally.

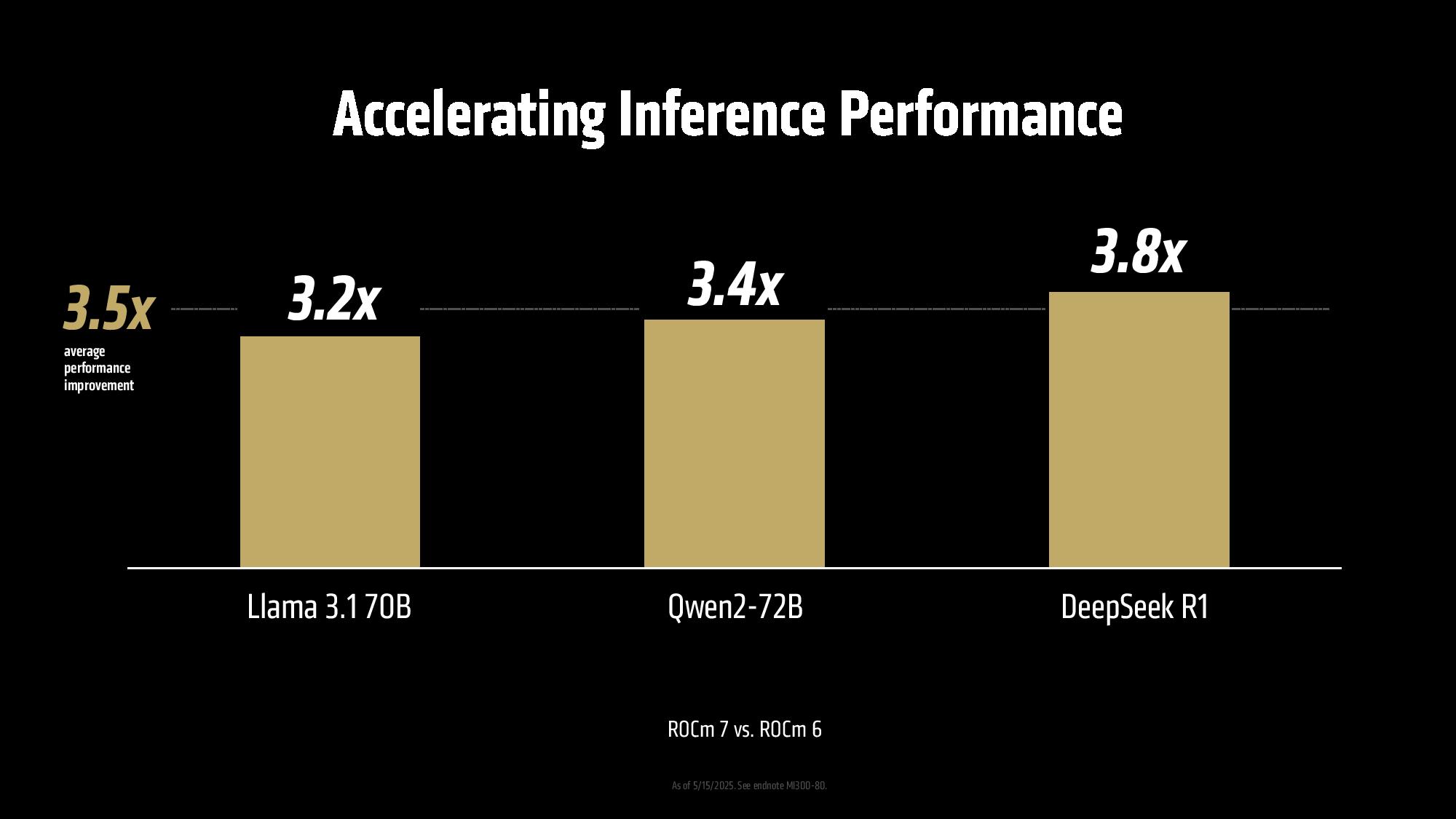

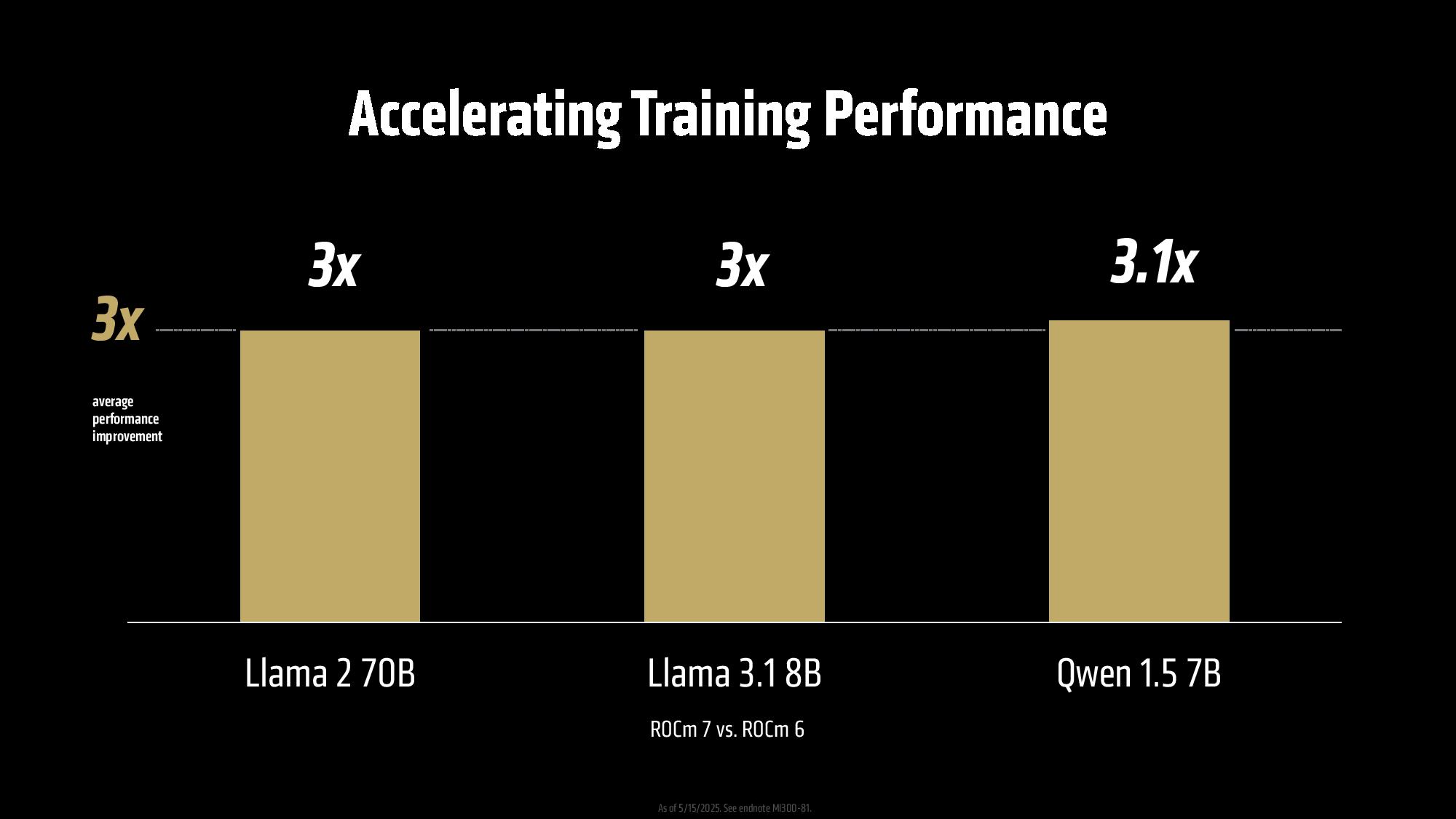

One of the reasons for AMD's weak position in the AI hardware market is its imperfect software, but the situation appears to be improving. According to AMD, the Instinct MI300X running ROCm 7 delivers over 3.5 times the inference performance and 3 times the training throughput of the same hardware running ROCm 6. The company tested an 8-way Instinct MI300X machine running the Llama 3.1 70B, Qwen 72B, and DeepSeek-R1 models at batch sizes ranging from 1 to 256, with the ROCm version as the only difference. AMD attributes the gains to improved GPU utilization and data movement, but it did not provide further details.

The new release also introduces support for distributed inference through integration with open frameworks such as vLLM, SGLang, and llm-d. AMD worked with these partners to build shared components and primitives, allowing the software to scale efficiently across multiple GPUs.

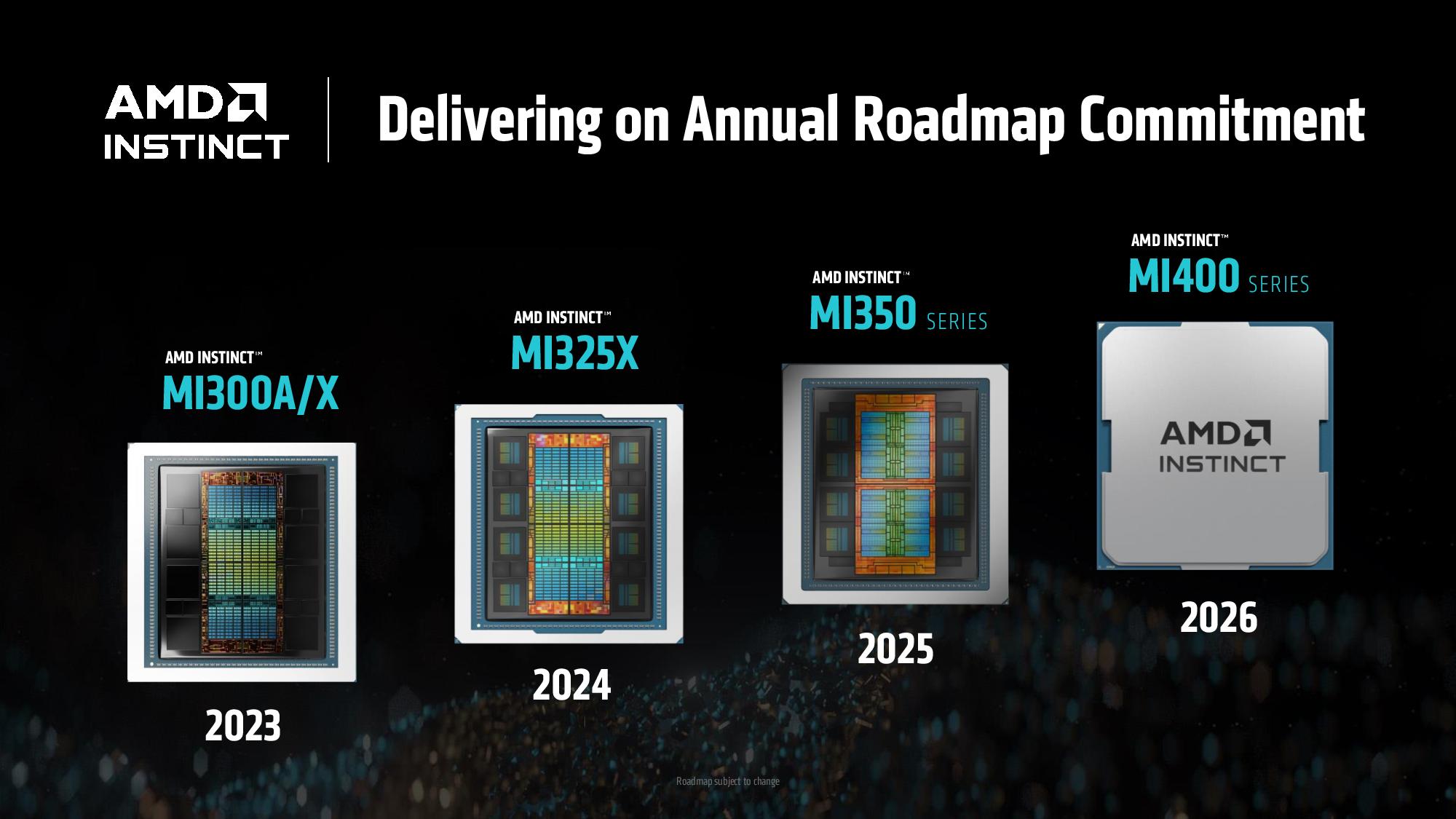

Furthermore, ROCm 7 adds support for lower-precision data types such as FP4 and FP6, which should bring tangible improvements on the company's latest CDNA 4-based Instinct MI350X/MI355X processors, as well as on the upcoming CDNA 5-based Instinct MI400X-series and next-generation Instinct MI500X-series products, due in 2026 and 2027, respectively.
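To see why FP4 and FP6 are so cheap to compute with, it helps to look at how few values they can represent. The sketch below is a minimal Python decoder that assumes the FP4 (E2M1) and FP6 (E2M3 and E3M2) element encodings defined in the Open Compute Project's Microscaling (MX) specification; AMD's announcement does not say which exact encodings ROCm 7 or the MI350X/MI355X use, and the helper names `decode_minifloat` and `representable_values` are hypothetical. In MX formats, each element is also multiplied by a scale shared across a block of elements, which this sketch leaves out.

```python
from typing import List


# Hypothetical helper: decodes a sign/exponent/mantissa minifloat that, like the
# OCP MX FP4/FP6 element formats (an assumption here), has no Inf or NaN codes.
def decode_minifloat(code: int, exp_bits: int, man_bits: int, bias: int) -> float:
    total_bits = 1 + exp_bits + man_bits
    if not 0 <= code < (1 << total_bits):
        raise ValueError(f"code {code} does not fit in {total_bits} bits")
    sign = (code >> (exp_bits + man_bits)) & 1
    exponent = (code >> man_bits) & ((1 << exp_bits) - 1)
    mantissa = code & ((1 << man_bits) - 1)
    fraction = mantissa / (1 << man_bits)
    if exponent == 0:
        # Subnormal: no implicit leading 1, exponent fixed at 1 - bias.
        magnitude = fraction * 2.0 ** (1 - bias)
    else:
        # Normal: implicit leading 1.
        magnitude = (1.0 + fraction) * 2.0 ** (exponent - bias)
    return -magnitude if sign else magnitude


def representable_values(exp_bits: int, man_bits: int, bias: int) -> List[float]:
    """All distinct non-negative values of the format, in ascending order."""
    count = 1 << (1 + exp_bits + man_bits)
    values = {decode_minifloat(c, exp_bits, man_bits, bias) for c in range(count)}
    return sorted(v for v in values if v >= 0)


# Assumed (exp_bits, man_bits, bias), taken from the OCP MX spec, not from AMD.
FORMATS = {
    "FP4 E2M1": (2, 1, 1),
    "FP6 E2M3": (2, 3, 1),
    "FP6 E3M2": (3, 2, 3),
}

if __name__ == "__main__":
    for name, (e, m, b) in FORMATS.items():
        vals = representable_values(e, m, b)
        print(f"{name}: {len(vals)} non-negative values, max {vals[-1]}")
```

Under these assumptions, FP4 E2M1 can express only eight non-negative magnitudes (0, 0.5, 1, 1.5, 2, 3, 4, and 6), and the two FP6 variants 32 each, topping out at 7.5 and 28. That tiny range is why such formats are paired with per-block scaling factors in practice.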

Alongside ROCm 7, AMD introduced its ROCm Enterprise AI MLOps solution for enterprise customers. The platform offers tools for fine-tuning models on domain-specific datasets and supports integration into both structured and unstructured workflows. AMD said it is working with ecosystem partners to build reference implementations for applications such as chatbots and document summarization, in a bid to make AMD hardware ready for rapid deployment in production environments.

Finally, AMD launched its Developer Cloud, which provides ready-to-use access to MI300X hardware in configurations ranging from a single MI300X GPU with 192 GB of memory to eight-way MI300X setups with 1,536 GB of memory. AMD offers 25 free usage hours to start, and additional credits are available through its developer programs. Early support for Instinct MI350X-based systems is also planned.

Follow Tom's Hardware on Google News to get our up-to-date news, analysis, and reviews in your feeds. Make sure to click the Follow button.

5 months ago