TechSpot means tech analysis and advice you can trust.

What just happened? AMD's Instinct MI300 has quickly established itself as a major player in the AI accelerator market, driving significant revenue growth. While it can't hope to match Nvidia's dominant market position, AMD's progress indicates a promising future in the AI hardware sector.

AMD's recently launched Instinct MI300 GPU has quickly become a massive revenue driver for the company, rivaling its entire CPU business in sales. It is a significant milestone for AMD in the competitive AI hardware market, where it has traditionally lagged behind industry leader Nvidia.

During the company's latest earnings call, AMD CEO Lisa Su said that the data center GPU business, primarily driven by the Instinct MI300, has exceeded initial expectations. "We're actually seeing now our [AI] GPU business really approaching the scale of our CPU business," she said.

This achievement is particularly noteworthy given that AMD's CPU business encompasses a wide range of products for servers, cloud computing, desktop PCs, and laptops.

The Instinct MI300, introduced in November 2023, represents AMD's first truly competitive GPU for AI inferencing and training workloads. Despite its relatively recent launch, the MI300 has quickly gained traction in the market.

Financial analysts estimate that AMD's AI GPU revenue for the September quarter alone exceeded $1.5 billion, with the following quarter likely to be even stronger.

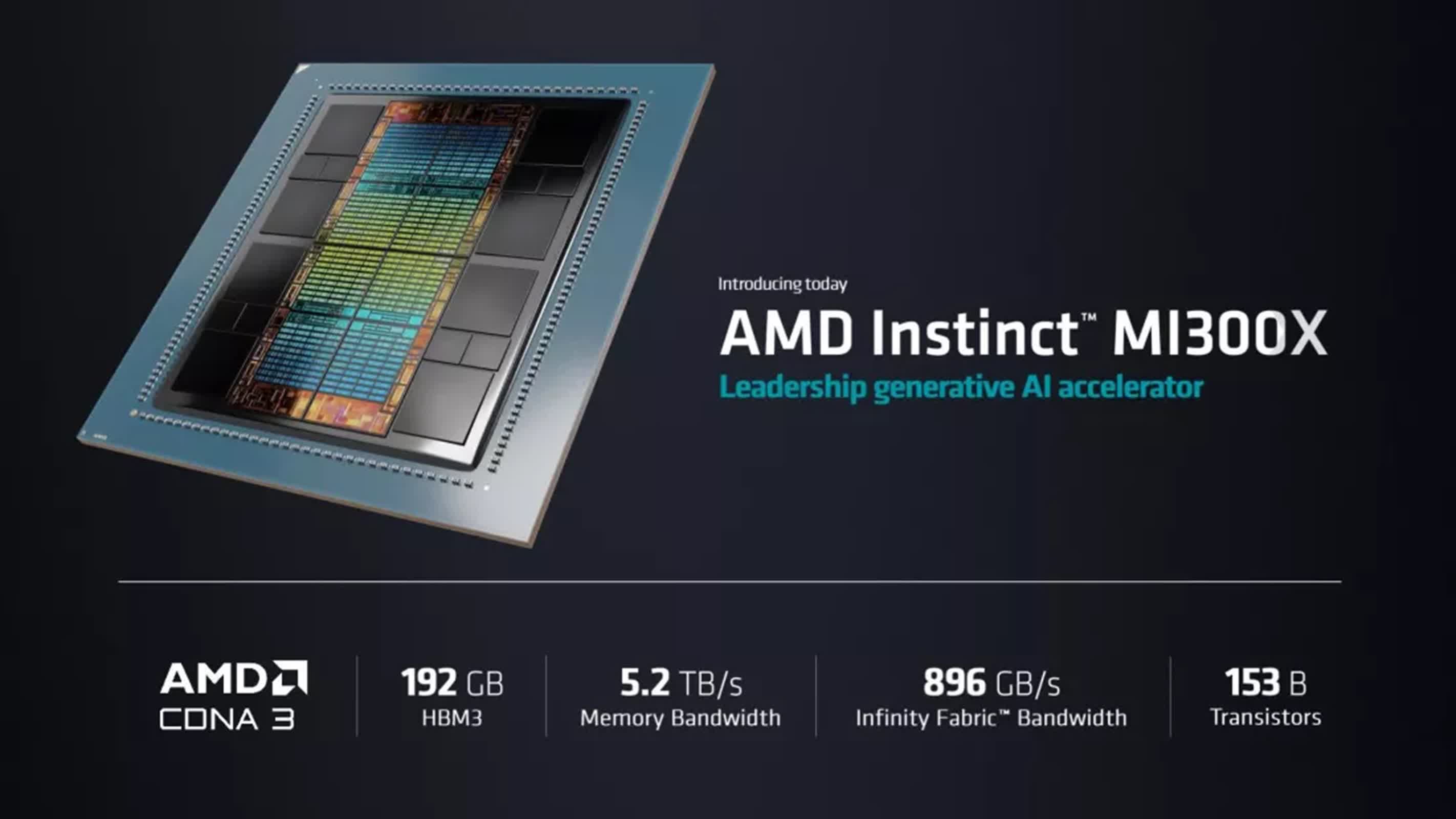

On paper, the MI300 series is competitive with other AI accelerators, particularly in memory capacity and bandwidth.

The MI300X GPU has 304 GPU compute units and 192 GB of HBM3 memory, with a peak theoretical memory bandwidth of 5.3 TB/s. Its peak FP64/FP32 matrix performance is 163.4 TFLOPS, and its peak FP8 performance is 2,614.9 TFLOPS.

The MI300A APU integrates 24 Zen 4 x86 CPU cores alongside 228 GPU compute units. It features 128 GB of Unified HBM3 Memory and matches the MI300X's peak theoretical memory bandwidth of 5.3 TB/s. The MI300A's peak FP64/FP32 Matrix performance stands at 122.6 TFLOPS.
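As a rough sanity check on the figures above, the short Python sketch below divides each chip's quoted peak FP64/FP32 matrix throughput by its compute unit count. The dictionary layout and the helper name `tflops_per_cu` are illustrative assumptions, not anything AMD publishes, and the calculation uses only the numbers quoted in this article.

```python
# Spec figures as quoted in the article. The structure and names here are
# hypothetical and exist only for this back-of-the-envelope comparison.
specs = {
    "MI300X": {"compute_units": 304, "hbm3_gb": 192, "matrix_tflops": 163.4},
    "MI300A": {"compute_units": 228, "hbm3_gb": 128, "matrix_tflops": 122.6},
}


def tflops_per_cu(name: str) -> float:
    """Peak FP64/FP32 matrix TFLOPS divided by GPU compute unit count."""
    chip = specs[name]
    return chip["matrix_tflops"] / chip["compute_units"]


# Ratio of MI300A to MI300X compute units and matrix throughput.
cu_ratio = specs["MI300A"]["compute_units"] / specs["MI300X"]["compute_units"]
tflops_ratio = specs["MI300A"]["matrix_tflops"] / specs["MI300X"]["matrix_tflops"]

if __name__ == "__main__":
    for name in specs:
        print(f"{name}: {tflops_per_cu(name):.4f} TFLOPS per compute unit")
    print(f"Compute unit ratio (MI300A / MI300X): {cu_ratio:.3f}")
    print(f"Matrix TFLOPS ratio (MI300A / MI300X): {tflops_ratio:.3f}")
```

If the quoted specs are accurate, the two ratios should come out nearly identical, which would suggest that the MI300A's lower peak figure mostly reflects its smaller number of GPU compute units rather than a slower design.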

The success of the Instinct MI300 has also attracted major cloud providers, such as Microsoft. The Windows maker recently announced the general availability of its ND MI300X VM series, which features eight AMD Instinct MI300X accelerators. Earlier this year, Microsoft Cloud and AI Executive Vice President Scott Guthrie said that AMD's accelerators are currently the most cost-effective GPUs available, based on their performance in Azure AI Service.

While AMD's growth in the AI GPU market is impressive, the company still trails behind Nvidia in overall market share. Analysts project that Nvidia could achieve AI GPU sales of $50 to $60 billion in 2025, while AMD might reach $10 billion at best.

However, AMD CFO Jean Hu noted that the company is working on over 100 customer engagements for the MI300 series, including major tech companies like Microsoft, Meta, and Oracle, as well as a broad set of enterprise customers.

The rapid success of the Instinct MI300 in the AI market raises questions about AMD's future focus on consumer graphics cards, as the significantly higher revenues from AI accelerators may influence the company's resource allocation. But AMD has confirmed its commitment to the consumer GPU market, with Su announcing that the next-generation RDNA 4 architecture, likely to debut in the Radeon RX 8800 XT, will arrive early in 2025.
