TechSpot means tech analysis and advice you can trust.

In a nutshell: AMD has launched the fifth generation of its Epyc server processors, codenamed "Turin." The lineup includes 27 SKUs and introduces significant advancements with the new Zen 5 and 5c core architectures. These new chips will compete in the data center market against Intel's Granite Rapids and Sierra Forest offerings.

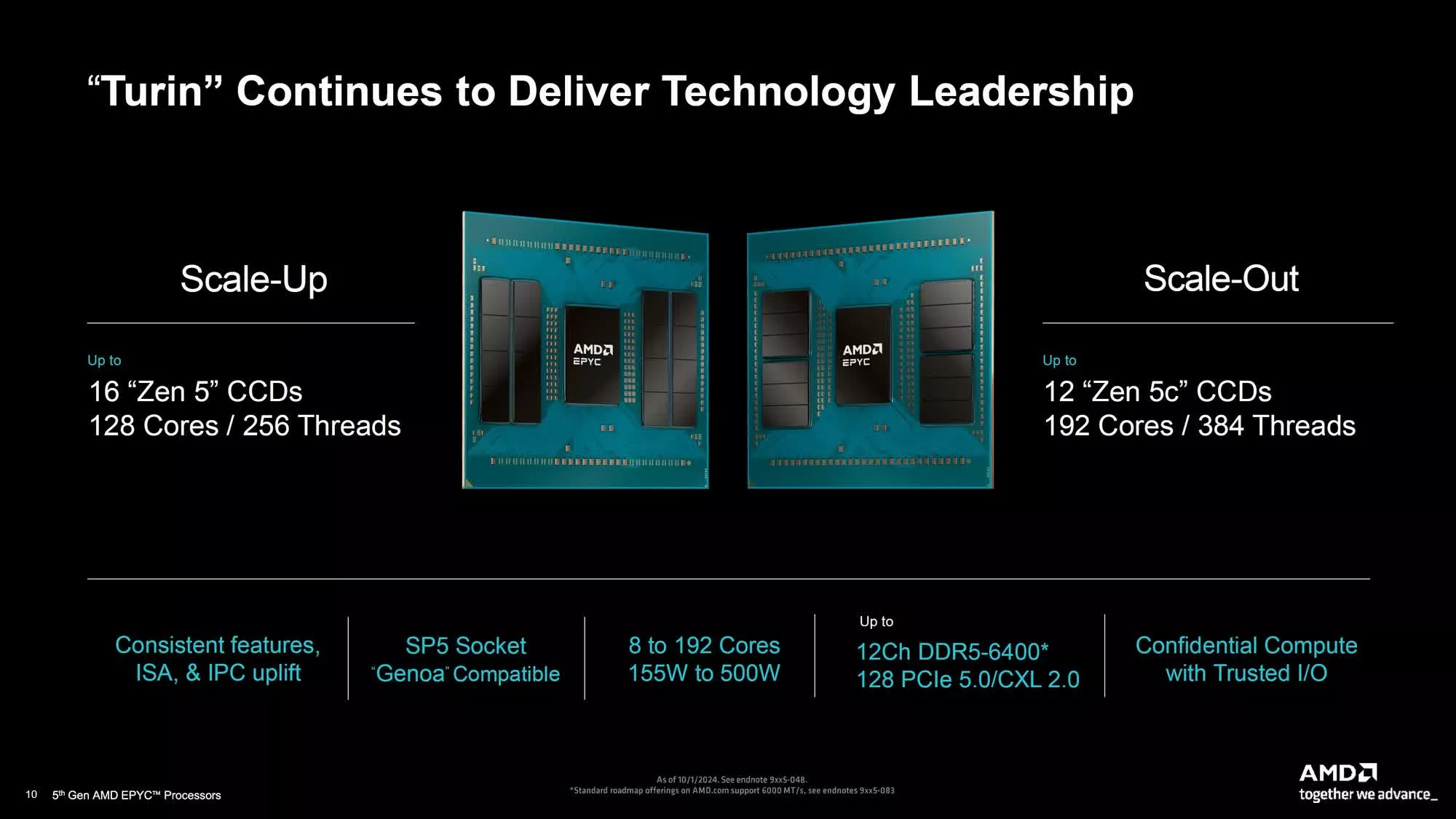

Launched under the Epyc 9005 branding, the 5th-gen Epyc CPU family is compatible with AMD's SP5 socket, just like the Zen 4-based "Genoa" and "Bergamo" processors, and features two distinct designs.

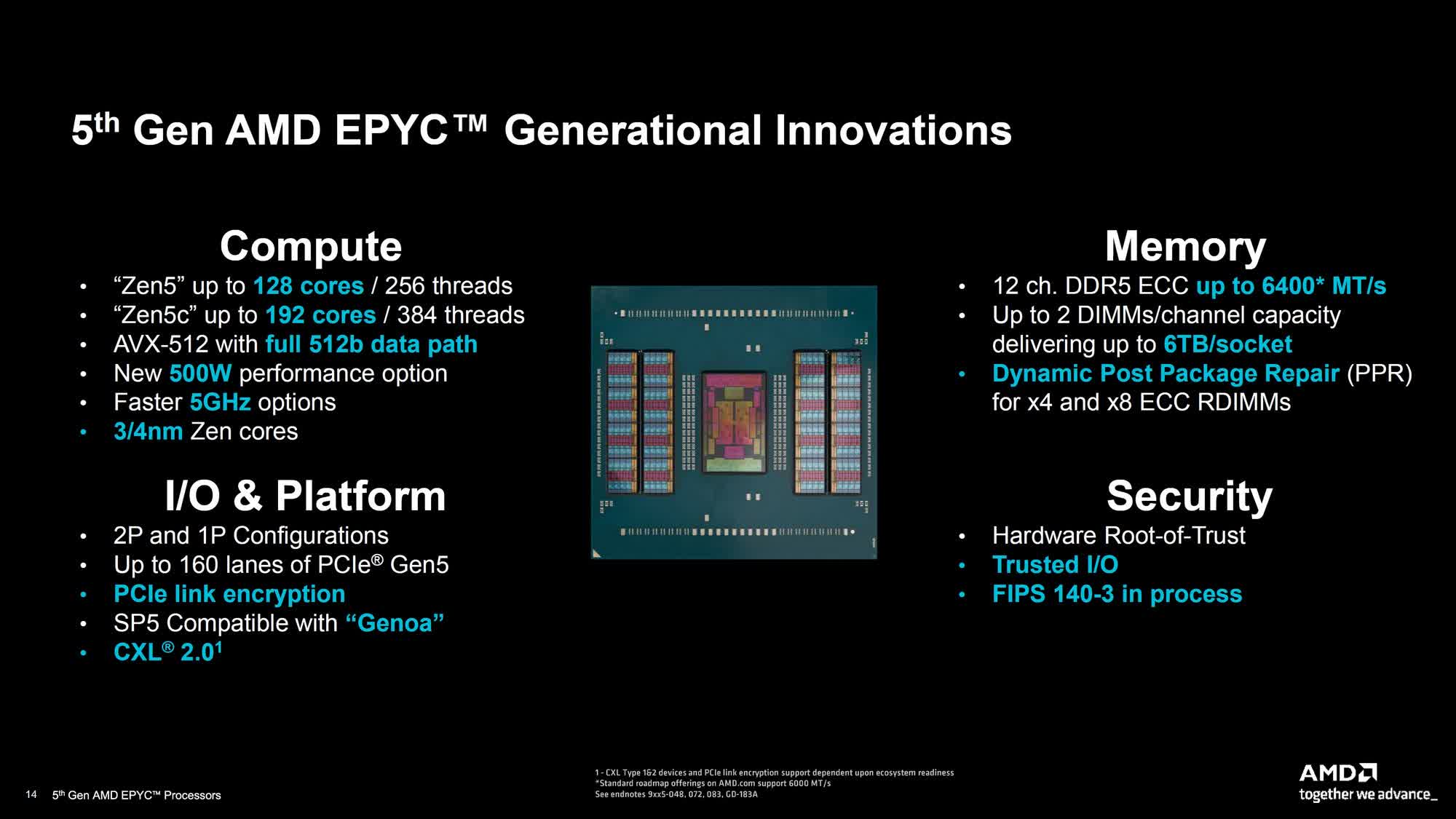

The first is the "Scale-Up" variant, utilizing 4nm Zen 5 cores with up to 16 CCDs for optimal single-threaded performance. The second is the "Scale-Out" variant, which leverages the 3nm Zen 5c core design with up to 12 CCDs for improved multi-core throughput.

The lineup is led by the Epyc 9965, which features 192 Zen 5c cores, 384 threads, a base clock of 2.25GHz, and a boost clock of up to 3.7GHz. It offers 384MB of L3 cache and has a default TDP of 500W. The processor is priced at $14,813.

The flagship Zen 5 product is the Epyc 9755, which boasts 128 cores, 256 threads, 512MB of L3 cache, a 2.7GHz base clock, a 4.1GHz boost clock, and a 500W TDP. It retails for $12,984.

On the other end of the spectrum, the entry-level Turin processor is the Epyc 9015, featuring 8 Zen 5 cores, a 3.6GHz base clock, a 4.1GHz boost clock, a 125W TDP, and 64MB of L3 cache. It has an MSRP of $527.
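For a rough sense of how the three SKUs above compare, the quoted figures can be normalized per core. The sketch below uses only the core counts, L3 sizes, TDPs, and prices listed in this article; the `Sku` class and its property names are illustrative, and list price per core says nothing about real-world performance or street pricing.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Sku:
    """Headline specs as quoted in the article (hypothetical helper type)."""
    name: str
    cores: int
    l3_mb: int
    tdp_w: int
    price_usd: int

    @property
    def usd_per_core(self) -> float:
        # List price divided by physical core count.
        return self.price_usd / self.cores

    @property
    def l3_mb_per_core(self) -> float:
        # Total L3 cache divided evenly across cores.
        return self.l3_mb / self.cores

    @property
    def watts_per_core(self) -> float:
        # Default TDP divided by core count (a budget, not measured power).
        return self.tdp_w / self.cores


SKUS = [
    Sku("Epyc 9965", cores=192, l3_mb=384, tdp_w=500, price_usd=14813),
    Sku("Epyc 9755", cores=128, l3_mb=512, tdp_w=500, price_usd=12984),
    Sku("Epyc 9015", cores=8, l3_mb=64, tdp_w=125, price_usd=527),
]

for sku in SKUS:
    print(
        f"{sku.name}: ${sku.usd_per_core:.2f}/core, "
        f"{sku.l3_mb_per_core:.1f} MB L3/core, "
        f"{sku.watts_per_core:.2f} W/core"
    )
```

On these numbers, the dense Zen 5c flagship comes in cheaper per core than the Zen 5 flagship but carries half the L3 cache per core, which is consistent with its throughput-oriented positioning.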

AMD claims the Epyc 9965 delivers up to 3.7x the performance of Intel's Xeon Platinum 8592+ in end-to-end AI workloads, such as TPCx-AI (derivative). In generative AI models, like Meta's Llama 3.1-8B, the Epyc 9965 is said to deliver 1.9x the throughput of the Xeon Platinum 8592+.

According to AMD, the Zen 5 cores give Turin significant instructions-per-clock (IPC) gains over the previous generation: up to 17 percent for enterprise and cloud workloads, and up to 37 percent for HPC and AI workloads.

The chips also feature boost frequencies of up to 5GHz (Epyc 9575F and 9175F) and AVX-512 support with a full 512-bit data path. AMD says the Epyc 9575F, its purpose-built AI host node CPU, uses that 5GHz boost clock to help a 1,000-node AI cluster handle up to 700,000 more inference tokens per second.

Beyond the shift to the Zen 5 core architecture, Turin introduces several key advancements, including support for up to 12 channels of DDR5-6400 memory, up to 6TB of memory per socket, and 128 PCIe 5.0/CXL 2.0 lanes. Another notable feature is Dynamic Post Package Repair (PPR) for x4 and x8 ECC RDIMMs, which improves memory reliability.
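To put the memory figures in context, the theoretical peak bandwidth per socket can be estimated from the channel count and transfer rate. The sketch below assumes the standard 64-bit (8-byte) data width per DDR5 channel, which the article does not state, and the function name is illustrative. Sustained bandwidth in practice will be lower than this ceiling.

```python
def peak_dram_bandwidth_gbs(
    channels: int,
    mega_transfers_per_s: int,
    bytes_per_transfer: int = 8,  # assumption: 64-bit data width per channel
) -> float:
    """Theoretical peak DRAM bandwidth in GB/s (decimal gigabytes)."""
    bytes_per_second = channels * mega_transfers_per_s * 1e6 * bytes_per_transfer
    return bytes_per_second / 1e9


# 12 channels of DDR5-6400, as quoted for Turin
turin_peak = peak_dram_bandwidth_gbs(channels=12, mega_transfers_per_s=6400)
print(f"{turin_peak:.1f} GB/s per socket")  # 614.4 GB/s per socket
```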
