Tech companies are selling a vision of a world in which you can ask their AI for advice on what to cook, wear, and do with your free time. The quality of that advice is often dubious at best (do you like putting glue on your pizza?), but we're meant to believe this is the future of computing. As for who's paying for the massive amounts of electricity used in the course of answering those questions, well, the answer is "us," as consumer electricity prices across the country are already rising due to a power grid that's ill-prepared for the sudden spike in demand.

Newsweek reports that increased consumption from data centers already contributed to a 6.5% increase in energy prices between May 2024 and May 2025. (Though it's worth noting that's just the average, with Connecticut and Maine reporting increases of 18.4% and 36.3%, respectively.) And those numbers are expected to rise as tech companies continue to build out their AI-related infrastructure.

"To keep pace, utilities are increasingly relying on aging fossil fuel plants to generate enough electricity to meet the crushing demand," Newsweek says. "Dominion Energy, which serves much of Virginia, has asked regulators to require large-load customers to pay a fairer share of grid upgrade costs. Without reform, electricity prices in parts of Virginia are expected to climb as much as 25 percent by 2030."

This problem is unlikely to be solved by developing more efficient models, either, and not just because software developers have historically treated efficiency gains as room to cram in more features rather than as a benefit in themselves. It's also because the AI tools available today rely upon the constant ingestion of information, which leads the companies that created them to fetch as much content as they possibly can.

That incessant retrieval of the sum of digitally accessible human knowledge has other consequences—which is why one scholar dubbed AI crawlers a "digital menace"—but right now we're focused on the amount of energy those operations require. And constantly fetching all that information is merely the first step in the process of incorporating it into the AI's dataset.

It's no wonder that Lawrence Berkeley National Laboratory’s 2024 United States Data Center Energy Usage Report (PDF) for the U.S. Department of Energy included a figure on data centers' electricity use that showed a "compound annual growth rate of approximately 7% from 2014 to 2018, increasing to 18% between 2018 and 2023, and then ranging from 13% to 27% between 2023 and 2028."

That would lead to the industry's power usage representing "6.7% to 12.0% of total U.S. electricity consumption forecasted for 2028," according to the report. U.S. electric grids simply aren't prepared to meet those demands—especially since they're also supposed to be preparing for "a combination of electric vehicle adoption, onshoring of manufacturing, hydrogen utilization, and the electrification of industry and buildings."
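To get a feel for what those compound annual growth rates mean, here's a quick back-of-the-envelope sketch. It assumes the 13% and 27% rates compound once a year over the five years from 2023 to 2028, and it works with a relative multiplier rather than actual consumption figures, which aren't quoted here. The function name is ours, not the report's.

```python
def growth_multiplier(annual_rate: float, years: int) -> float:
    """Return how many times larger a quantity becomes after compounding
    at `annual_rate` (e.g. 0.13 for 13%) once a year for `years` years."""
    return (1.0 + annual_rate) ** years


# Assumption: 2023 -> 2028 is treated as five annual compounding periods.
YEARS_2023_TO_2028 = 5

# Low and high ends of the growth range quoted from the report.
low = growth_multiplier(0.13, YEARS_2023_TO_2028)
high = growth_multiplier(0.27, YEARS_2023_TO_2028)

print(f"Low end (13%/yr):  {low:.2f}x 2023 usage")
print(f"High end (27%/yr): {high:.2f}x 2023 usage")
```

Under those assumptions, data centers would be using somewhere between roughly 1.8 and 3.3 times as much electricity in 2028 as they did in 2023.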


It's not just a matter of unplugging these data centers, either, because their backup power supplies are a problem too. Reuters reports that "the rapid expansion of data centers processing the vast amounts of information used for AI and crypto mining is forcing grid operators to plan for new contingencies and complicating the already difficult task of balancing the country’s supply and demand of electricity."

That report cited several "near-misses" in which grid operators narrowly avoided wide-scale blackouts caused by data centers activating their own power generators. That can lead to an oversupply of electricity that overwhelms the infrastructure they're connected to unless the companies operating them respond quickly enough. Failing to respond in time could lead to "cascading power outages across the [affected] region."

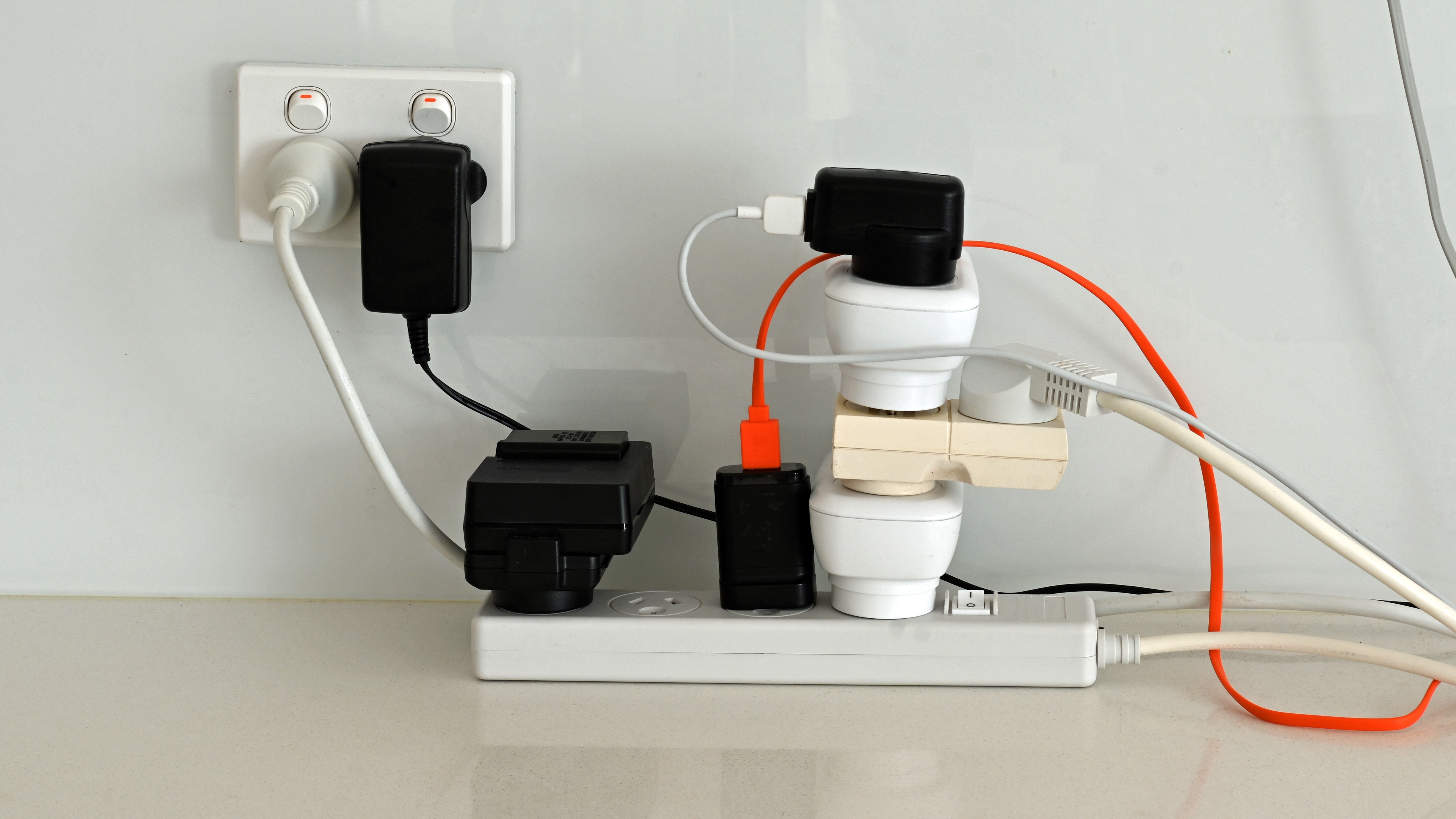

Even if that worst-case scenario is avoided, living near these power-hungry data centers reportedly "reduces the life span of electrical appliances, which can cause malfunctions, overheating, and electrical fires." (Which could in turn make this the first time a "not in my back yard" mentality is somewhat justified.) And that doesn't even consider the air, noise, and light pollution generated by these facilities.

All of which is to say that the current obsession with AI is putting immense demands on electric grids, leading to additional stress on appliances connected to those grids, and increasing the risk of power outages through the use of on-site generators meant solely to protect the facility's equipment without regard for the effect it could have on everyone else—and, per Newsweek's report, Americans are paying for that privilege.
