It's a tough time to be a gamer on a tight budget. The AI boom has made fab time a precious resource. There's no business case for GPU vendors to spend their TSMC wafers churning out low-cost, low-margin, entry-level chips, much as we might want them to.

The ever-shifting tariff situation in the USA means prices are constantly in flux. And ever-increasing VRAM requirements mean that the 4GB and 6GB graphics cards of yore are being crushed by the latest games. Even if you can still find those cards on shelves, they're not smart buys.

So what’s the least a PC gamer can spend on a new graphics card these days and get a good gaming experience? We tried to find out.

We drew a hard line at cards with 8GB of VRAM. Recent graphics card launches have shown that 8GB is the absolute minimum for gamers who want to run modern titles at a 1080p resolution.

PC builders in this bracket aren’t going to be turning on Ray Tracing Overdrive mode in Cyberpunk 2077, or RT more generally, which is where VRAM frequently starts to become a true limit. Even raster games can challenge 8GB cards at 1080p with all settings maxed, though.

We also limited our search to modern cards that support DirectX 12 Ultimate. You might find a cheap GPU out there with 8GB of VRAM, but if it doesn’t support DirectX 12 Ultimate, it’s truly ancient.

Within those constraints, we found three potentially appealing options, all around the $200 mark. The Radeon RX 6600 is available for just $219.99 at Newegg right now in the form of ASRock's Challenger D model. Intel's Arc A750 can be had for $199.99, also courtesy of ASRock. Finally, the GeForce RTX 3050 8GB is still hanging around at $221 thanks to MSI's Ventus 2X XS card. We pitted these cards against one another to find out whether any of them is still worth buying.

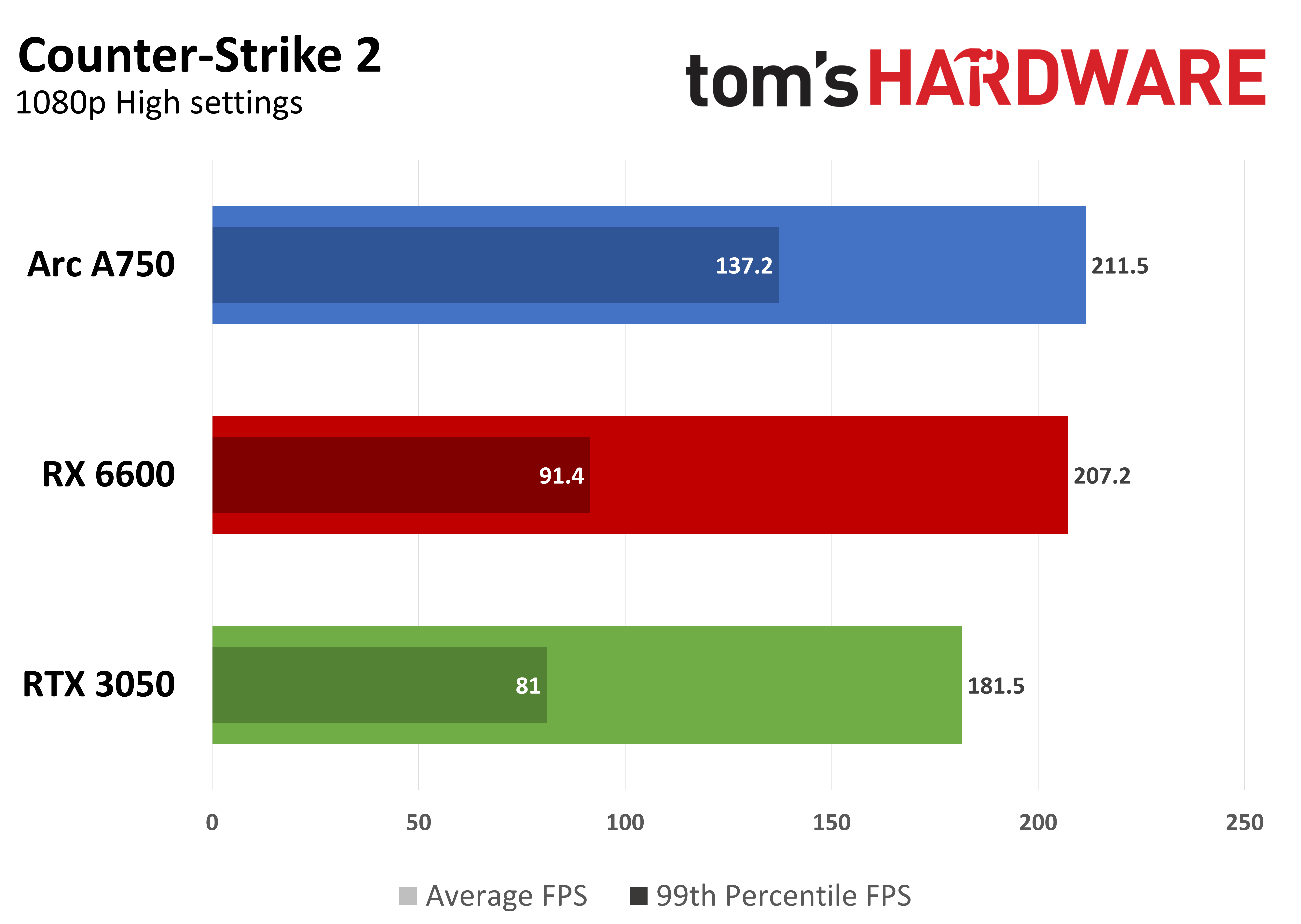

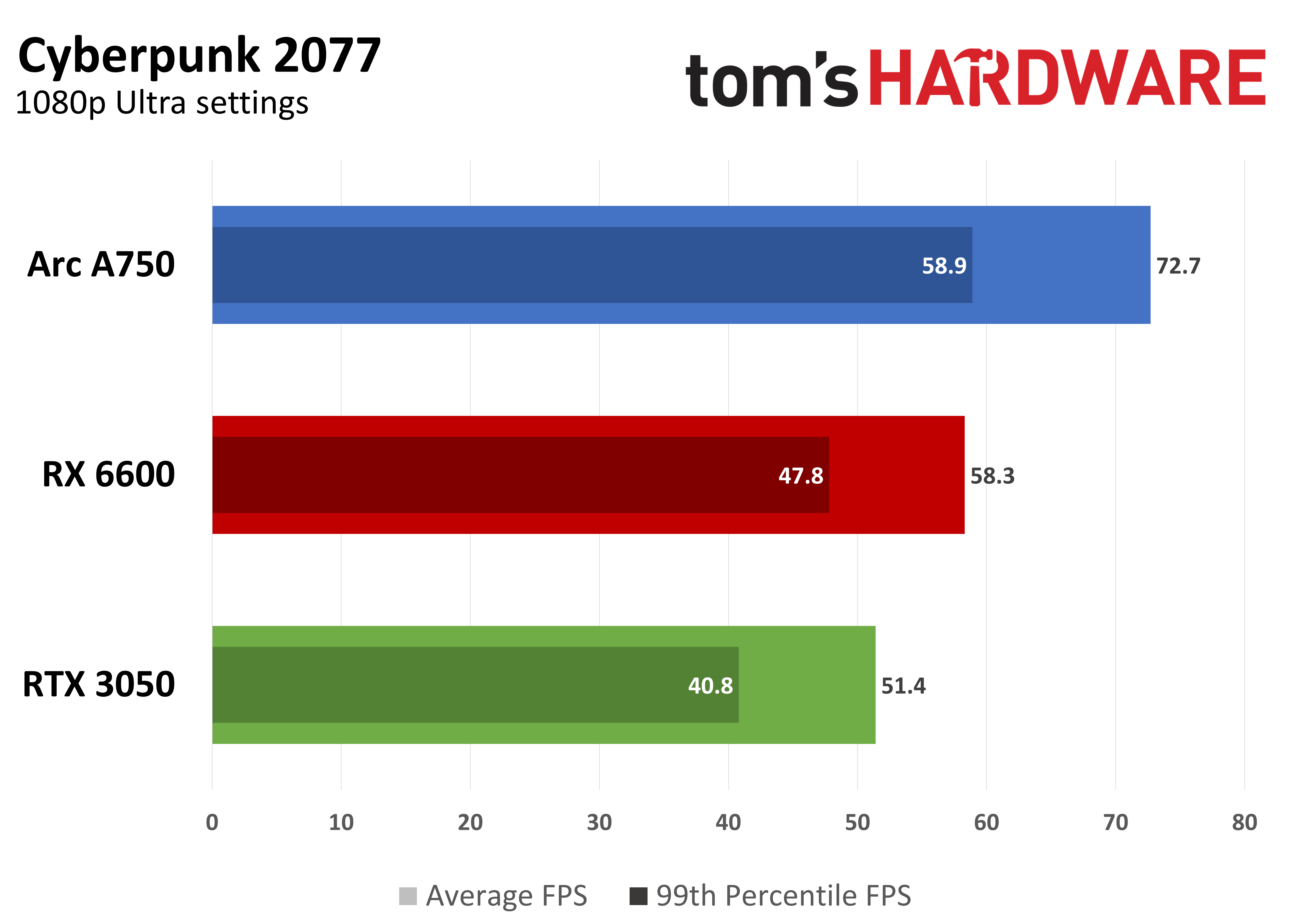

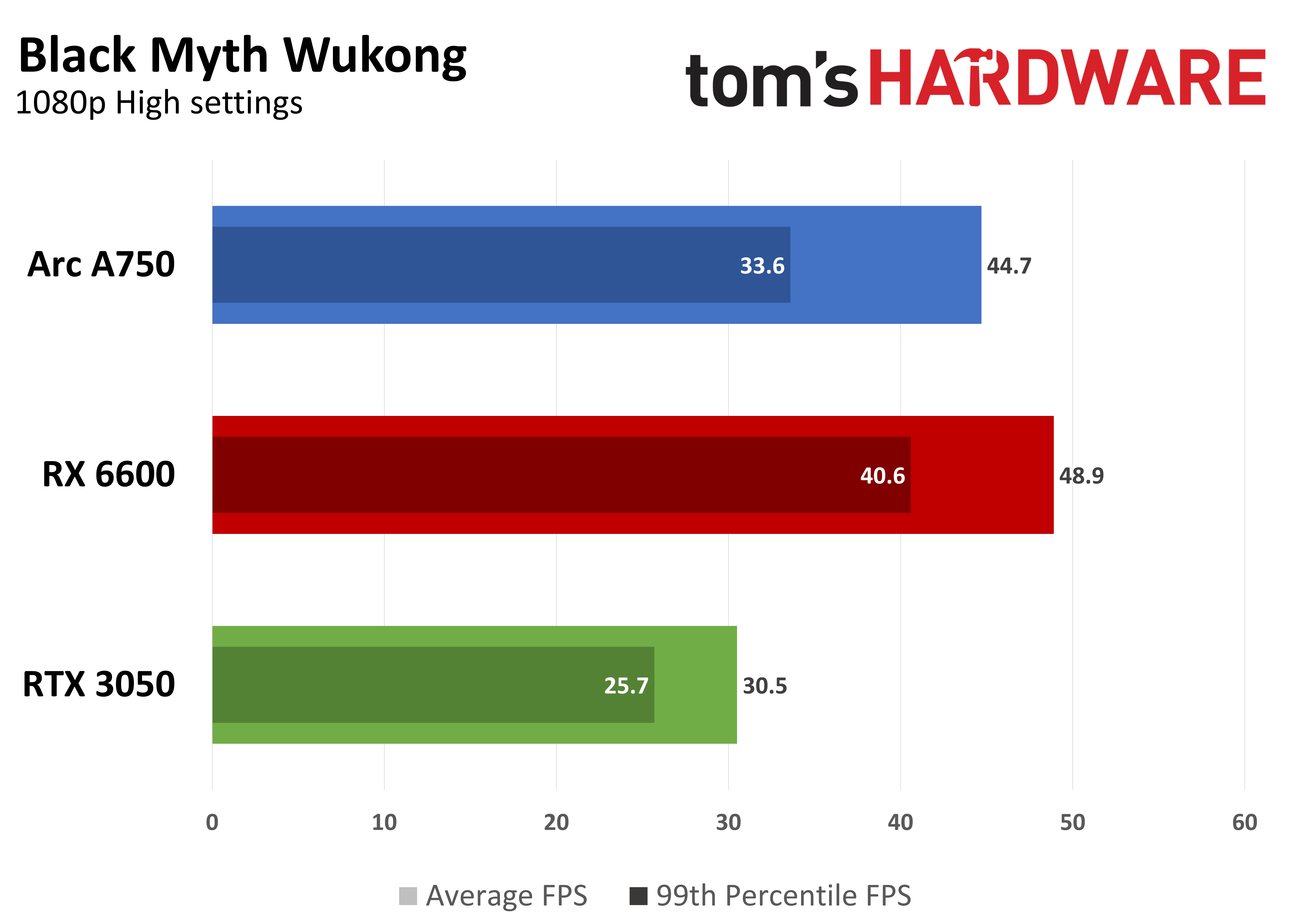

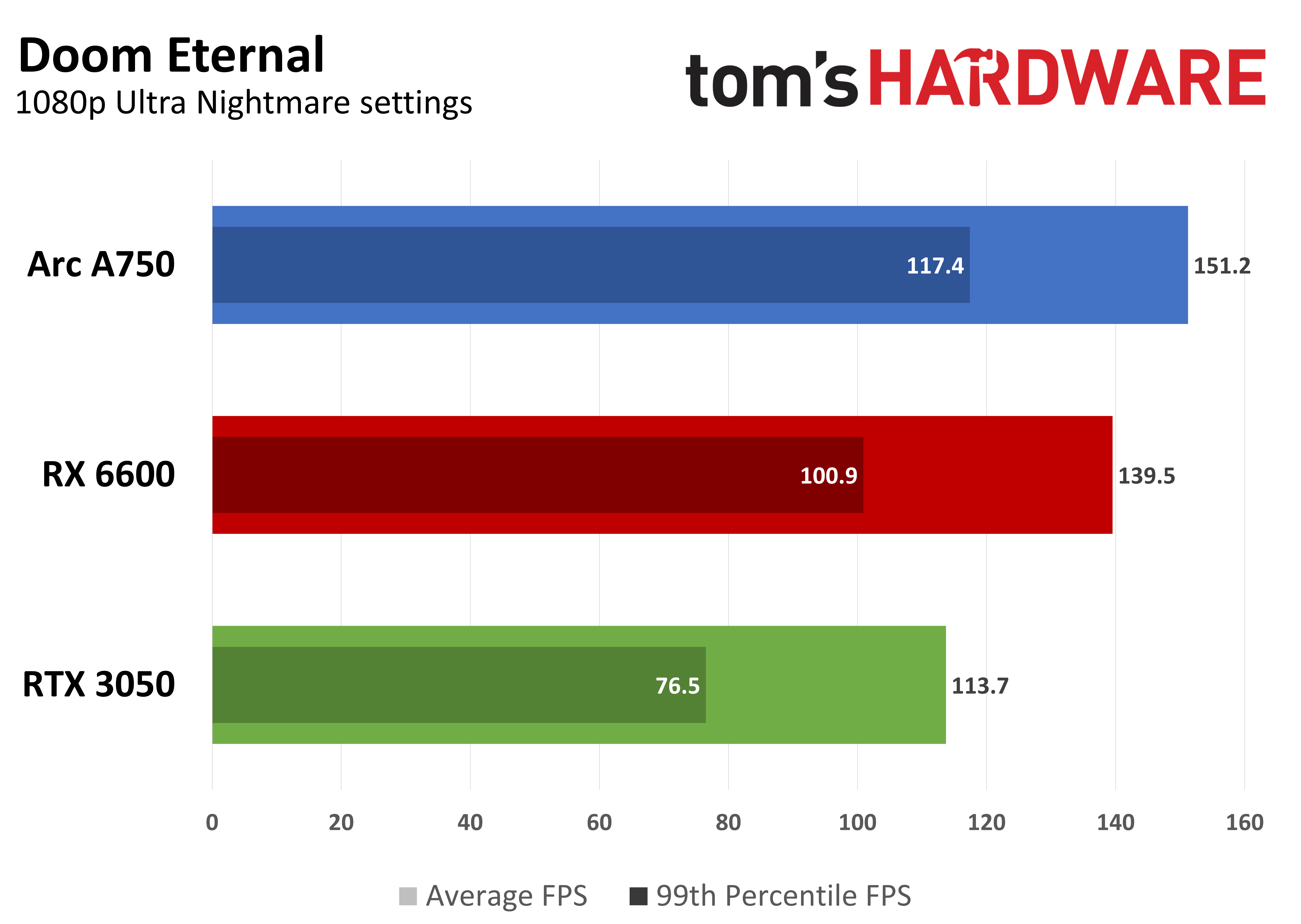

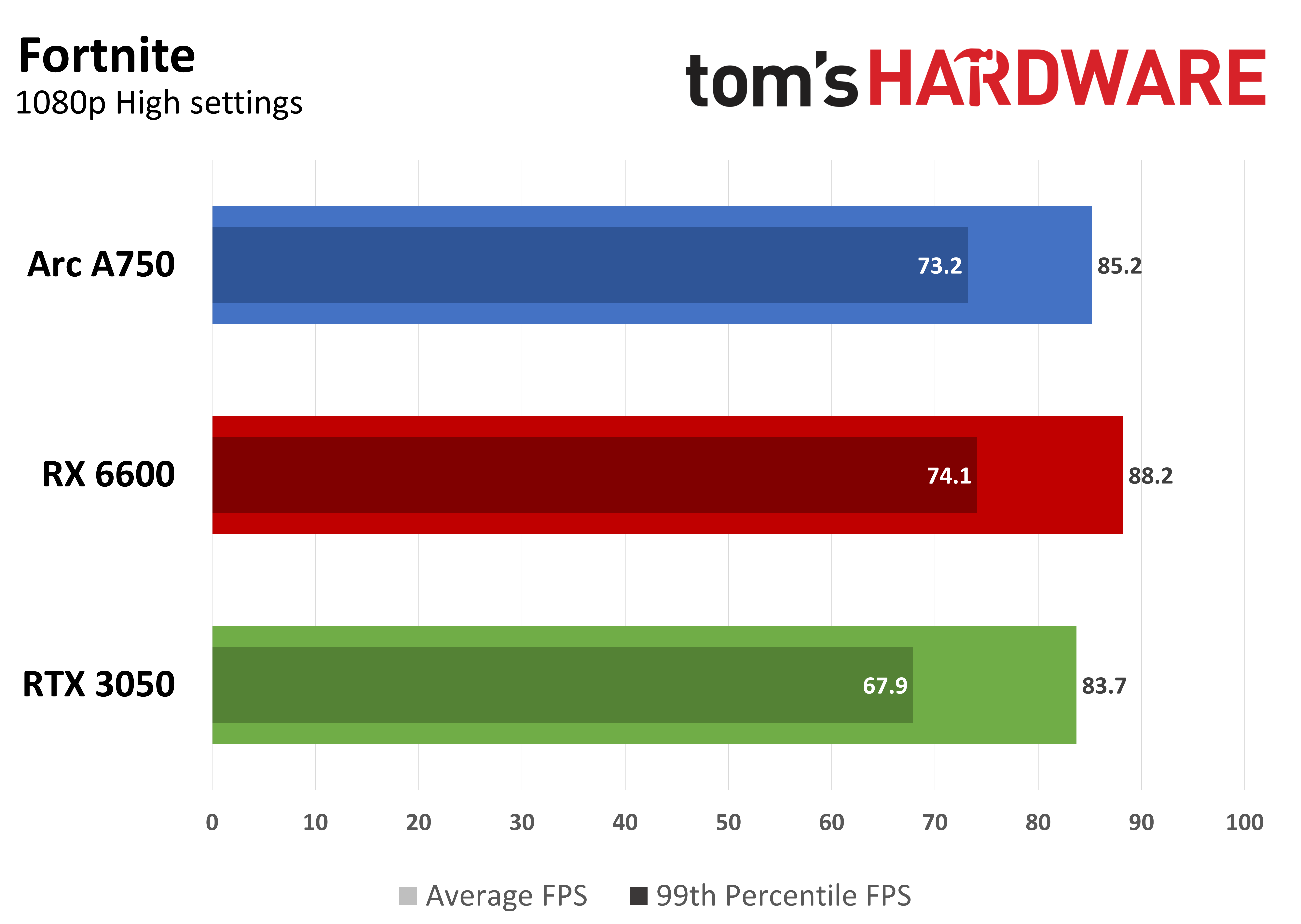

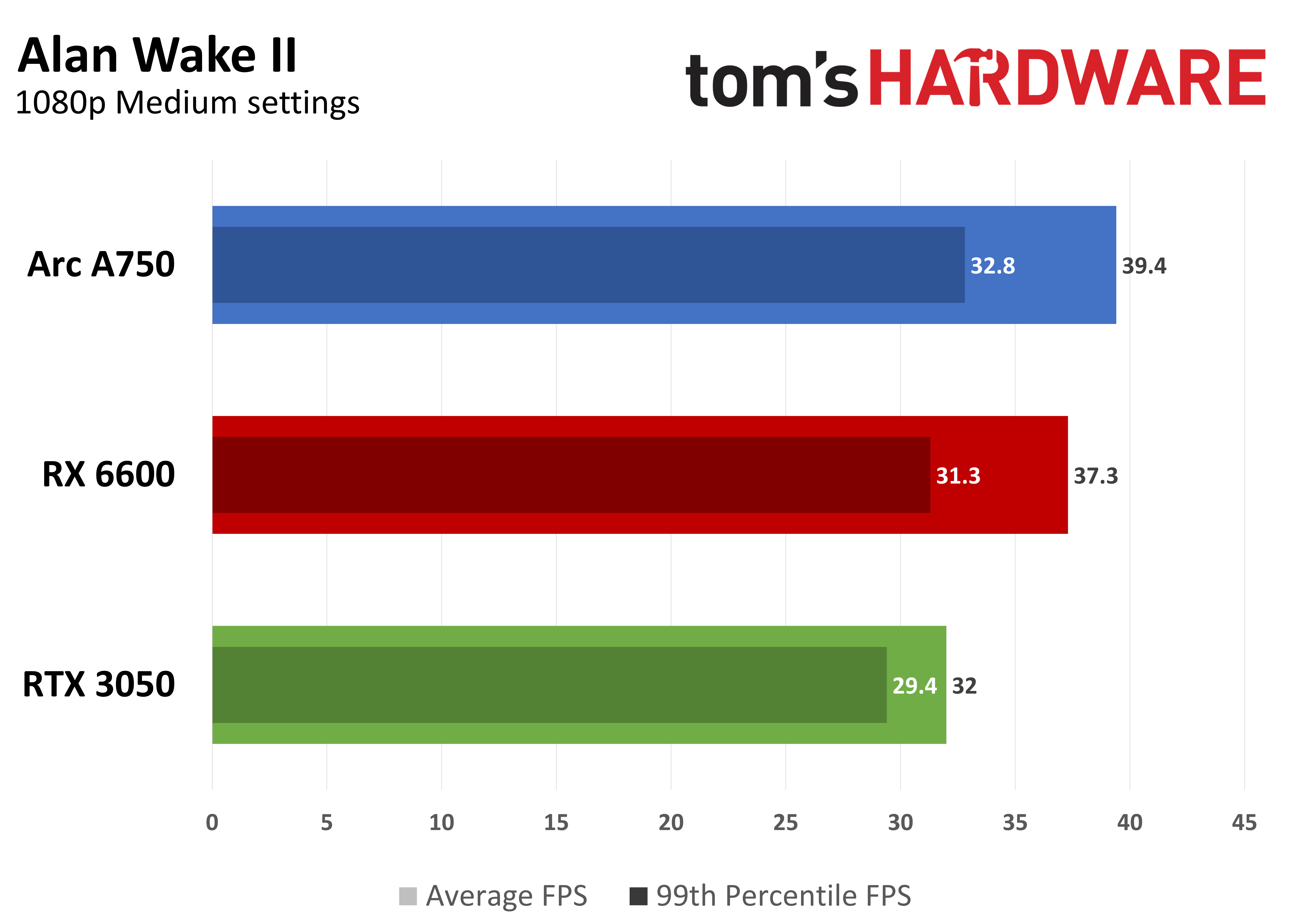

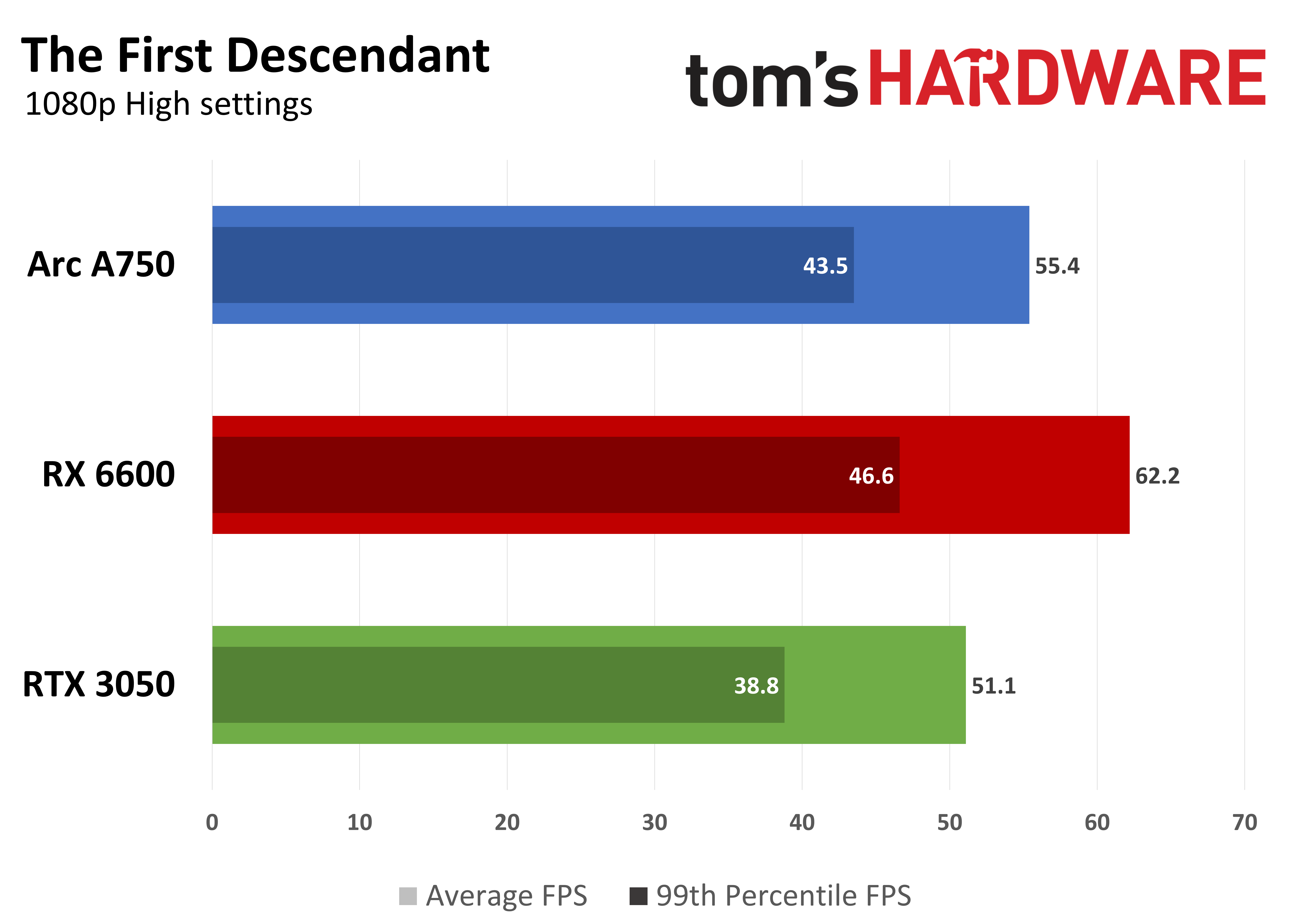

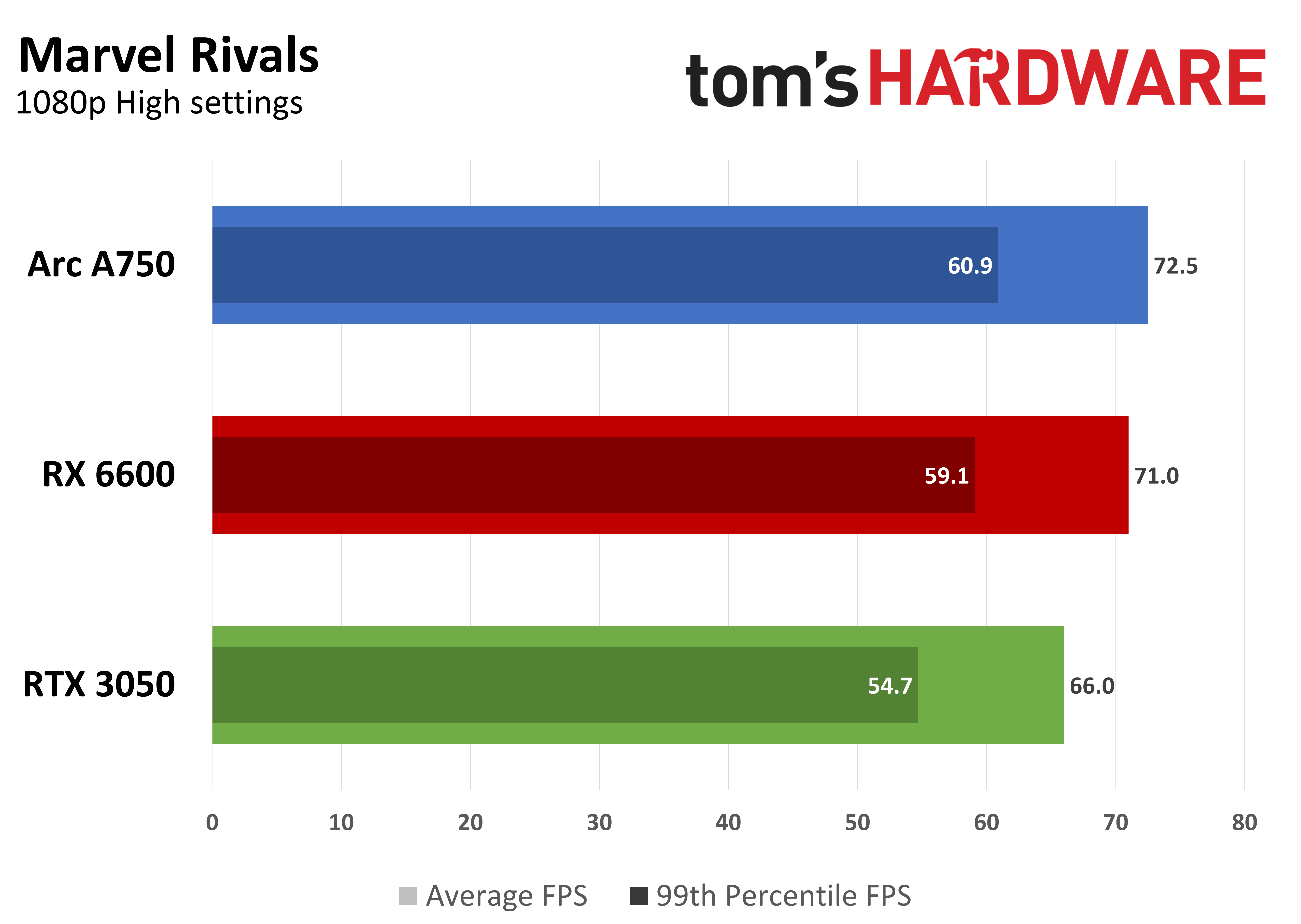

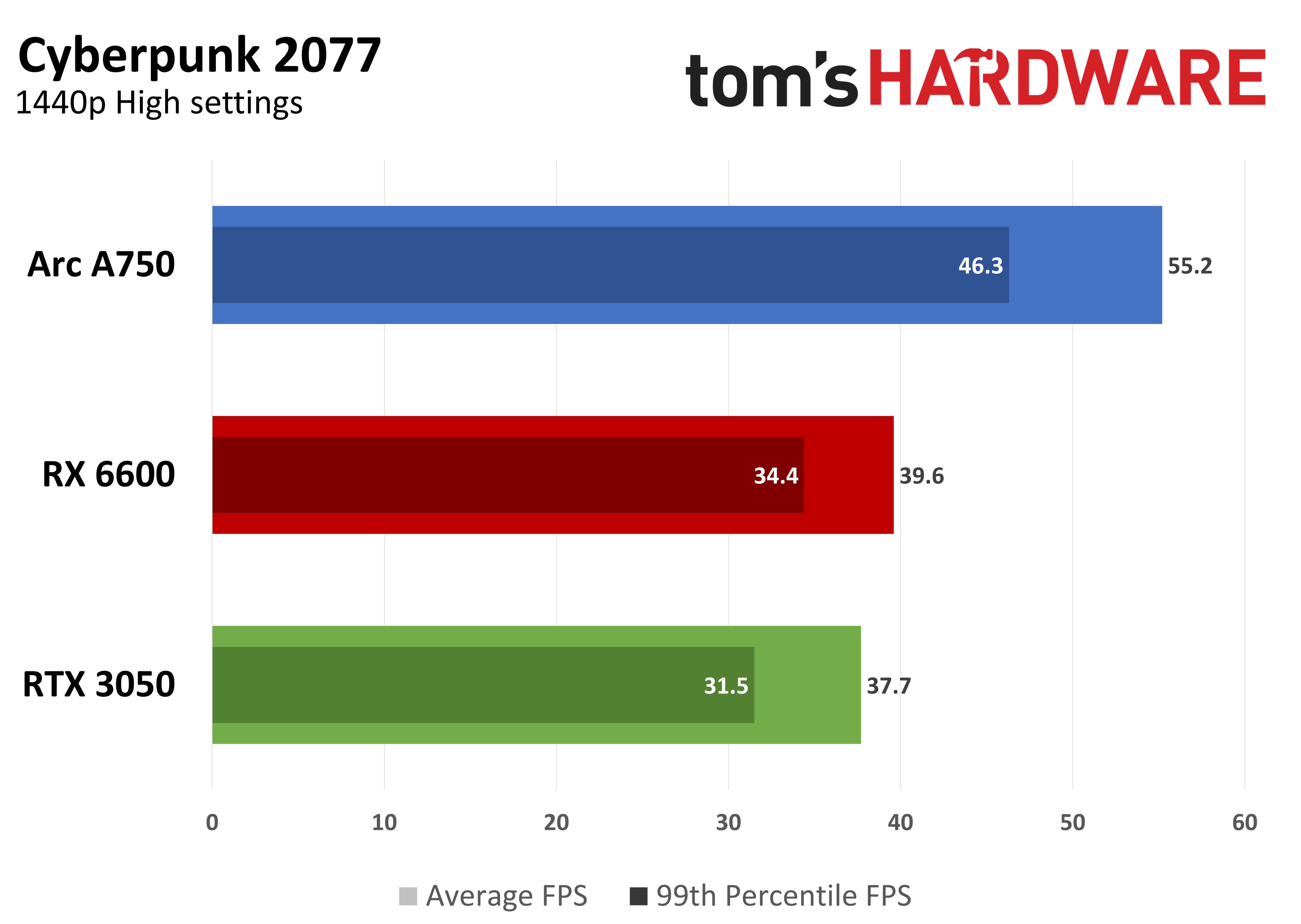

Raster gaming performance

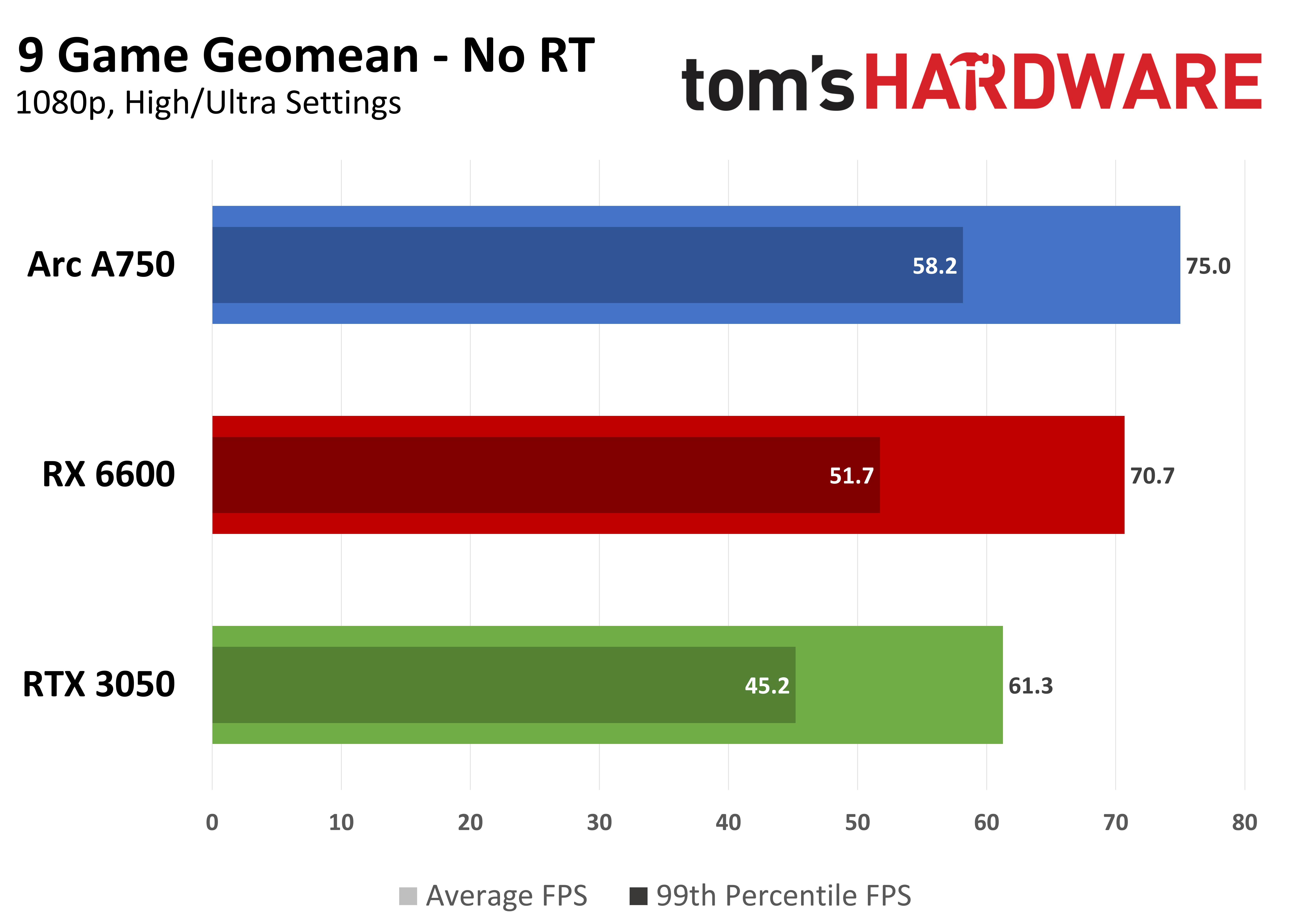

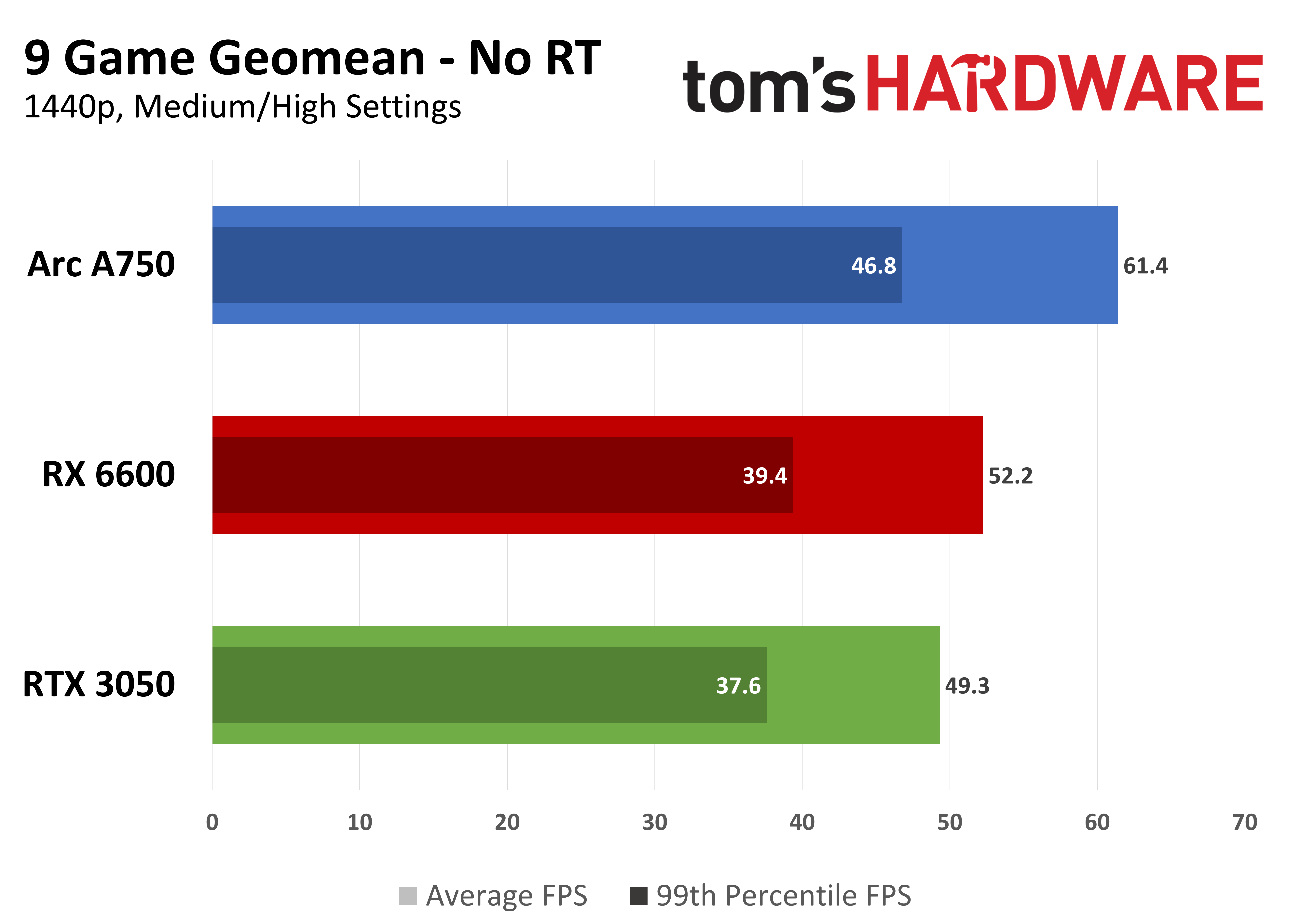

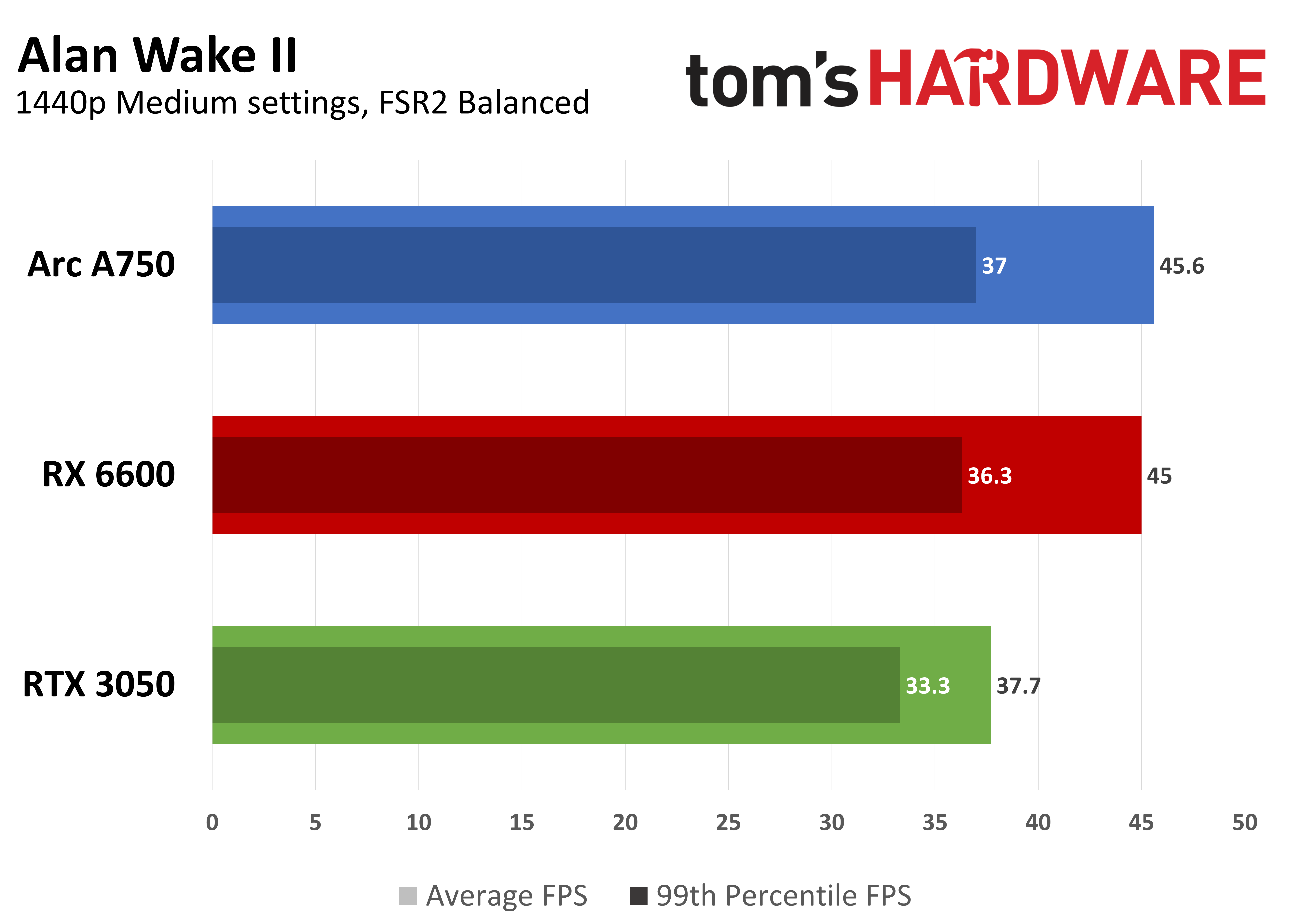

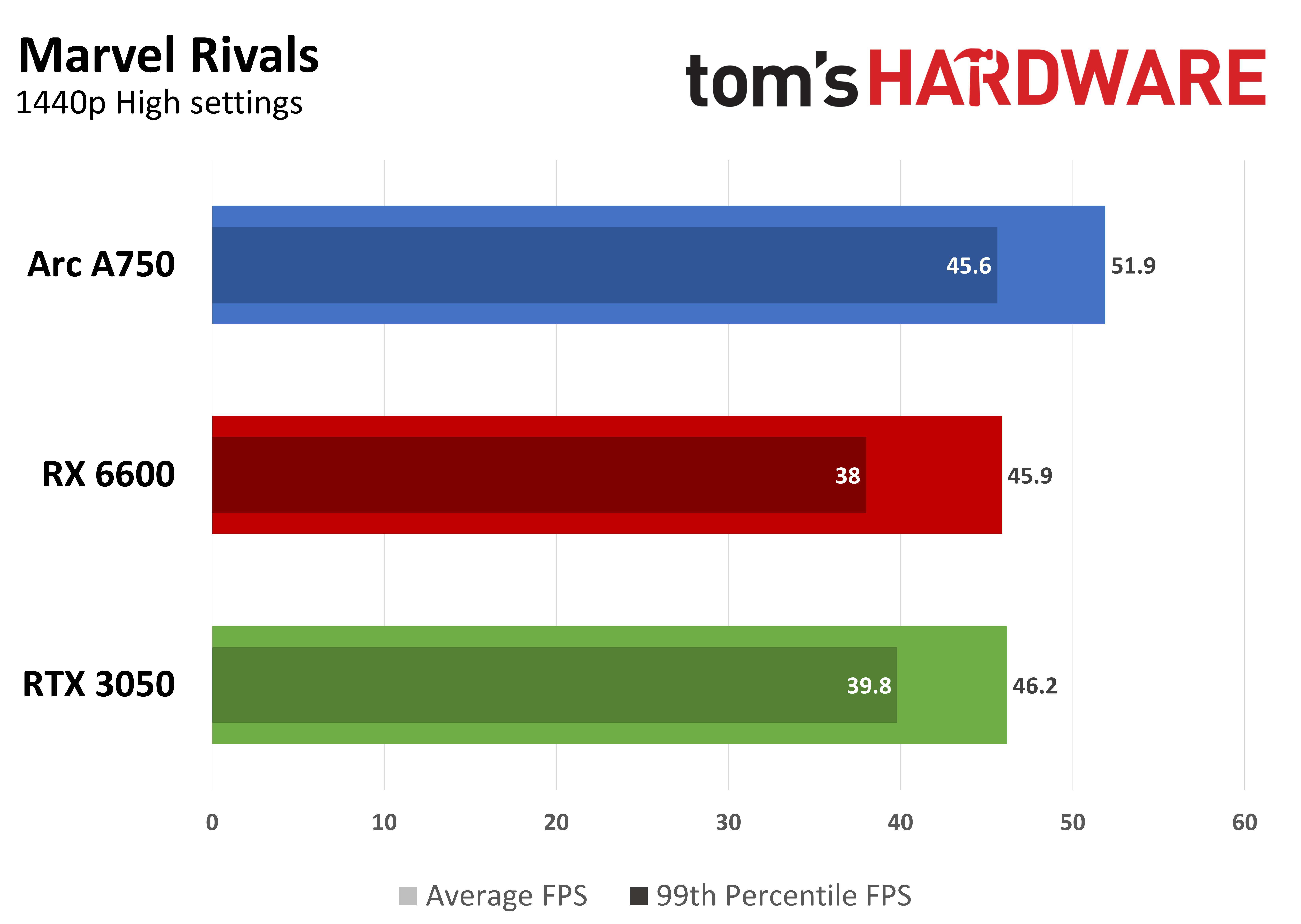

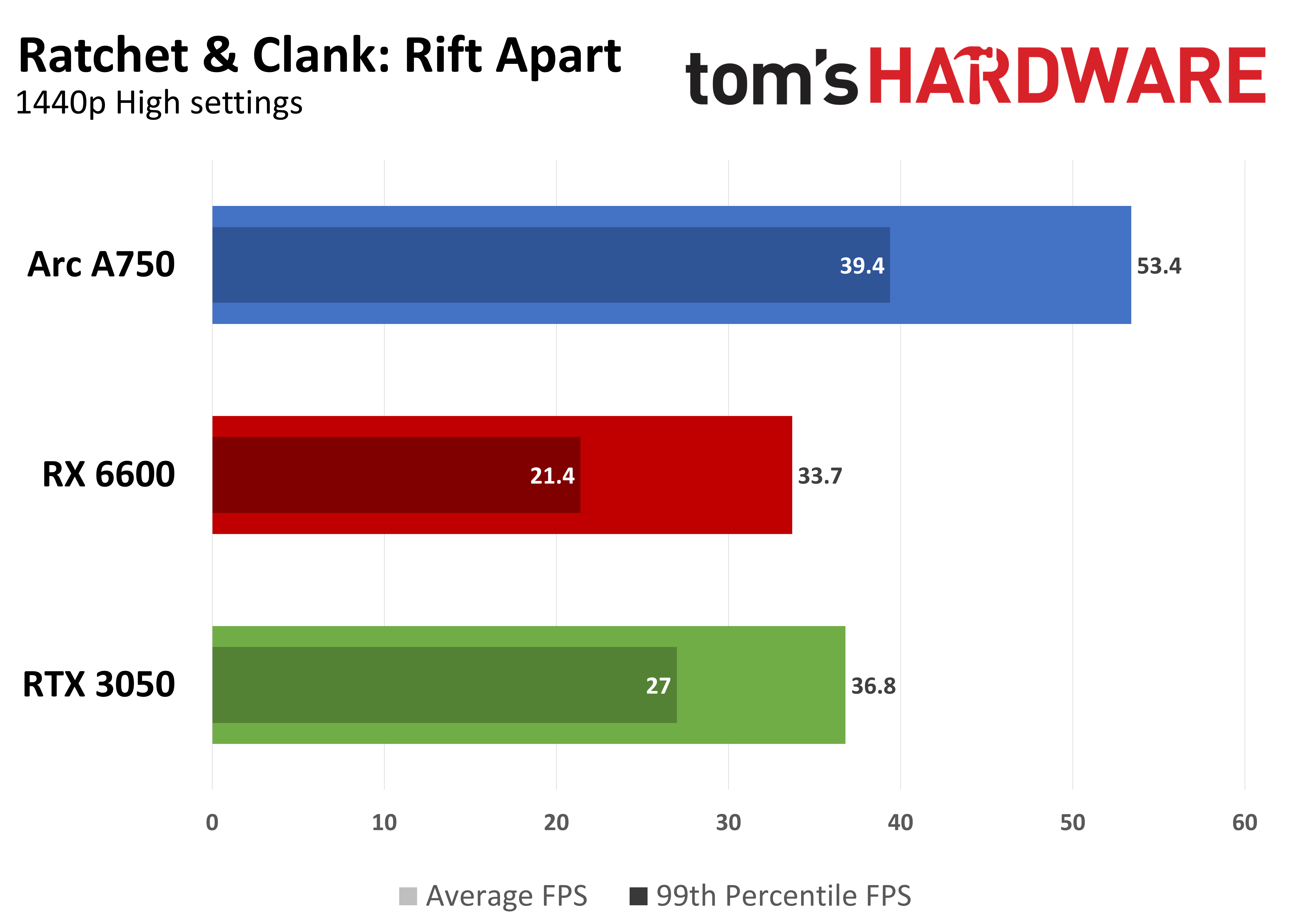

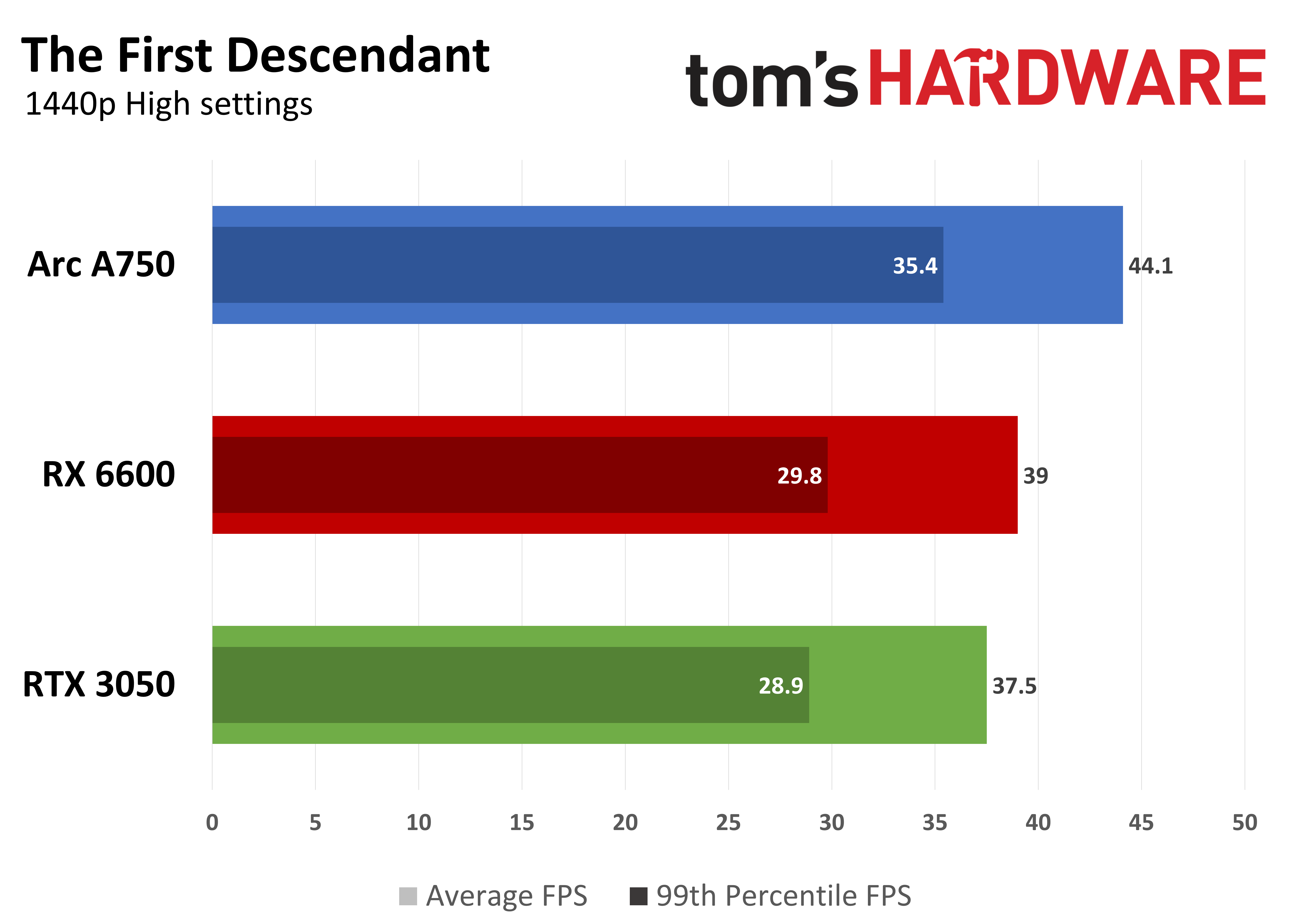

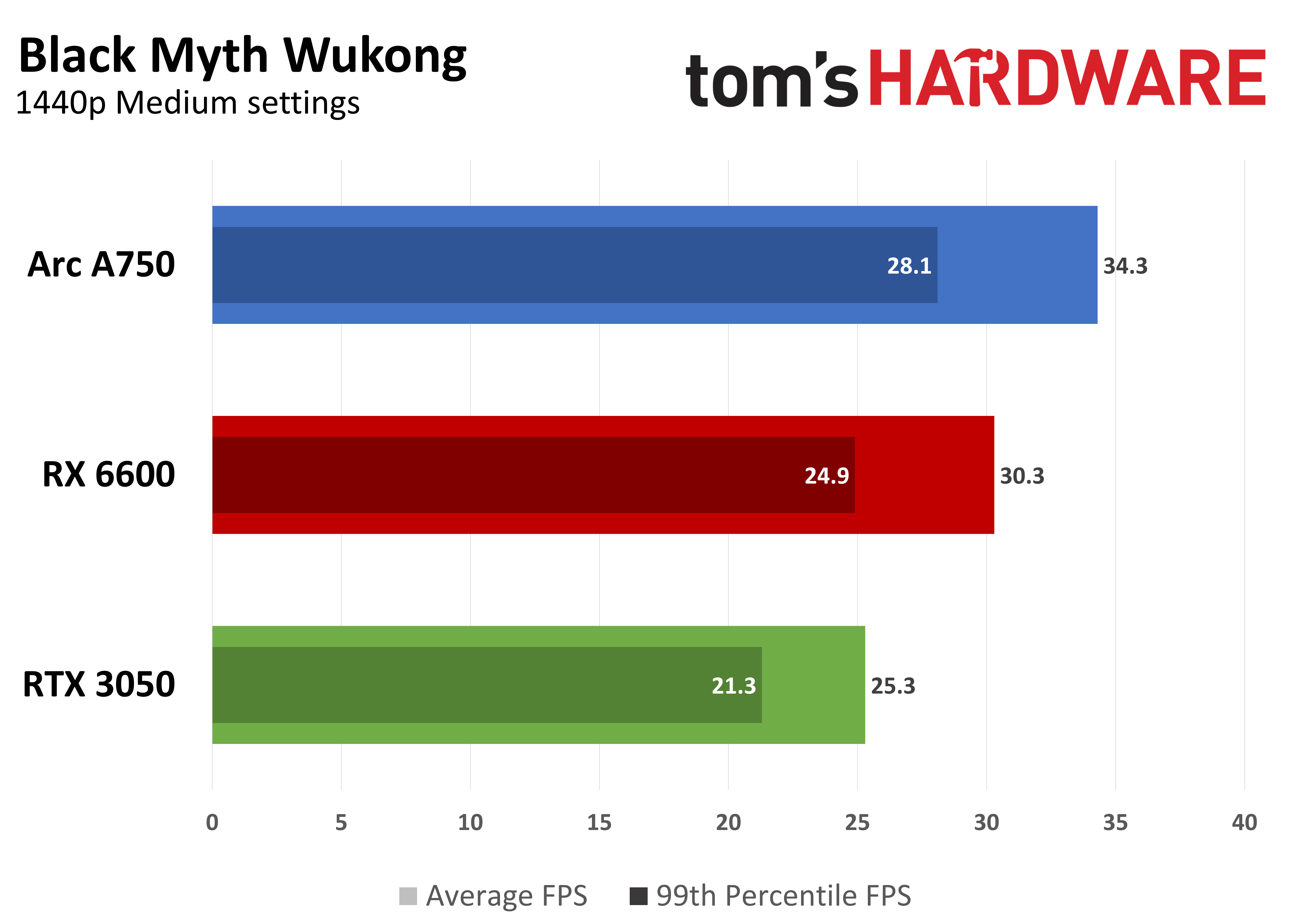

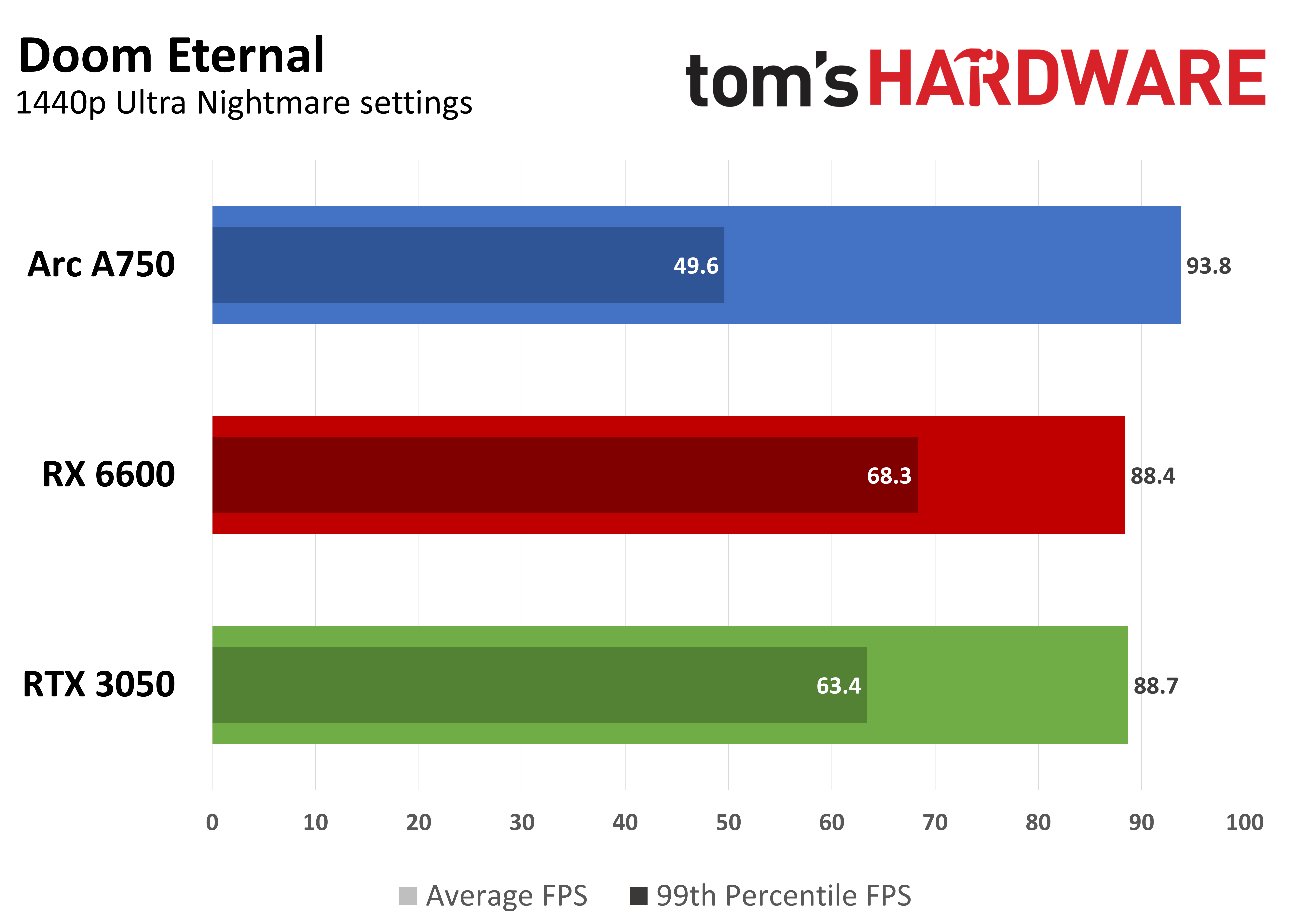

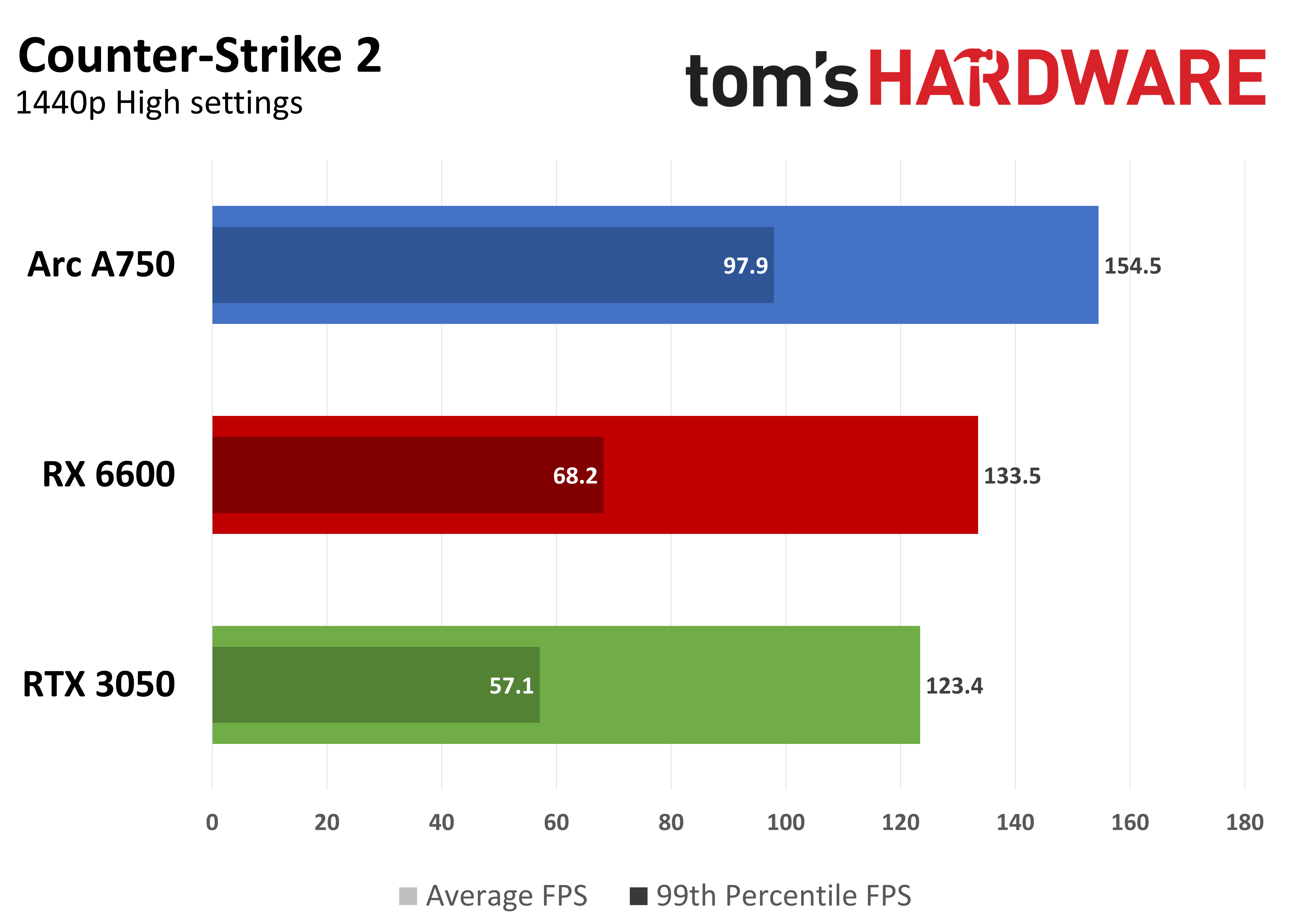

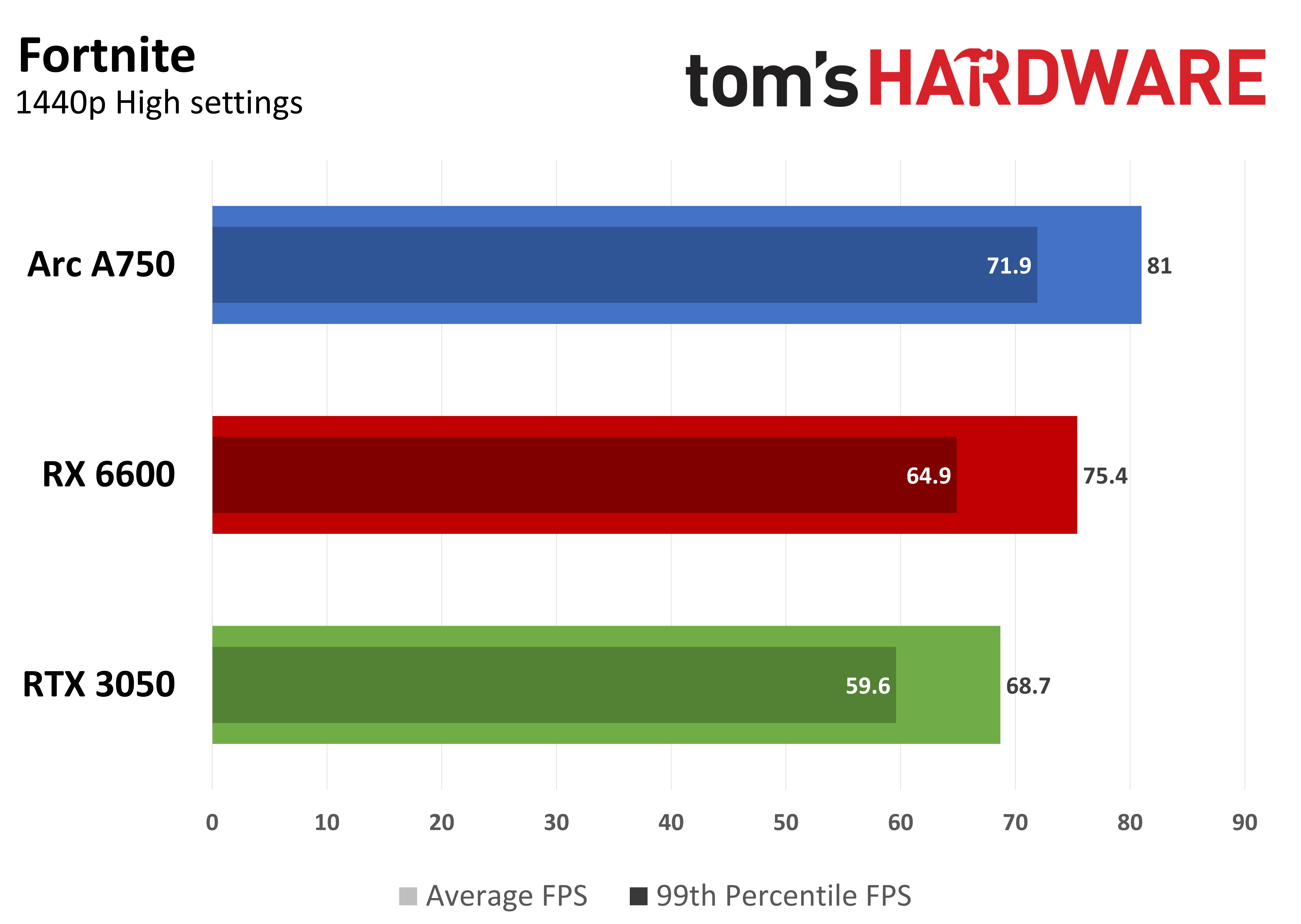

We whipped up a quick grouping of a few of today's most popular and most advanced games at 1080p and high or ultra settings without upscaling enabled, along with a couple of older titles, to get a sense of how these cards still perform. We also ran 1440p tests across a mix of medium and high settings (plus upscaling in Alan Wake 2) to see how these cards handled a heavier load.

The Arc A750 consistently leads in our geomean of average FPS results at 1080p. It’s 6% faster than the RX 6600 overall and 22% faster than the RTX 3050. At 1440p, the A750 leads the RX 6600 by 18% and the RTX 3050 by 25%.
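A quick note on that geomean: the geometric mean summarizes the ratios between frame rates rather than the raw frame rates themselves, so a single game running at a very high frame rate can't swamp the results from a demanding one. The Python sketch below is a minimal illustration under our own assumptions, not the tooling behind our charts. The helper names (`geomean`, `lead_pct`, `trail_pct`) are our own, the two-game FPS figures are hypothetical, and only the 22%, 6%, and 18% leads come from the results above. It also shows how those stated leads imply a roughly 15% gap between the RX 6600 and the RTX 3050 at 1080p.

```python
import math

def geomean(values):
    """Geometric mean: the n-th root of the product, computed via logs to avoid overflow."""
    if not values or any(v <= 0 for v in values):
        raise ValueError("geomean needs a non-empty list of positive numbers")
    return math.exp(sum(math.log(v) for v in values) / len(values))

def lead_pct(a, b):
    """Percentage by which score a leads score b."""
    return (a / b - 1) * 100

def trail_pct(a, b):
    """Percentage by which score a trails score b."""
    return (1 - a / b) * 100

# Hypothetical per-game average FPS (NOT our test results): one light game, one heavy game.
card_a = [120.0, 40.0]
card_b = [100.0, 50.0]
arith_lead = lead_pct(sum(card_a) / 2, sum(card_b) / 2)
geo_lead = lead_pct(geomean(card_a), geomean(card_b))
print(f"Arithmetic-mean lead: {arith_lead:+.0f}%  Geomean lead: {geo_lead:+.0f}%")

# Relative 1080p standings stated above, normalized so the RTX 3050 = 1.0.
rtx3050 = 1.0
a750 = 1.22 * rtx3050   # A750 leads the RTX 3050 by 22%
rx6600 = a750 / 1.06    # A750 leads the RX 6600 by 6%
print(f"1080p: RX 6600 leads RTX 3050 by {lead_pct(rx6600, rtx3050):.0f}%")  # prints 15%

# At 1440p the A750 leads the RX 6600 by 18%, so the RX 6600 trails the A750 by ~15%.
print(f"1440p: RX 6600 trails A750 by {trail_pct(1.0, 1.18):.0f}%")  # prints 15%
```

In the hypothetical two-game example, the arithmetic mean hands card A a 7% lead purely because of its high-frame-rate game, while the geomean shows it about 2% behind. That's why the geomean is the better summary for relative comparisons like this one.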

The Arc A750 also leads the pack in the geomean of our 99th-percentile FPS results. It delivered the smoothest gaming experience across both resolutions.

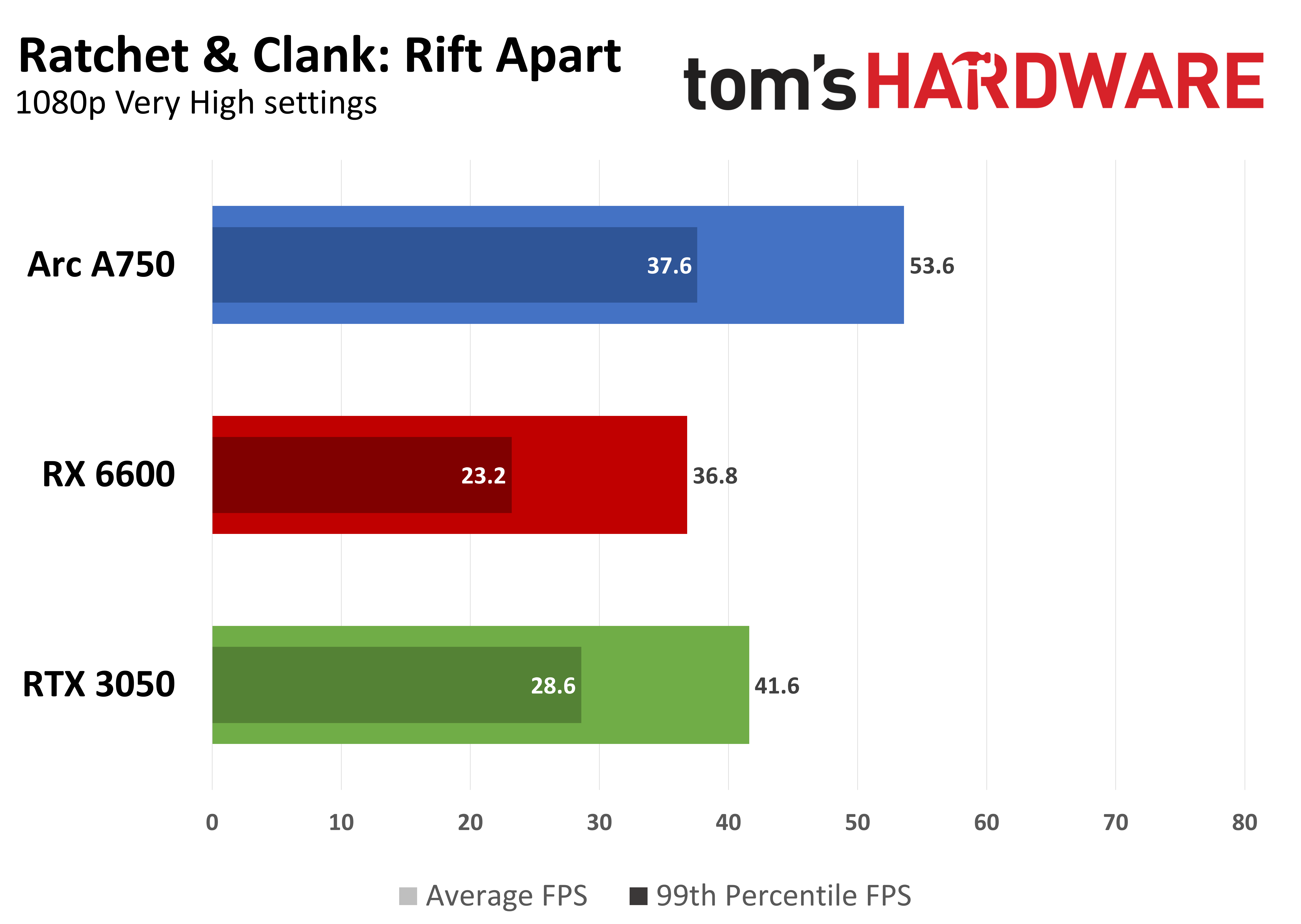

Some notes from our testing: Alan Wake 2 crushes all of these cards, and you’re going to want some kind of upscaling to make it playable. Given the option, we’d also turn Nanite and Lumen off in any Unreal Engine 5 title that supports them, as they either tank performance (in the case of the RTX 3050 and A750) or introduce massive graphical errors (as seen on the Radeon RX 6600 in Fortnite).

The major Fortnite graphics corruptions we saw on the RX 6600 have been reported for months across multiple driver versions on all graphics cards using Navi 23 GPUs, not just the RX 6600, and it’s not clear why neither AMD nor Epic has fixed them. The RX 6600 is also the single most popular Radeon graphics card in the Steam hardware survey, so we’re surprised this issue is still around. We’ve brought it up with AMD and will update this article if we hear back.

⭐ Winner: Intel

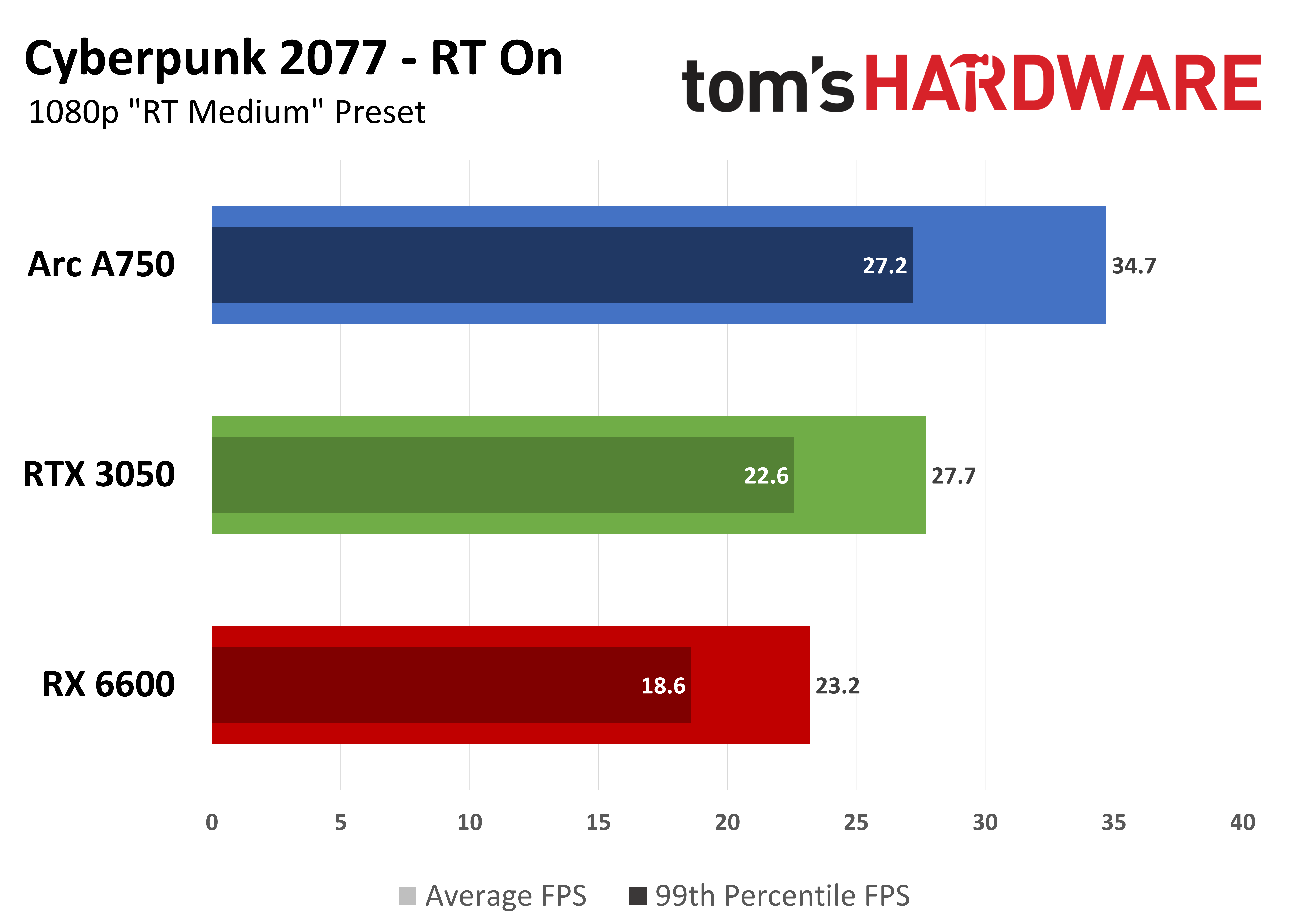

Ray tracing performance

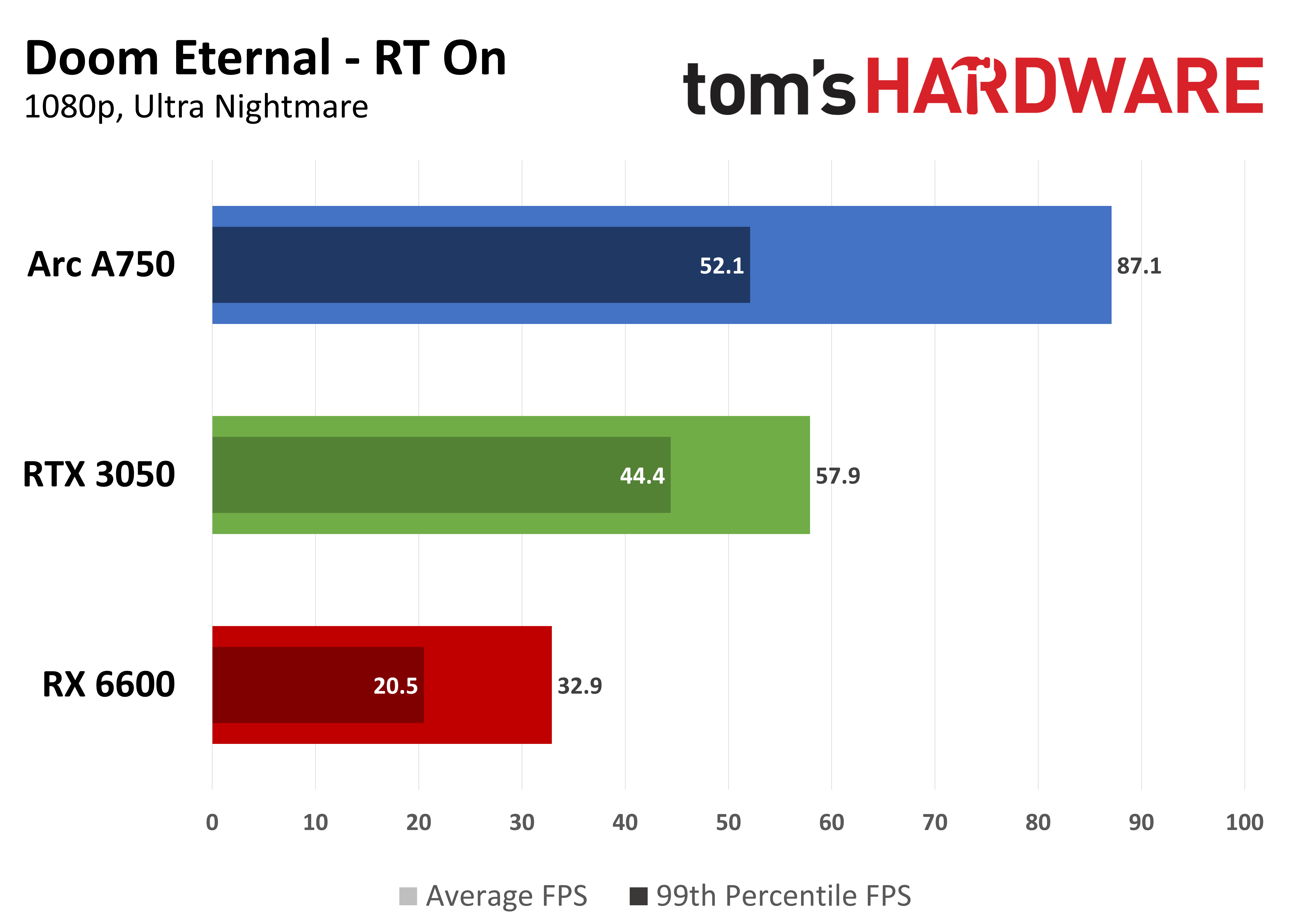

Let’s be blunt: don’t expect a $200 graphics card to deliver acceptable RT performance. 8GB of VRAM isn't enough for the spiffiest RT effects in today’s titles, and the visual payoff usually isn't worth the performance hit. Enabling upscaling at 1080p claws back some of that lost performance, but it generally compromises image quality. It's better to put other priorities first (or to save up for a more modern, more powerful graphics card).

Even with those cautions in mind, we were surprised to see that the Arc A750 can still deliver a reasonably solid experience with RT on in older titles. Doom Eternal still runs at high frame rates with its sole RT toggle flipped on, and Cyberpunk 2077 offers a solid enough foundation for enabling XeSS at 1080p and medium RT settings if you’re hell-bent on tracing rays.

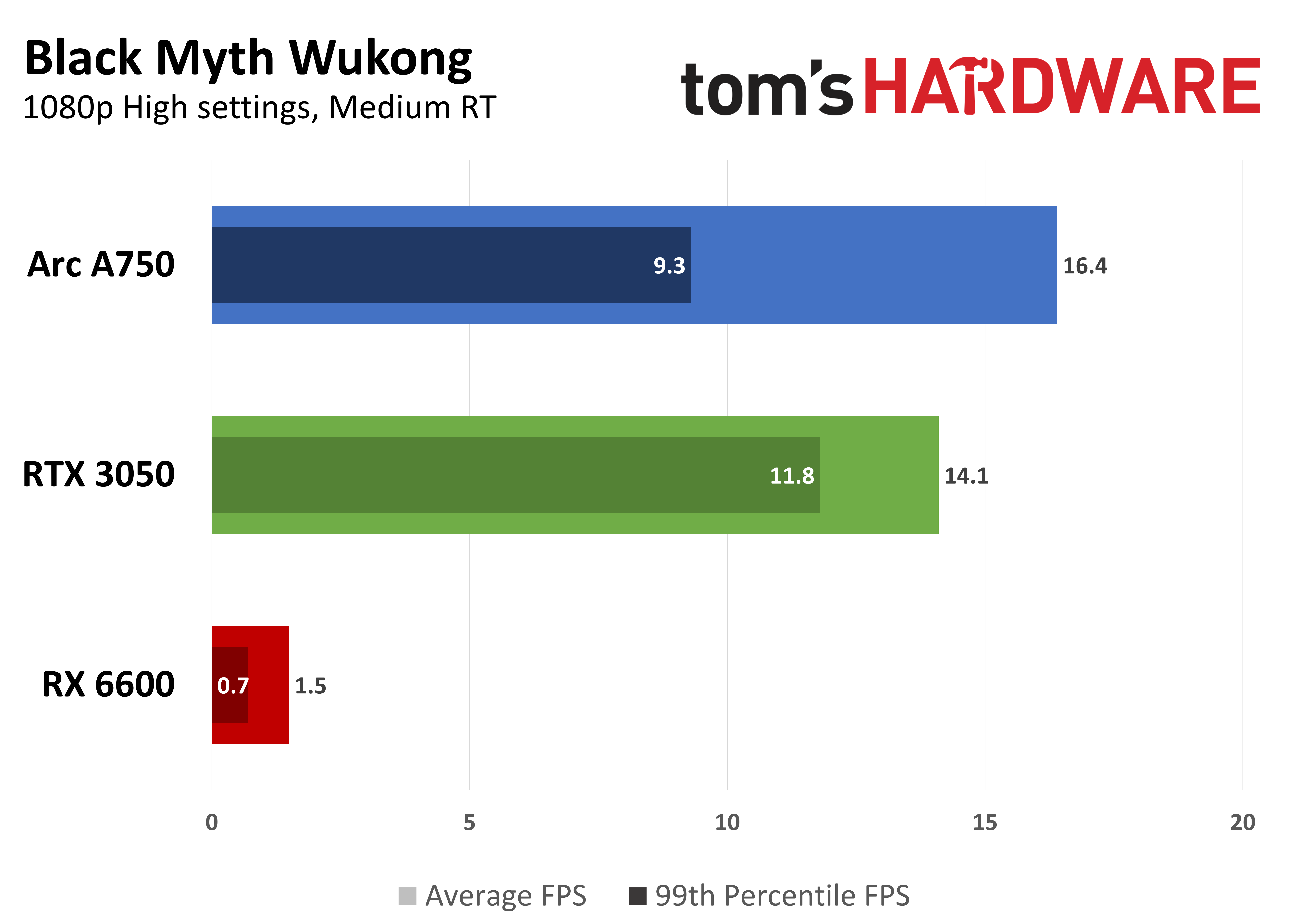

Black Myth Wukong overwhelms the A750 even with low ray tracing settings and XeSS Balanced enabled, though, so performance tanks. XeSS also introduces plenty of intrusive visual artifacts that make it unusable in this benchmark, and the game’s FSR implementation is no better. It’s in modern RT titles like this that 8GB cards like the A750 are most likely to struggle.

The RTX 3050 does OK with the relatively light RT load of Doom Eternal, but it can’t handle Cyberpunk 2077 well enough to create a good foundation for upscaling, and Black Myth Wukong is also out of the question.

The RX 6600 has the fewest and least advanced RT accelerators of the bunch, so it lands way at the back of the pack.

⭐ Winner: Intel

Upscaling

The RTX 3050 is the only card among these three that can use Nvidia's best-in-class DLSS upscaler, which recently got even better in some games thanks to the DLSS 4 upgrade and its transformer-powered AI model. DLSS is an awesome technology in general, and Nvidia claims that over 800 games support it; however, the performance boost it offers on the RTX 3050 isn't particularly great. This is not that powerful a GPU to begin with, and multiplying a low frame rate by a scaling factor just results in a slightly less low frame rate.

Four years after its introduction, some version of AMD's FSR is available in over 500 games, and it can be enabled on virtually every GPU. That ubiquity is good news for the RX 6600 (and everybody else), but there's a catch: FSR's image quality has so far tended to trail that of DLSS and XeSS. FSR 4 appears set to close that gap, but the Radeon RX 6600 won't get access to it, since FSR 4 is reserved for RX 9000-series cards.

Intel's XeSS upscaler can be enabled on graphics cards from any vendor if a game supports it, although the best version of the XeSS model only runs on Arc cards. XeSS is available in over 200 titles, so even though it's not as broadly adopted as DLSS or FSR, it’s fairly likely you'll find it as an option. We'd prefer it over FSR on an Arc card where it's available, and you should try it on Radeons to see if the results are better than AMD's own tech.

⭐ Winner: Nvidia (generally), AMD (in this specific context)

Frame generation

The RTX 3050 doesn't support DLSS Frame Generation at all. If you want to try framegen on this card, you'll have to rely on cross-vendor approaches like AMD's FSR 3 Frame Generation.

Intel's Xe Frame Generation comes as part of the XeSS 2 feature set, and those features are only baked into 22 games so far. Unless one of your favorite titles already has XeSS 2 support, it's unlikely that you'll be able to turn on Intel's framegen tech on your Arc card. As with the RTX 3050, your best shot at trying framegen comes from AMD's FSR 3.

AMD's FSR Frame Generation tech comes as part of the FSR 3 feature set, which has been implemented in 140 games so far. As we've noted, FSR 3 framegen is vendor-independent, so you can enable it on any graphics card, not just the RX 6600.

AMD's more basic Fluid Motion Frames technology also works on the RX 6600, but only in games that offer an exclusive fullscreen mode. Since Fluid Motion Frames is implemented at the driver level, it lacks access to important motion vector information that FSR 3 Frame Generation gets. FMF should be viewed as a last resort.

⭐ Winner: AMD

Power

The RTX 3050 is rated for 115 W of board power, but it doesn’t deliver particularly high performance to go with that rating. It’s just a low-power, low-performance card.

The Radeon RX 6600 strikes the best balance of performance and power in this group with its 132 W board power. It needs 15% more power than the RTX 3050 to deliver about 14% more performance at 1080p, so its efficiency is roughly on par with Nvidia's card while it runs noticeably faster.

Intel’s Arc A750 needs a whopping 225 W to deliver its strong performance in gaming, or nearly 100 W more than the RX 6600. That’s 70% more power for just 6% higher performance at 1080p, on average. Worse, without BIOS and Windows tweaks to mitigate the behavior, Intel’s card also draws much more power at idle than either the RX 6600 or the RTX 3050.
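To put those board-power figures in perspective, here's a rough performance-per-watt calculation in Python. It's a sketch under stated assumptions: it uses rated board power rather than measured draw, and it normalizes 1080p performance to the RX 6600 using the percentage gaps quoted above, so rounding in those percentages carries through. The `cards` table and `perf_per_watt` helper are our own illustrative names. By this crude measure, the RX 6600 and RTX 3050 land within about 1% of each other, while the A750 delivers roughly 38% less performance per rated watt than the RX 6600.

```python
# Rated board power (W) and relative 1080p performance, normalized to the RX 6600 = 1.0.
# Assumption: the gaps quoted in the text (RX 6600 ~14% ahead of the RTX 3050,
# A750 6% ahead of the RX 6600) stand in for the full benchmark data.
cards = {
    "RTX 3050 8GB": {"board_power_w": 115, "perf": 1.0 / 1.14},
    "RX 6600": {"board_power_w": 132, "perf": 1.0},
    "Arc A750": {"board_power_w": 225, "perf": 1.06},
}

def perf_per_watt(card):
    """Relative performance divided by rated board power (not measured power draw)."""
    return card["perf"] / card["board_power_w"]

baseline = perf_per_watt(cards["RX 6600"])
for name, card in cards.items():
    rel = perf_per_watt(card) / baseline
    print(f"{name:13s} {card['board_power_w']:3d} W  perf/W vs RX 6600: {rel:.2f}x")

# The A750 comparison from the text: extra rated power relative to the RX 6600.
power_ratio = cards["Arc A750"]["board_power_w"] / cards["RX 6600"]["board_power_w"]
print(f"A750 draws {power_ratio - 1:.0%} more rated power for 6% more performance")
```

Because board power is a rating rather than a measurement, treat these ratios as a rough ranking; actual draw varies by game, settings, and the specific partner card.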

⭐ Winner: AMD

Drivers and software

Nvidia's Game Ready drivers reliably appear alongside the latest game releases, and Nvidia has a history of quickly deploying hotfixes to address specific show-stopping issues. Users have reported that Nvidia's drivers have been getting a little shaky alongside the release of RTX 50-series cards, though, and we’ve seen evidence of that same instability in our own game testing.

Games aren’t the only place where drivers matter. Nvidia’s massive financial advantage over the competition means that non-gamers who still need GPU acceleration, like those using Adobe or other creative apps, can generally trust that their GeForce card will offer a stable experience with that software.

The Nvidia App (formerly GeForce Experience) includes tons of handy features, like one-click settings optimization and game recording tools. Nvidia also provides useful tools like Broadcast for GeForce RTX owners free of charge. We don’t think you should pick the RTX 3050 for gaming on the basis of Nvidia’s drivers or software alone, though.

Intel has kept up a regular pace of new driver releases with support for the latest games, although more general app support may be a question mark. Intel Graphics Software has a slick enough UI and an everything-you-need, nothing-you-don't feature set for overclocking and image quality settings. We wouldn't choose an Arc card on the basis of Intel's software support alone, but the company has proven its commitment to maintaining its software alongside its hardware.

AMD releases new graphics drivers on a monthly cadence, but some big issues may be getting through QA for older products like the RX 6600. Even in the limited testing we did for this face-off, we saw show-stopping rendering bugs in the latest version of Fortnite with Nanite virtualized geometry enabled. Users have been complaining of this issue for months, and it seems widespread enough that someone should have noticed by now.

The AMD Software management app boasts a mature, slick interface and useful settings overlay, along with plenty of accumulated features like Radeon Chill that some enthusiasts might find handy.

⭐ Winner: Nvidia

Accelerated video codecs

You probably don’t need a $200 discrete GPU for video encoding alone. If you have a modern Intel CPU with an integrated graphics processor, you already get high-quality accelerated video encoding and decoding without buying a discrete GPU.

That said, if you don’t have an Intel CPU with integrated graphics and you must have a high-quality accelerated video codec for transcoding, the RTX 3050 could be worth it as a light-duty option. If NVENC is all you want or need, though, the cheaper (and less powerful) RTX 3050 6GB can be had for a few bucks less.

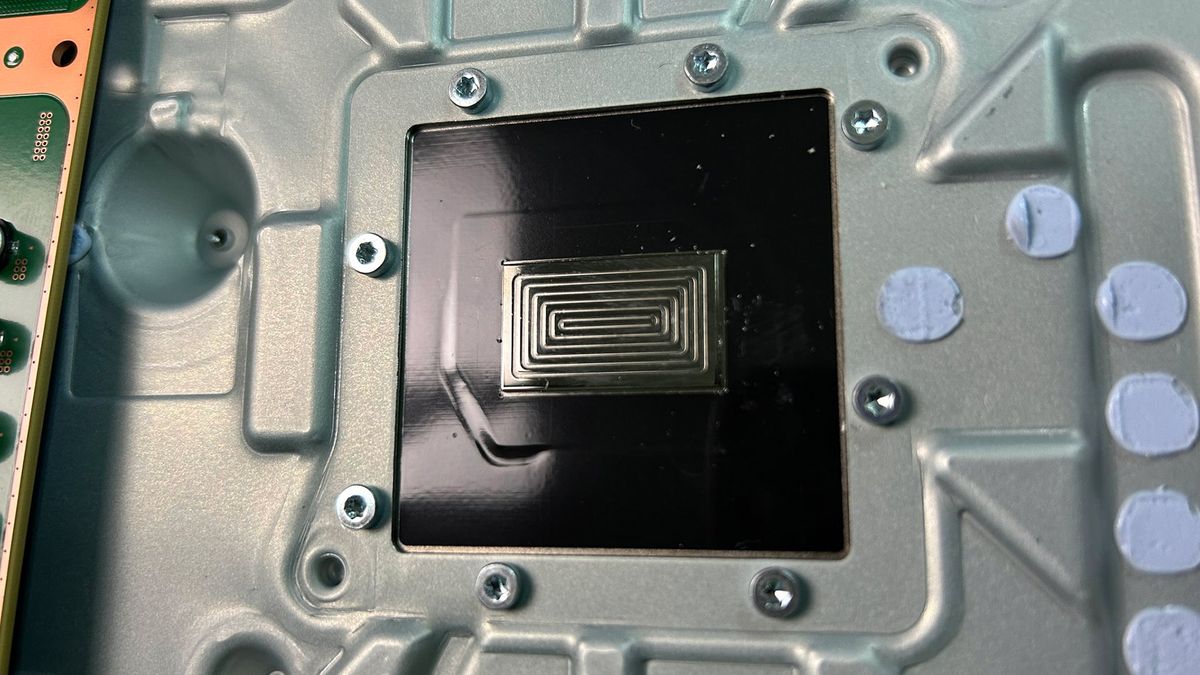

The Arc A750's video engine supports every modern codec we'd want, and it offers high quality and performance. The high power requirements of the A750 (even at idle and under light load) make it unappealing for use in something like a Plex box, though. If accelerated media processing is all you need, you can still pick up an Arc A380 for $140.

The older media engine on the Radeon RX 6600 (and in Ryzen iGPUs) produces noticeably worse results than those of Nvidia or Intel. It works fine in a pinch, but you will notice the lower-quality output versus the competition. If you're particular about your codecs, look elsewhere.

⭐ Winner: Two-way tie (Intel and Nvidia)

Virtual reality

While VR hasn't changed the world as its boosters once promised it would, the enduring popularity of apps like Beat Saber and VRChat means that we should at least give it a cursory look here.

The RTX 3050 and Radeon RX 6600 technically support basic VR experiences just fine, although you may find their limited power requires enabling performance-boosting tech like timewarp and spacewarp to get a comfortable experience.

Intel doesn't support VR HMDs on the Arc A750 (or any Arc card at all, for that matter), so it's a total no-go if you want to experience VR on your PC.

⭐ Winner: Two-way tie (AMD and Nvidia)

Bottom line

| Category | Radeon RX 6600 | GeForce RTX 3050 8GB | Arc A750 |
|---|---|---|---|
| Raster Performance | | | ✅ |
| Ray Tracing | | | ✅ |
| Upscaling | ✅ | | |
| Frame Generation | ✅ | | |
| Power | ✅ | | |
| Drivers | | ✅ | |
| Accelerated Codecs | | ✅ | ✅ |
| Virtual reality | ✅ | ✅ | |
| Total | 4 | 3 | 3 |

Let’s be frank: it’s a rough time to be buying a “cheap” graphics card for gaming. To even touch a modern GPU architecture, you need to spend around $300 or more. $200 is the bottom of the barrel.

8GB of VRAM is a compromise these days, but our experience shows that you can get by with it at 1080p if you’re willing to tune settings. It isn't reasonable to slam every slider to ultra and expect a good experience here. Relax some settings, enable upscaling when you need it, and you can still have a fun time at 1080p with just two Franklins in your wallet.

So who's our winner? Not the GeForce RTX 3050. This card trails both the Radeon RX 6600 and Arc A750 in raster performance, and it trails the A750 in ray tracing as well. You can't enable DLSS Frame Generation on the RTX 3050 at all, and we're not sure that getting access to the image quality of GeForce-exclusive DLSS 4 upscaling is worth dealing with this card’s low baseline performance. Unless you absolutely need a specific feature or capability this card offers, skip it.

Even four years after its launch, the Radeon RX 6600 is still solid enough for 1080p gaming. It trailed the Arc A750 by about 6% on average at 1080p (and about 15% at 1440p).

If it weren’t for this performance gap, the RX 6600's strong showing in other categories would make it our overall winner. But not every win carries the same weight, and performance matters most of all when discussing which graphics card is worth your money.

That said, the RX 6600's performance per watt still stands out. It needs about 90 W less power than the A750 to do its thing, and it's well-behaved at idle, even with a 4K monitor. If you have an aging or weak PSU, the RX 6600 might be your upgrade ticket.

AMD's widely adopted and broadly compatible FSR upscaling and frame generation features help the RX 6600’s case, but they also work on the RTX 3050 and A750, so it’s kind of a push. The RX 6600's main downsides are its dated media engine and poor RT performance, and we also saw troubling graphical glitches in titles as prominent as Fortnite on this card that we didn’t experience on the Intel or Nvidia competition.

That leaves us with the Arc A750. This card delivers the most raw gaming muscle you can get for $200 at both 1080p and 1440p, but it comes with so many “buts.” Its high power requirements might make gamers with older or lower-end PSUs think twice. Intel's graphics driver can be more demanding on the CPU than the competition, meaning older systems might not be able to realize this card's full potential. And older systems that don’t support Resizable BAR won’t work well with the A750 at all.

Our experience shows that the A750 can stumble with Unreal Engine 5's Lumen and Nanite tech enabled, and not every game exposes them as a simple toggle like Fortnite does. More and more studios are using UE5 as the foundation for their games, so there’s a chance this card could underperform in future titles in spite of its still-strong potential.

If you can’t spend a dollar more than $200 and you don’t mind risking the occasional performance pitfall in exchange for absolute bang-for-the-buck, the Arc A750 is still worth a look. If you want a more mature, well-rounded card, the Radeon RX 6600 is also a good choice for just a few dollars more. But if you have the luxury of saving up enough to get even an RTX 5060 at $300, we’d think long and hard about spending good money to get an aging graphics card.

Bottom line: None of these cards could be described as outright winners. Intel, AMD, and Nvidia all have plenty of opportunity to introduce updated GPUs with modern architectures in this price range, but there are no firm signs that any of them plan to (at least on the desktop). Until that happens, PC gamers on strict budgets will have to pick through older GPUs like these on the discount rack when buying new, or hold out for a used card with all its attendant risks.
