Not all solid-state drives are the same. A laptop SSD might open a couple of documents, sync a few gigabytes of video to one of our best cloud storage picks, then idle for hours with maybe an occasional OS update to download and install.

An enterprise SSD, however, does the opposite. It runs around the clock for weeks, months, or even years on end. Instead of the various-sized, random file operations a consumer-grade SSD normally sees, a server SSD often chews through specific and predictable workloads. A content server SSD might have several large video files written to it once every few months, then read sequentially thousands of times an hour. A web hosting server will often deal with hundreds of thousands of connection requests on a weekly or daily basis, writing a tiny log entry to the SSD in a random fashion each time and completely filling a smaller-capacity enterprise SSD several times over every day.

Endurance is engineered and guaranteed very differently

Consumer-oriented drives are often engineered as all-purpose SSDs and tuned for everyday computing patterns, like short spurts of writes when saving work files, alongside lots of reads from opening programs and various OS operations. These are almost always followed by long idle periods when a computer is not in use, with only routine background operations engaging your SSD.

Their endurance rating is typically expressed as a total terabytes written (TBW) allowance across the standard warranty period (usually three to five years). Only very rarely will consumer-grade drive spec sheets give any kind of drive-writes-per-day (DWPD) expectation, as these drives might go weeks or months without ever "filling" the drive's capacity even once.

Enterprise SSDs are treated very differently. They’re typically sold according to endurance classes, such as half a drive write per day, one per day, three, and even ten a day. What's more, they are usually backed for five years of continuous service and tuned for specific business use cases at the expense of others, like very fast random write IOPS while having modest or even unimpressive sequential read speeds.

None of this is exactly exciting material for any customer to read through. Things like greater permanent over-provisioning, tighter control of write amplification, and steady-state wear leveling firmware optimizations don't make for sizzling marketing copy like "world's fastest SSD," three words that can move the best SSDs on the consumer market off the shelves in record time all on their own.

This divide has tangible consequences. If your workload rewrites a meaningful fraction of capacity every day, a consumer drive can do the job, but it will burn through its TBW budget very quickly and may age out of operation well before the calendar warranty suggests. Cheaper client SSDs then need to be purchased and swapped out more often, increasing maintenance costs.
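To put rough numbers on that, here is a minimal sketch of the arithmetic. The function name and the 600 TBW, 1 TB drive are hypothetical, and the estimate assumes the TBW rating is counted in host writes and that the daily write volume stays constant.

```python
def years_until_tbw_exhausted(tbw_rating_tb: float, daily_writes_tb: float) -> float:
    """Years of service before cumulative host writes reach the TBW rating."""
    if daily_writes_tb <= 0:
        raise ValueError("daily_writes_tb must be positive")
    return tbw_rating_tb / (daily_writes_tb * 365)

# Hypothetical 1 TB client drive rated for 600 TBW.
light_use = years_until_tbw_exhausted(600, 0.03)  # roughly 30 GB written per day
heavy_use = years_until_tbw_exhausted(600, 1.0)   # one full drive write per day

print(f"light use: {light_use:.1f} years, heavy use: {heavy_use:.1f} years")
```

Under these assumptions, the light desktop workload never gets near the rating, while the server-style workload uses it up in well under two years.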

Choosing an enterprise SSD with a suitable DWPD rating flips the equation. The device soaks up a lot more punishment than client SSDs, delaying the inevitable creep of I/O errors that comes with heavy wear and, in turn, reducing the number of emergency swaps and painstaking rebuilds.

Both TBW and DWPD measure a drive's endurance, but they also underline the vastly different purposes they serve.
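The two ratings can be converted into each other using the commonly quoted relationship TBW = DWPD x capacity x 365 x warranty years. The sketch below applies it; the function names and example drives are hypothetical, and vendors may round or measure differently.

```python
def dwpd_to_tbw(dwpd: float, capacity_tb: float, warranty_years: float) -> float:
    """Total terabytes written implied by a DWPD rating over the warranty."""
    return dwpd * capacity_tb * 365 * warranty_years

def tbw_to_dwpd(tbw: float, capacity_tb: float, warranty_years: float) -> float:
    """Drive writes per day implied by a TBW rating over the warranty."""
    return tbw / (capacity_tb * 365 * warranty_years)

# Hypothetical client drive: 1 TB, 600 TBW, five-year warranty.
client_dwpd = tbw_to_dwpd(600, 1.0, 5)

# Hypothetical enterprise drive: 1.92 TB, 3 DWPD, five-year warranty.
enterprise_tbw = dwpd_to_tbw(3, 1.92, 5)

print(f"client: {client_dwpd:.2f} DWPD, enterprise: {enterprise_tbw:.0f} TBW")
```

Seen this way, the example client drive works out to about a third of a drive write per day, while the example enterprise drive is rated for more than 10,000 TB of writes.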

Consistency matters more than peak speed

A client SSD can look great if you’re just looking at the peak read/write speeds. Empty the drive, run a short test while it’s cool, and the sequential throughput and burst IOPS are enough to turn heads.

But enterprise production environments aren’t that kind. Real-world servers often run mixed random I/O at variable queue depths and spend most of their lives with 30% of their capacity free at any given time, all while working through background garbage collection and wear leveling.

Amid all this mess, enterprise SSDs are engineered to deliver predictable and even deterministic IO latencies, rather than selling themselves on a single impressive average. These business and industrial-grade SSDs are designed to keep the 99th and even 99.99th percentile latencies inside a strict envelope when the drive is hot, busy, and nearly full, even if the actual operational speed is "lower" than one you'd find on the client side.

This operation environment only works because of permanent over-provisioning, which allows background work to have the breathing room necessary to maintain expected drive performance. It also relies on schedulers that shield small reads from write storms, and on caching strategies that taper controllably rather than collapsing suddenly.

Why should you care? Users and applications almost never experience the mean, and the wider the variance, the less the mean tells you about any single request. They experience the tail instead, where a handful of slow I/O operations can stall a query plan and make a supposedly snappy web app slow to a crawl.

That’s why a “modest” enterprise drive can often be preferable in modern production servers, as evidenced by the continued popularity of PCIe 3.0 and PCIe 4.0 enterprise SSDs even though next-gen PCIe 5.0 drives are available.
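A small illustration of why the average hides the tail: the sketch below uses made-up latencies (98% of operations at 100 microseconds, 2% stalled at 20 milliseconds) and a simple nearest-rank percentile. The numbers and the helper are assumptions for illustration, not measurements from any drive.

```python
import math
import statistics

def percentile(sorted_values, pct):
    """Nearest-rank percentile of an already sorted list (pct from 0 to 100)."""
    rank = max(1, math.ceil(pct / 100 * len(sorted_values)))
    return sorted_values[rank - 1]

# Made-up sample: 9,800 fast operations and 200 stalled ones, in microseconds.
latencies_us = sorted([100] * 9_800 + [20_000] * 200)

mean_us = statistics.mean(latencies_us)
p50_us = percentile(latencies_us, 50)
p99_us = percentile(latencies_us, 99)

print(f"mean={mean_us} us, p50={p50_us} us, p99={p99_us} us")
```

The mean lands at 498 microseconds, a figure that no single operation in the sample actually experienced. The 99th percentile is the number that exposes the 20-millisecond stalls.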

Power-loss protection makes disasters non-events

Client SSDs often buffer drive writes in volatile DRAM modules or an SLC cache to make short burst operations feel instant.

Those buffers are great until the power goes out, though, and any system can lose power before those writes in DRAM or cache are committed to non-volatile NAND. This is a major problem, since once the data vanishes, it might not be possible to recover, and you can even lose the internal mapping tables and metadata that tell the drive where everything can be found.

Some premium client models add limited safeguards, but enterprise SSDs treat sudden outages as an inevitability and build in true power-loss protection (PLP). The PCB carries purpose-sized capacitors that hold enough energy to flush both data and metadata to NAND safely, and the firmware coordinates an orderly shutdown sequence that drains queues in a defined order and preserves the translation layer.

On top of that, the update model is atomic. In a typical enterprise drive, an operation either completes fully or not at all, sharply reducing the risk of torn writes that leave file systems or databases seeing inconsistent blocks when power returns.

The difference shows up the first time a UPS fails or a breaker trips mid-transaction. Systems that log every change, maintain journals, or continuously write critical metadata, such as databases, mail queues, hypervisor hosts, or distributed file systems, are particularly sensitive to torn writes.

Without true PLP, you’re staring at long recovery windows with multiple checks, roll-forwards, and even the possibility of restoring everything from backup while users are offline.

With enterprise-grade PLP, a drive can land safely after losing power, and its internal state remains coherent, allowing services to come back cleanly. Even in racks with redundant power and industrial UPS units, device-level PLP is the last, reliable line of defense against all the messy ways real power problems show up.

Data integrity is protected end-to-end, not just inside the NAND

Every SSD uses error-correcting codes to keep bit errors at bay, but enterprise drives elevate integrity from a local feature to a contract that spans the entire path.

Data is often protected from the host interface through the controller and any onboard DRAM, into the NAND and back, with checksums or parity that detect silent corruption and, when possible, correct it before the payload is ever exposed to the operating system.

Many enterprise models also support host-visible protection information, often referred to as DIF/DIX or PI, which appends verification bytes to each block. That metadata travels with the data across the stack, allowing the OS, HBA, and drive to verify that nothing changed during its journey.

Inside the device, stronger ECC and RAID-like parity across dies provide resilience against multi-bit errors and failing components, and the acceptable uncorrectable bit error rates are set far tighter than consumer norms.

Silent corruption is more dangerous than a clean crash because you might not notice it immediately, and your backup or replication jobs can faithfully copy the damaged data to every corner of your environment.

End-to-end protection converts that vague risk into a measurable event with clear telemetry. The drive, controller, and host agree on checksums. If something doesn’t line up, the bad block is rejected rather than quietly committed.
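The idea of verification bytes that travel with each block can be sketched in a few lines. This is a simplified stand-in that appends a CRC32 from Python's zlib module; real protection information uses its own field layout (a guard CRC plus application and reference tags), so treat the format and the function names here as assumptions.

```python
import zlib

def protect(block: bytes) -> bytes:
    """Append a 4-byte CRC32 to a block before it leaves the sender."""
    return block + zlib.crc32(block).to_bytes(4, "big")

def verify(protected: bytes) -> bytes:
    """Return the payload if its checksum matches, otherwise reject the block."""
    payload, stored = protected[:-4], protected[-4:]
    if zlib.crc32(payload).to_bytes(4, "big") != stored:
        raise ValueError("checksum mismatch: block rejected")
    return payload

data = bytes(range(256)) * 16           # one 4 KiB block of sample data
sent = protect(data)

# Simulate silent corruption somewhere along the path: flip a single bit.
damaged = bytearray(sent)
damaged[10] ^= 0x01

intact_ok = verify(sent) == data
try:
    verify(bytes(damaged))
    corruption_caught = False
except ValueError:
    corruption_caught = True

print(intact_ok, corruption_caught)
```

The point of the design is that each hop can run the same check, so a mismatch is reported at the hop where it appears instead of surfacing later as bad data.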

That posture is essential for regulated industries where regular audits are required. Analytics pipelines that depend on correctness also benefit, as does anyone who would rather relax than chase phantom data bugs.

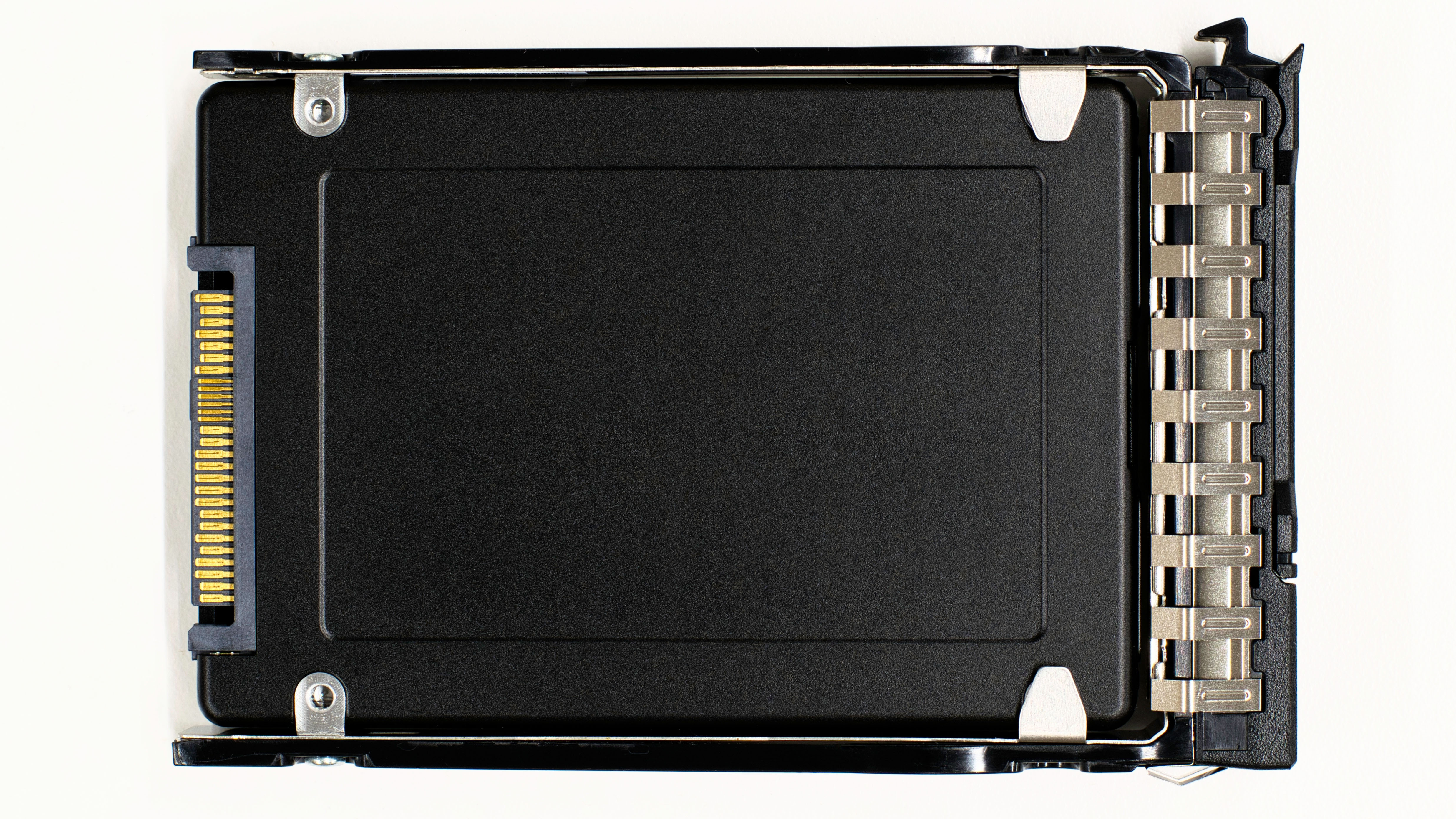

Form factors and interfaces are data center-specific

A client SSD usually lives on an M.2 stick slotted into a consumer-grade motherboard.

These are perfect for general workplace PCs, laptops, and other similar devices, but they’re awkward in a 1U or 2U server where airflow passes from the front to the back of a rack through carefully managed channels while components are replaced from the front without opening the lid.

Enterprise SSDs are designed for this kind of setup. They favor hot-swappable form factors like U.2 or U.3 and the newer EDSFF families (E1.S, E1.L, E3.S) that slot into high-density bays with tool-less carriers and mate to backplanes using blind connectors, all while maintaining firm thermal contact with the chassis.

Front-edge status LEDs also make it easy to identify the right device without tracing cables, and the enclosures themselves act as heat spreaders, so performance remains reliable under sustained load.

Connectivity also changes character when using an enterprise SSD versus client SSDs. Many enterprise drives support dual-port operation, which has long been a staple of SAS and is increasingly available with NVMe, so a single device can maintain two active paths to separate controllers or HBAs.

That dual-path setup is the foundation for high-availability storage arrays and clustered servers, allowing transparent failover if a controller goes down while still allowing access and continuous service during firmware upgrades.

Of course, consumer platforms rarely need these kinds of capabilities, but inside a rack, they make storage easier to scale and safer to operate.

Firmware prioritizes predictability, observability, and control

Client firmware is built to make a single machine feel fast and to hit peak performance during brief benchmarks, while enterprise firmware is built to be quietly dependable for years with consistent behavior across edge cases, deep telemetry, and policy levers.

Detailed health logs track media wear, temperature excursions, error histories, and sanitize operations across a drive’s life, with metrics exposed through standard NVMe log pages and vendor extensions, meaning they’re easy to ingest into monitoring systems for fleet-wide dashboards and alerts.

What’s more, enterprise firmware is signed, deployments are staged, and support windows are long enough to be meaningful.

In day-to-day operations, visibility also makes a huge difference. Instead of waiting for a device to fail noisily, you replace it when leading indicators cross critical thresholds in advance of failure.
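As a rough sketch of what acting on leading indicators can look like, the function below checks a handful of health metrics against thresholds. The metric names echo fields found in the NVMe health log (percentage used, available spare, temperature), but the dictionary keys, the default limits, and the function itself are hypothetical, and collecting the values from real drives is left out.

```python
def replacement_reasons(health: dict, wear_limit: int = 80, temp_limit_c: int = 70) -> list:
    """Return the reasons, if any, to schedule a drive for proactive replacement."""
    reasons = []
    if health["percentage_used"] >= wear_limit:
        reasons.append(f"wear at {health['percentage_used']}% of rated endurance")
    if health["available_spare"] <= health["spare_threshold"]:
        reasons.append(f"spare capacity down to {health['available_spare']}%")
    if health["temperature_c"] >= temp_limit_c:
        reasons.append(f"running hot at {health['temperature_c']} C")
    return reasons

# Hypothetical snapshots for two drives in the same fleet.
healthy = {"percentage_used": 12, "available_spare": 100,
           "spare_threshold": 10, "temperature_c": 41}
worn = {"percentage_used": 86, "available_spare": 9,
        "spare_threshold": 10, "temperature_c": 52}

for name, snapshot in (("healthy", healthy), ("worn", worn)):
    print(name, replacement_reasons(snapshot) or "no action needed")
```

In practice, the limits would come from your own fleet policy and the vendor's guidance, not from the defaults shown here.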

Those capabilities don’t just prevent outages; they let you focus your work hours on helping teams fix root causes rather than symptoms.

Thermal behavior and power delivery are built for 24/7 operation

Most client SSDs assume a rhythm of action and rest. If you put a consumer-grade M.2 under a sustained mixed workload, it will often throttle once the controller and NAND heat up, dragging throughput and latency down as a result.

Desktop airflow can help, but thermals in consumer cases vary wildly with fan curves and ambient temperature, with even the drive’s position on the motherboard impacting whether you get expected performance or not.

Enterprise SSDs are built for predictable, directed airflow in server chassis and for uninterrupted work. Their enclosures double as heat spreaders and on-board sensors report temperature with a finer granularity than you’ll find on client SSDs. Firmware is also calibrated to sustain performance under heat rather than chasing aggressive boost clocks in quick bursts.

Power delivery is part of the story as well. Enterprise bays and backplanes are designed to supply consistent power to a full array of drives, so a cluster running at 2 a.m. doesn’t discover that peak activity and peak heat arrive together and end up ruining everyone’s night.

A drive that throttles after five minutes tanks performance under load during peak hours, when a predictable response time is most important—and an erratic response time is most noticeable to the end user. Enterprise SSDs keep their promised throughput and latency at realistic fill levels and under real thermal conditions, even if it means sacrificing higher top speeds in the process.

Security and sanitization are a top priority

Encryption boxes appear on plenty of client spec sheets, but enterprise SSDs treat security as an operational necessity, rather than a “nice-to-have” feature.

Self-encrypting implementations are standard on many enterprise drives, with performance that holds up under load and with key-management hooks that integrate cleanly into enterprise security policy.

Profiles like TCG Enterprise or Opal are supported and secure boot of drive firmware is available. There are also sanitize commands that are auditable afterward, so you can prove a device was wiped correctly.

When the time comes to retire or repurpose a drive, enterprise SSDs offer cryptographic erase, which renders data unrecoverable in seconds by destroying the keys needed to unlock the data, rather than relying on slow full-media overwrites or blind faith that a wear-leveled block was truly overwritten.

This matters everywhere, not just in regulated industries. A single mishandled return or a drive that leaves a site without a verifiable wipe can create a legal and reputational mess that dwarfs the cost of the hardware some organizations might think is “too expensive.”

Enterprise features make the right behavior the default, with policy-driven, quick, verifiable, and consistent security across every bay in the rack.

Capacity tradeoffs favor over-provisioning and write-intensity tiers

The consumer market rewards the largest capacity number at the lowest price, so client SSDs expose as much user-addressable space as possible and rely on large dynamic SLC caches to make writes feel fast.

Once those caches saturate, though (especially on QLC-based models), write speed can drop sharply. Enterprise SSDs take the opposite approach, reserving a sizable chunk of the drive as permanent over-provisioning and presenting less capacity to the host.

That internal headroom lets the controller manage garbage collection and wear leveling without getting in the way of foreground I/O, which keeps write amplification low and latency consistent under pressure.
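Two small ratios make this concrete. Over-provisioning is usually quoted as the reserved space relative to the user-visible capacity, and write amplification as NAND writes divided by host writes. The sketch below uses those common definitions with hypothetical capacities and write totals.

```python
def over_provisioning_pct(raw_gb: float, user_gb: float) -> float:
    """Reserved space as a percentage of the capacity exposed to the host."""
    return (raw_gb - user_gb) / user_gb * 100

def write_amplification(nand_writes_tb: float, host_writes_tb: float) -> float:
    """NAND writes performed per unit of data the host asked to write."""
    return nand_writes_tb / host_writes_tb

# Hypothetical drives, each built on 1,024 GB of raw NAND.
client_op = over_provisioning_pct(1024, 960)      # exposes most of the NAND
enterprise_op = over_provisioning_pct(1024, 800)  # holds back a larger reserve

# Hypothetical counters: 150 TB written to NAND for 100 TB of host writes.
waf = write_amplification(150, 100)

print(f"client OP: {client_op:.1f}%, enterprise OP: {enterprise_op:.1f}%, WAF: {waf:.1f}")
```

With these example figures, the enterprise-style layout reserves roughly four times as much space for background work as the client-style one.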

Enterprise product lines are also segmented by expected write intensity. Read-optimized models maximize capacity per dollar for cold data and boot volumes, while mixed-use models balance endurance with cost for virtualization and general-purpose databases. Meanwhile, write-optimized models trade capacity for resilience, offering a higher DWPD rating that soaks up logs, cache churn, and other punishing patterns with ease.

Matching the drive to the job matters at the enterprise level. By picking the endurance class for your peak write behavior and accepting a little less user-visible capacity in exchange for internal headroom, you get a drive that performs better for longer, with less maintenance.
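One way to turn that advice into a quick check is sketched below. It divides peak daily writes by capacity, adds a safety margin, and picks the smallest endurance class that covers the result. The class list reuses the half, one, three, and ten drive-writes-per-day tiers mentioned earlier; the 25% margin and the function name are assumptions.

```python
ENDURANCE_CLASSES = (0.5, 1, 3, 10)  # DWPD tiers, lowest to highest

def pick_endurance_class(peak_daily_writes_tb: float, capacity_tb: float,
                         headroom: float = 1.25):
    """Return the smallest DWPD class that covers the workload, or None."""
    required_dwpd = peak_daily_writes_tb / capacity_tb * headroom
    for dwpd in ENDURANCE_CLASSES:
        if dwpd >= required_dwpd:
            return dwpd
    return None  # no single drive of this capacity fits; spread the writes

# Hypothetical workloads.
boot_volume = pick_endurance_class(0.2, 0.96)   # light writes on a 960 GB drive
database = pick_endurance_class(2.0, 3.84)      # 2 TB per day on a 3.84 TB drive
log_ingest = pick_endurance_class(8.0, 1.92)    # 8 TB per day on a 1.92 TB drive

print(boot_volume, database, log_ingest)
```

A result of None is a useful signal in its own right: it suggests moving to a larger drive or spreading the writes across more devices.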

Validation, lifecycle, and support reflect mission-critical use

Before a product ever ships in volume, enterprise SSDs go through platform qualification with server vendors, who test interoperability with HBAs and backplanes and run burn-ins that aim to find the freak edge cases that can bring down an SSD or even the whole data center.

Availability windows are also measured in years, not quarters, so you can standardize your storage fleet over time while picking up matching spares easily.

Manufacturers publish product change notifications and release notes as well, which avoids silent internal component substitutions that can alter expected performance or endurance characteristics that an organization might be relying on.

They also back these products with fleet-level support rather than the individual drive RMAs and warranties you find with consumer-grade SSDs.

In a production environment, the difference between “we’ll ship a replacement sometime next week” and “next-business-day with a plan for data evacuation if needed” makes all the difference in a lot of cases.
